
ELIXIR Short-Term Feedback Survey

Dear hackathon attendee,

On Day 2 of the hackathon we will open a survey to get your feedback about the event. The survey is a standard ELIXIR short-term feedback survey and its results will be uploaded to the ELIXIR Training Metrics Database. The survey is anonymous.


About the course

Uroš Lotrič, Davor Sluga, Timotej Lazar

University of Ljubljana, Faculty of Computer and Information Science

At the workshop, we will get acquainted with the structure and software of computer clusters and run our first jobs. We will learn to distinguish between login nodes, head (master) nodes, compute nodes, and data storage nodes. We will learn about the role of the operating system, the Slurm middleware, environment modules, containers, and user applications. We will connect to login nodes, transfer files to and from the supercomputer, run jobs, and monitor their execution.

The course of events

The workshop will take place over two afternoons. In the first session, we will get to know the hardware and software, and in the second, we will use the supercomputer for video processing.

Participants

The workshop is designed for researchers, engineers, students, and others who have realized that they need more computing resources than conventional computers can offer.

Acquired knowledge

After the workshop you will:

- understand how a supercomputer works,

- know its main elements and their functions,

- know the Slurm middleware,

- understand environment modules and containers,

- know how to work with files and run jobs,

- be able to prepare job scripts, and

- be able to process videos.

The material is published under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International license.

The workshop is prepared under the European project EuroCC, which aims to establish national centers of competence for supercomputing. More about the EuroCC project can be found on the SLING website.


The term supercomputer refers to the most powerful computer systems available at a given time. A list of the most powerful systems in the world is published at top500.org. When working with supercomputers, we often talk about high-performance computing (HPC), as these computer systems are much more powerful than personal computers. Today's supercomputers consist of a multitude of computers, or nodes, interconnected by specialized networks for fast data transfer. Many nodes are additionally equipped with accelerators for certain types of calculations. Computer systems built in this way are also referred to as computer clusters.

Supercomputers are designed for engineers, scientists, data analysts, and others who deal with vast amounts of data or complex calculations that require billions of operations. They are used for computationally intensive calculations in various scientific fields such as biosciences (genomics, bioinformatics), chemistry (development of new compounds, molecular simulations), physics (particle physics, astrophysics), mechanical engineering (structural design, fluid dynamics), meteorology (weather forecasting, climate studies), mathematics (cryptology), and computer science (pattern detection in data, artificial intelligence, image recognition, natural language processing).

Gaining access

Information about the procedure for obtaining access is usually published on the website of the supercomputing centre. Computer systems under the auspices of SLING use the single sign-on system SLING SSO (Single Sign-On). More details can be found on the pages of SLING and the HPC-RIVR project.

Login nodes

During the course, we will run jobs on the supercomputer through the Slurm middleware. The openly accessible computer clusters that work with the Slurm system are NSC, Maister, and Trdina. To work with Slurm, we connect to a computer cluster via its login node. Names of the login nodes on these clusters:

A computer cluster consists of a multitude of nodes tightly interconnected by a network. Nodes work in the same way and consist of the same elements as personal computers: processors, memory, and input/output units. Cluster nodes, of course, surpass personal computers in the number, performance, and quality of their components, but they usually have no input/output devices such as a keyboard, mouse, or screen.

Node types

We distinguish several types of nodes in a cluster; their structure depends on their role in the system. The following are important for the user:

- head nodes,

- login nodes,

- compute nodes and

- data nodes.

The head node ensures the coordinated operation of the entire cluster. It runs programs that monitor the status of the other nodes, assign jobs to compute nodes, control job execution, and more.

Users log in to the login node with software tools that use the SSH protocol. Through the login node, we transfer data and programs to and from the cluster, prepare, monitor, and manage jobs for the compute nodes, reserve computing time on compute nodes, log in to compute nodes, etc.

The jobs that we prepare on the login node run on the compute nodes. We distinguish several types of compute nodes. In addition to the usual processor nodes, there are more powerful nodes with more memory and nodes with accelerators, such as general-purpose graphics processing units. Compute nodes can be organized into groups called partitions.

We store data and programs on data nodes. Data nodes form a distributed file system (for example, Ceph), which is visible from all login and compute nodes. Files transferred to the cluster via the login node are stored in this distributed file system.

All nodes are interconnected by high-speed network connections, usually an Ethernet network and sometimes also an InfiniBand (IB) network for efficient communication between compute nodes. Network connections should have high bandwidth (the ability to transfer large amounts of data) and low latency (a short delay before data sent from one node reaches another).

Node structure

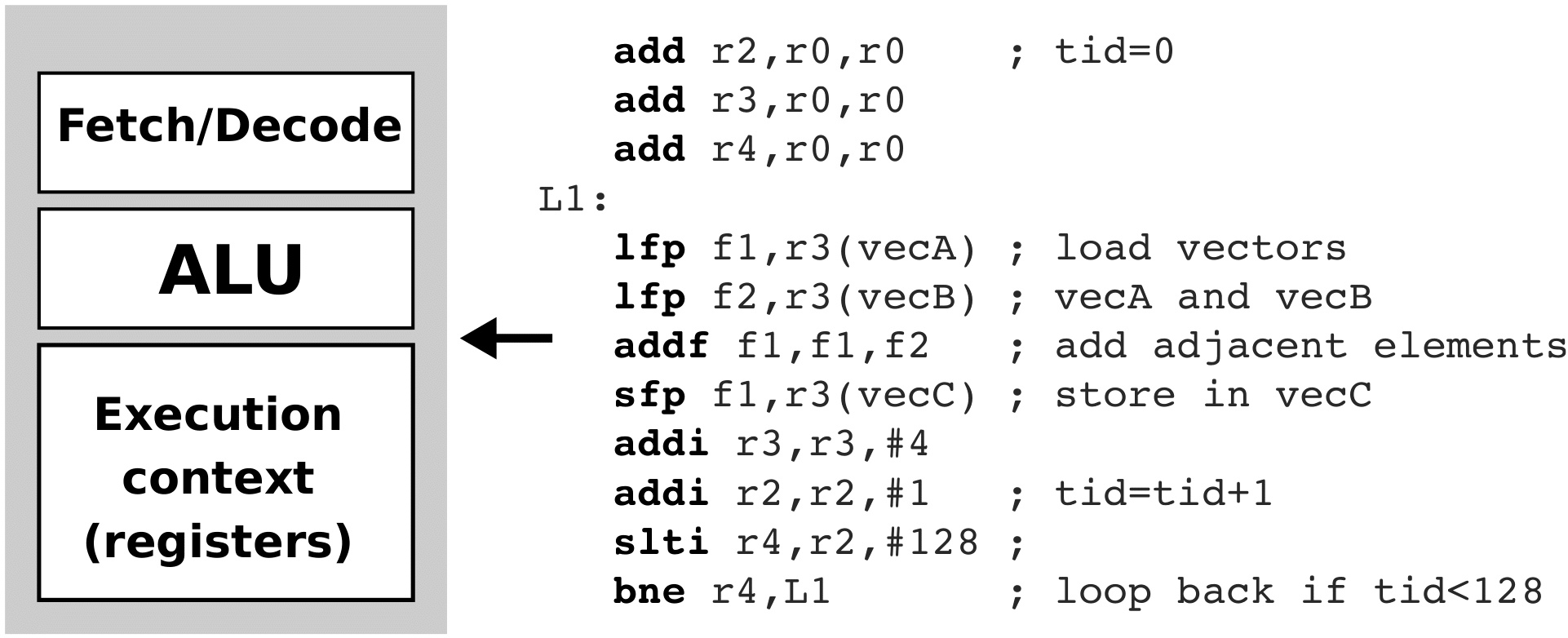

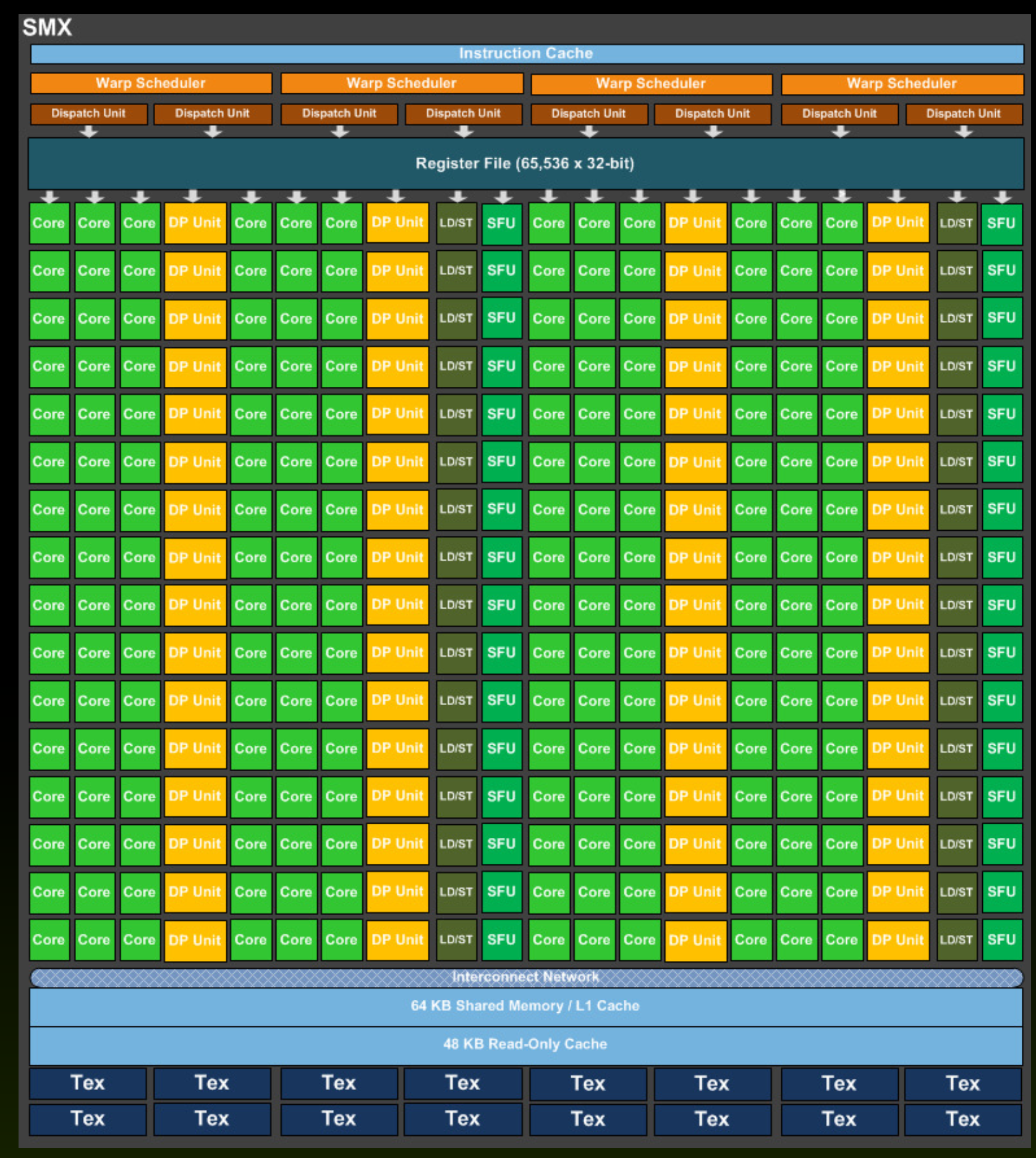

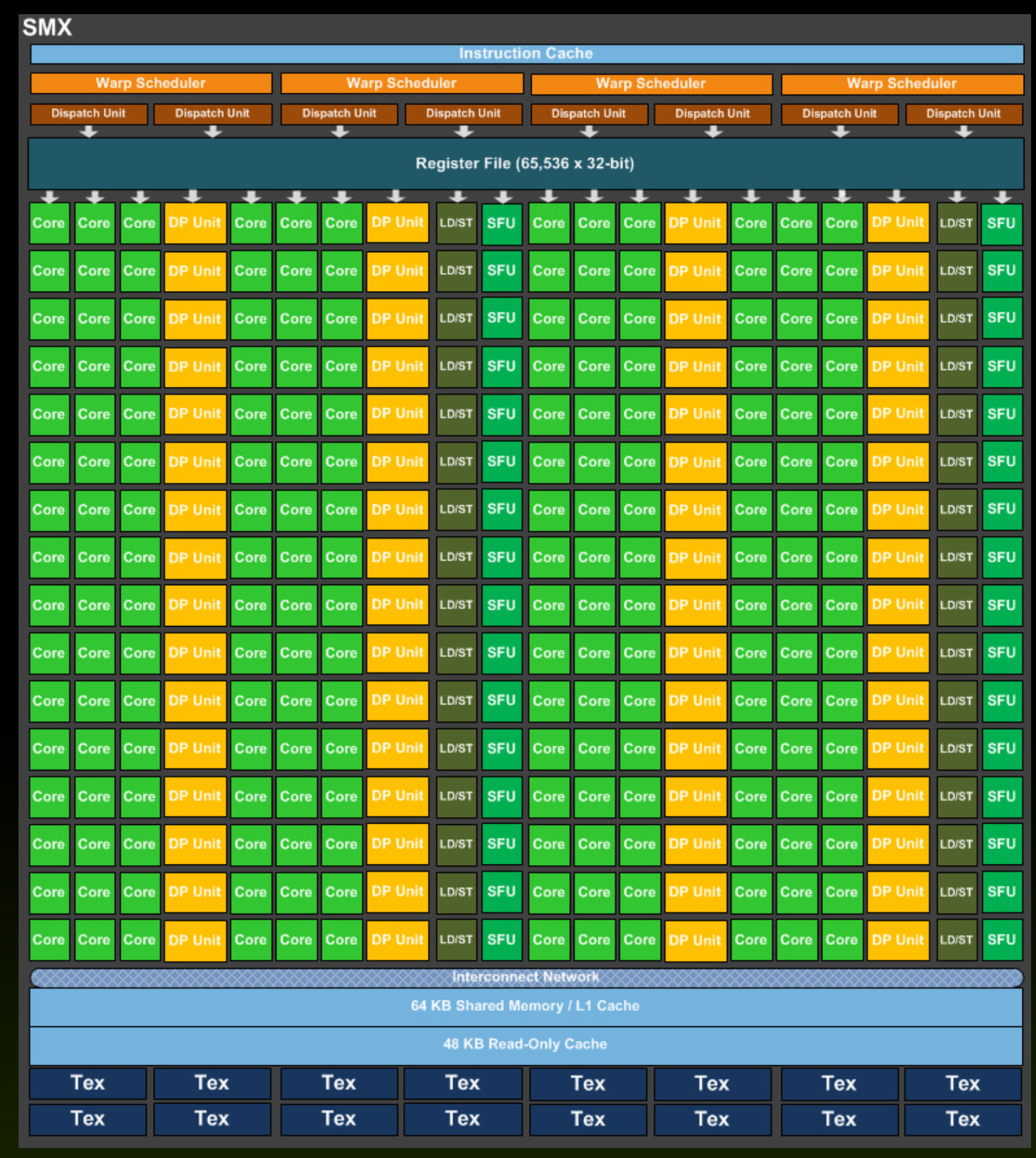

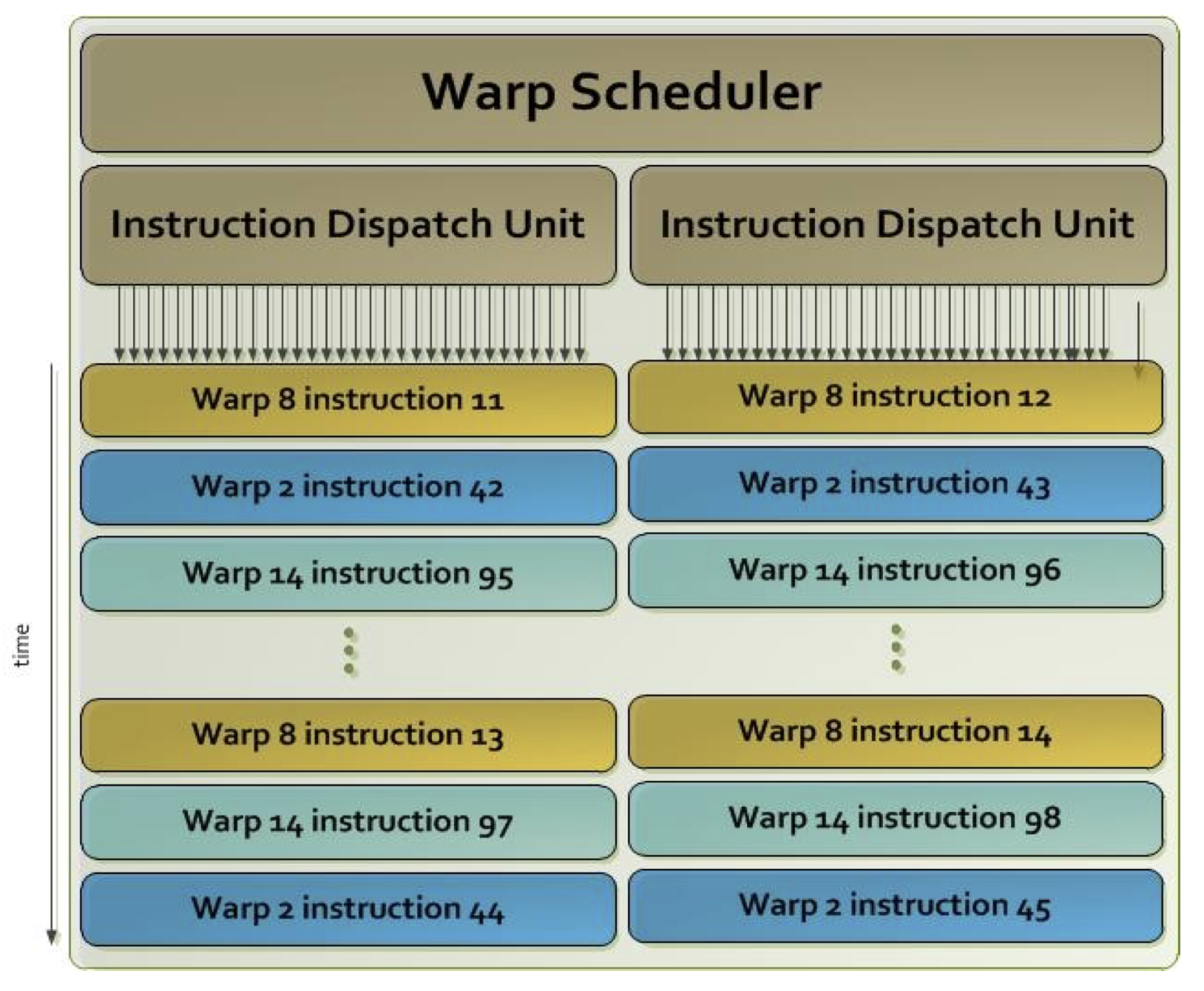

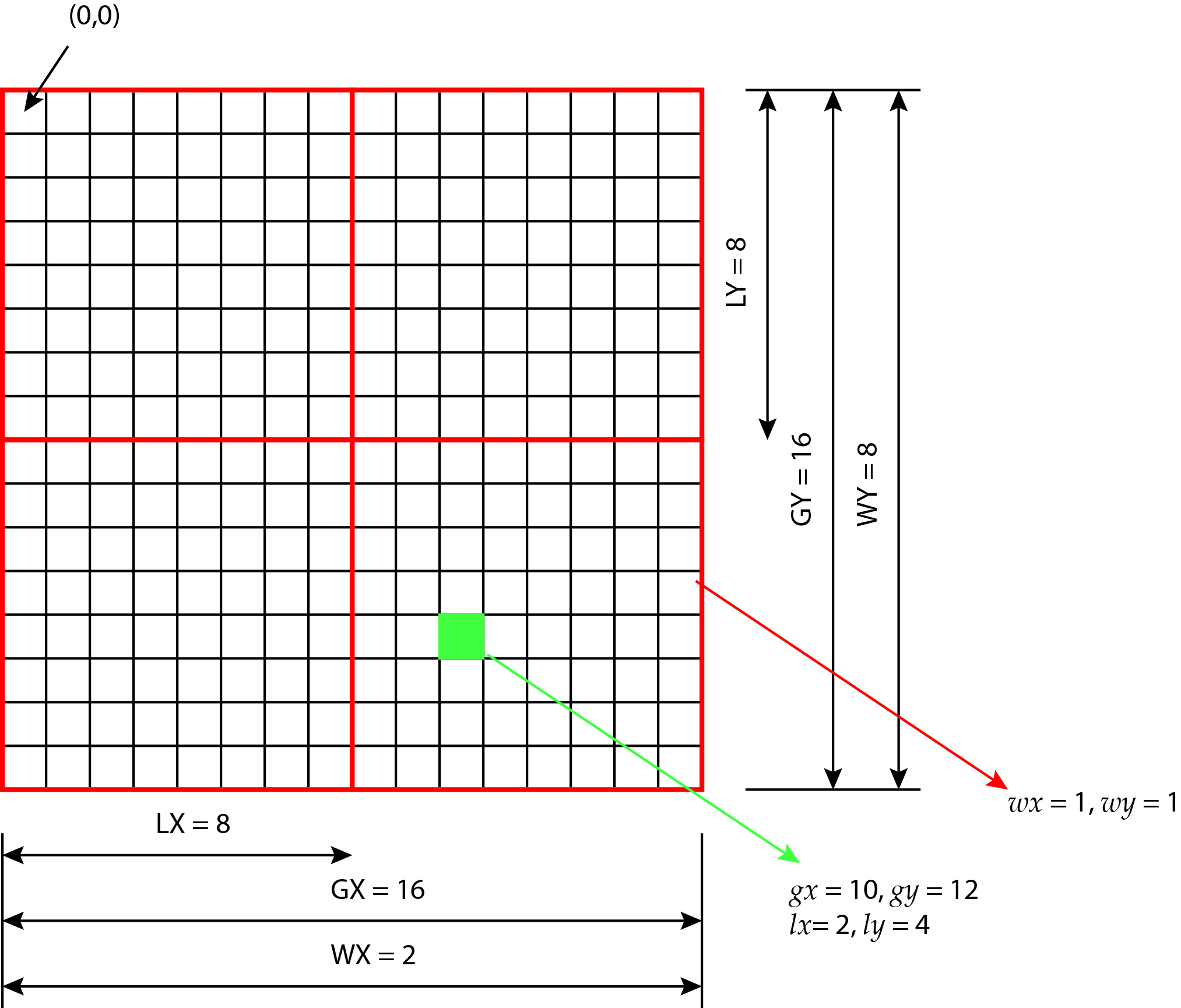

The vast majority of computers today follow the von Neumann architecture. In the processor, the control unit coordinates the operation of the system: it reads instructions and operands from memory and writes results back to memory. The processor executes instructions in the arithmetic logic unit (ALU), using registers (for example, to track program flow or to store intermediate results). Memory stores data, that is, instructions and operands, and input/output units transfer data between the processor and memory on one side and the outside world on the other.

Graphics processing units

Some nodes are additionally equipped with computing accelerators. Today, graphics processing units are mostly used as accelerators. The basic task of a graphics card is to relieve the processor when drawing graphics on the screen. To do so, it has to perform a multitude of independent calculations for millions of screen points. When a graphics card is not drawing on the screen, however, it can be used for other calculations.

We then talk about General-Purpose Graphics Processing Units (GPGPU). They excel whenever we have to perform many similar calculations on a large amount of data with few dependencies, for example in deep learning for artificial intelligence and in video processing. The architecture of graphics processing units is quite different from that of conventional processors. Therefore, to run programs efficiently on graphics processing units, existing programs usually need to be substantially reworked.

Computer cluster software consists of:

- operating system,

- middleware and

- user software (applications).

Operating system

An operating system is a bridge between user software and computer hardware. It is software that performs basic tasks such as memory management, processor management, device control, file system management, security functions, system operation control, resource consumption monitoring, and error detection. Popular operating systems include the free Linux and the commercial macOS and Windows. The CentOS Linux operating system is installed on the nodes of the NSC, Maister, and Trdina clusters.

Middleware

Middleware is software that connects the operating system and user applications. In a computer cluster, it takes care of the coordinated operation of a multitude of nodes, enables centralized node management, handles user authentication, controls the execution of jobs (user applications) on the nodes, and the like. Users of computer clusters mostly work with middleware for job management, and SLURM (Simple Linux Utility for Resource Management) is very widespread. The Slurm system manages the queue, allocates the required resources to jobs, and monitors their execution. With Slurm, users obtain access to resources (compute nodes) for a certain period of time, start jobs, and monitor their execution.

User software

User software is what we actually use computers for, both regular computers and computer clusters. With user software, users perform the functions they need. Only user software built for the Linux operating system can be used on the clusters. Some examples of user software used on clusters: Gromacs for molecular dynamics simulations, OpenFOAM for fluid flow simulations, Athena for analyzing collisions recorded by the ATLAS experiment at the LHC collider at CERN, and TensorFlow for training deep models in artificial intelligence. In the workshop, we will use the FFmpeg video processing tool.

User software can be installed on a cluster in a variety of ways:

- the administrator installs it directly on the nodes,

- the administrator prepares environment modules,

- the administrator prepares containers for general use,

- the user installs it in their own folder,

- the user prepares a container in their own folder.

To make system maintenance easier, administrators provide user software as environment modules or, preferably, as containers.

Environment modules

When logging in to the cluster, we find ourselves in a command line with the standard environment settings. This environment can be extended to make work easier, most simply with environment modules. Environment modules are a tool for changing command-line settings, and they allow users to easily change the environment while working.

Each environment module file contains the information needed to set up the command line for the selected software. When we load an environment module, the environment variables are adjusted to run the selected user software. One such variable is PATH, which lists the folders where the operating system searches for programs.

Environment modules are installed and updated by the cluster administrator. Providing software as environment modules simplifies maintenance and avoids installation problems caused by different versions of libraries and the like. Users can load and unload the prepared modules while working.
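The effect that loading a module has on PATH can be imitated by hand. The sketch below is not how the modules tool is implemented; it only mimics the PATH part of what `module load` and `module unload` do, and the folder /opt/apps/ffmpeg/bin is a hypothetical install location. On the cluster itself you would use the real commands, for example `module avail` to list the available modules and `module load <name>` to load one.

```shell
#!/bin/bash
# Sketch: imitate the PATH changes made by "module load" / "module unload".
# The folder below is a hypothetical install location, not a real module path.

# Put a folder at the front of PATH (skipped if it is already there).
prepend_path() {
  case ":$PATH:" in
    *":$1:"*) ;;                       # already on PATH, nothing to do
    *) PATH="$1${PATH:+:$PATH}" ;;     # the new folder is searched first
  esac
  export PATH
}

# Drop every occurrence of a folder from PATH.
remove_path() {
  local dir new=""
  local IFS=':'                        # split PATH at the colons
  for dir in $PATH; do
    [ "$dir" = "$1" ] && continue      # skip the folder being removed
    new="${new:+$new:}$dir"
  done
  PATH="$new"
  export PATH
}

app_dir="/opt/apps/ffmpeg/bin"         # hypothetical module folder
prepend_path "$app_dir"
echo "first folder searched: ${PATH%%:*}"
remove_path "$app_dir"
echo "first folder searched: ${PATH%%:*}"
```

Because the shell searches PATH from left to right, putting the module's folder first makes its programs take precedence over system programs with the same name.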

Virtualization and containers

As we have seen, nodes have a multitude of processor cores that can run many user applications simultaneously. When user applications are installed directly on the operating system, conflicts can arise, mostly due to incompatible library versions.

Node virtualization is an elegant solution that allows a wide variety of user applications to coexist and therefore makes system management easier. Virtualization slightly reduces the capacity of the system, but in return the system is more robust and easier to maintain. We distinguish between hardware virtualization and operating system virtualization. In the first case we talk about virtual machines, in the second about containers.

Container virtualization is better suited to supercomputer clusters. Containers do not include their own operating system kernel, so they are smaller and it is easier for the system to switch between them. The container runtime keeps the containers isolated from each other and gives each container access to the shared operating system kernel. Only the necessary user software and additional libraries are then installed separately in each container.

Computer cluster administrators want users to use containers as much as possible, because:

- we can prepare containers ourselves (we have the right to install software inside containers),

- containers offer many options for customizing the operating system, tools, and user software,

- containers ensure reproducible job execution (the same results even after the operating system is upgraded),

- containers make it easier for administrators to control resource consumption.

Docker containers are the most common. On the clusters mentioned, the Singularity container platform is installed, which is better adapted to work in a supercomputing environment. In a Singularity container, we work with the same user account as on the host operating system and have access to the network and to our data. Singularity can also run Docker containers and other container formats.
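As a rough illustration of how Singularity is used, the sketch below wraps `singularity exec IMAGE COMMAND`, which runs COMMAND inside IMAGE; the `docker://` prefix tells Singularity to fetch a Docker image. The image `docker://alpine:3.18` is only an example, and the helper name `run_in_container` is hypothetical. When Singularity is not installed (for example on a laptop), the helper only prints the command it would run.

```shell
#!/bin/bash
# Sketch: run a command inside a container image with Singularity if it is
# installed; otherwise just print the command that would be executed.
run_in_container() {
  local image="$1"; shift
  if command -v singularity >/dev/null 2>&1; then
    # Runs the command inside the image, under our own user account.
    singularity exec "$image" "$@"
  else
    echo "singularity exec $image $*"
  fi
}

# Example image chosen for illustration only.
run_in_container docker://alpine:3.18 cat /etc/os-release
```

On the cluster, such a command would normally be started on a compute node through Slurm rather than on the login node.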


Connecting to a cluster

Software tools

Interactive work on a remote computer

We connect to the login nodes with a client that supports the Secure Shell (SSH) protocol. The SSH protocol enables a secure remote connection from one computer to another: it offers several options for user authentication and ensures data integrity and strong encryption while we work. The SSH protocol is used both for interactive work on the login node and for data transfer to and from the cluster. On Linux, macOS, and Windows 10, we can establish a connection from the command line (Terminal, bash, PowerShell, cmd) by running the ssh program. In Windows 10 (April 2018 update and newer), the SSH client is enabled by default; in older versions it must be enabled first (instructions, howtogeek). For older versions of Windows, we need to install an SSH client separately; one of the most established is PuTTY.

Data transfer

Secure data transfer from our computer to a remote computer and back also takes place over the SSH protocol, for example with SFTP (SSH File Transfer Protocol, also called Secure File Transfer Protocol). Data can also be transferred with the scp (Secure CoPy) program, which is run from the command line. This program is installed together with the ssh program. For easier work, we can use programs with a graphical interface. FileZilla is available for all the operating systems mentioned, and CyberDuck is also very popular on macOS and Windows.

All-in-one tools

There are many combined tools for working on remote systems that support both interactive work and data transfer. On Windows, MobaXterm is well known; on Linux, we can use Snowflake; and on macOS, Termius (unfortunately, only as a paid product).

For software developers, we recommend the Visual Studio Code development environment with the Remote-SSH extension, which is available for all of these operating systems.

Text editors

We need a text editor to prepare jobs on the cluster. With data transfer programs and all-in-one tools, we can also edit files directly on the cluster. On Linux and macOS, we can edit simple text files with a default program such as Text Editor. Windows is a little more complicated, because it uses a slightly different format for text files: Linux and macOS end each line with the LF (Line Feed) character, while Windows ends each line with the CR (Carriage Return) and LF characters. On Windows, we prefer not to use Notepad to edit files for the cluster and install Notepad++ instead. Before saving a file to the cluster in Notepad++, change the format in the lower right corner from Windows (CR LF) to Unix (LF).
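If a script edited on Windows still has CR LF line endings, bash on the cluster treats the CR as part of each line, which typically causes confusing errors (for example `$'\r': command not found`). The sketch below, which can be run on the cluster or on any Linux machine, detects CR characters with grep and removes them with tr; the file names are examples only. Where the dos2unix tool happens to be installed, `dos2unix job.sh` performs the same conversion in place.

```shell
#!/bin/bash
# Sketch: find and remove Windows (CR LF) line endings in a job script.
tmp=$(mktemp -d)                                        # scratch folder for the demo
printf '#!/bin/bash\r\necho hello\r\n' > "$tmp/job.sh"  # simulate a file saved on Windows

# Search for a literal carriage return ($'\r' is bash syntax for the CR character).
if grep -q $'\r' "$tmp/job.sh"; then
  echo "job.sh has Windows (CR LF) line endings"
fi

# Delete every CR; the result uses Linux (LF) line endings only.
tr -d '\r' < "$tmp/job.sh" > "$tmp/job_lf.sh"
bash "$tmp/job_lf.sh"                                   # prints: hello
```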

Log in to a cluster

Open the command line. The simplest way is to press a special key on the keyboard (the Super key on Linux, Command and Space on macOS, or the Windows key on Windows), type “terminal”, and click on the suggested program. In the command line of the terminal (the window that opens), type:

```
ssh <name>@nsc-login.ijs.si
```

and start the program by pressing Enter. Instead of <name>, enter the name of your SLING SSO user account. If we are working with another login node, we replace the part after the @ sign accordingly.

At your first sign-in, you will receive this message:

```
The authenticity of host 'nsc-login.ijs.si (194.249.156.110)' can't be established.
ECDSA key fingerprint is SHA256:CuSOLdnvyAQpGxcKMQrgOQfwxSX9R1kcoqawszv5wtA.
Are you sure you want to continue connecting (yes/no)?
```

Type yes to add the login node with the displayed fingerprint to the list of known hosts on your PC:

```
Warning: Permanently added 'nsc-login.ijs.si,194.249.156.110' (ECDSA) to the list of known hosts.
```

After entering the password for the user account <name>, we find ourselves on the login node, where this command line is waiting for us:

```
[<name>@nsc-login ~]$
```

Note

We will mark the command line on the nodes of the computer cluster only with the $ sign. The $ character at the beginning of a line in these instructions marks the beginning of a command and should not be copied. To separate the command from the printout that follows it, a blank line is intentionally added after the command.

Enter hostname on the command line to run this program on the login node. It prints the name of the remote computer, which in our case is the name of the login node, nsc-login.ijs.si.

```
$ hostname

nsc-login.ijs.si
```

We ran our first program on a computer cluster, although not yet in quite the right way.

Warning

Administrators of computer clusters are not happy when we run programs directly on the login node, because this prevents other users of the system from working smoothly.

To log out of the login node, enter the exit command:

```
$ exit

logout
Connection to nsc-login.ijs.si closed.
```

Login without password

Hint

After the first login, it is advisable to set up logging in without a password (with SSH keys), for security reasons.

Transfer files to and from a cluster

FileZilla

Start FileZilla and enter the data in the input fields below the menu bar: Host: sftp://nsc-login.ijs.si, Username: <name>, Password: <password>, and press Quickconnect. When logging in, we confirm that we trust the server. After a successful login, the left part of the program shows the tree structure of the file system on the personal computer, and the right part shows the tree structure of the file system on the computer cluster.

CyberDuck

In the CyberDuck toolbar, press the Open Connection button. In the pop-up window, select the SFTP protocol in the upper drop-down menu and enter the following data: Host: nsc-login.ijs.si, Username: <name>, Password: <password>. Then press the Connect button. When logging in, we confirm that we trust the server. The tree structure of the file system on the computer cluster is displayed.

Clicking on folders (directories) lets us move easily through the file system. In both programs, the folder we are currently in is shown above the tree structure, for example /ceph/grid/home/<name>. Right-clicking opens a menu with commands for working with folders (add, rename, delete) and files (add, rename, edit, delete). In FileZilla, files are transferred between the left and right program windows, and in CyberDuck, between the program window and regular folders on our computer. Files are easily transferred by dragging and dropping them with the mouse.

Working with files directly on the cluster

You can also enter commands for working with files directly on the command line. Some important ones are:

- cd (change directory): move through the file system:

    - cd <folder>: move to the given folder,

    - cd ..: move back to the parent folder,

    - cd: move to the home folder of the user account,

- ls (list): list the contents of a folder,

- pwd (print working directory): print the name of the folder we are in,

- cp (copy): copy files,

- mv (move): move and rename files,

- cat <file>: display the contents of a file,

- nano <file>: edit a file,

- man <command>: show help for using a command.
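The short session below strings these commands together in a throw-away folder, so it can be tried safely on the login node or on any Linux or macOS machine. It also uses mkdir (make directory), which is not in the list, to create a folder, and a `>` redirection to create a small file; the folder and file names are arbitrary examples.

```shell
#!/bin/bash
# Sketch: practice the basic file commands in a temporary folder.
work=$(mktemp -d)                        # throw-away folder instead of your home folder
cd "$work" || exit 1
pwd                                      # print the folder we are in
mkdir videos                             # create a sub-folder
echo "first line" > notes.txt            # create a small text file
cp notes.txt videos/                     # copy the file into the sub-folder
mv videos/notes.txt videos/readme.txt    # rename (move) the copy
ls videos                                # -> readme.txt
cat videos/readme.txt                    # -> first line
cd videos && pwd                         # move into the sub-folder
cd ..                                    # back to the parent folder
```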

Hint

You can deepen your command-line skills on the Ryan's Tutorials website.
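Files can also be copied from the command line with scp, which was mentioned among the data transfer tools. A minimal sketch to run on your own computer: `name` is a placeholder for your SLING SSO user name, and the helper names `upload` and `download` are our own. The commented lines show a common way to set up the key-based login recommended earlier for logging in without a password, using OpenSSH's ssh-keygen and ssh-copy-id (ssh-copy-id is not included with every Windows installation).

```shell
#!/bin/bash
# Sketch: copy files between your computer and the NSC cluster with scp.
# CLUSTER_USER is a placeholder; set it to your SLING SSO user name.
CLUSTER_USER="${CLUSTER_USER:-name}"
LOGIN_NODE="nsc-login.ijs.si"

# One-time setup of key-based login (run by hand, not part of the helpers):
#   ssh-keygen -t ed25519                       # create a key pair in ~/.ssh
#   ssh-copy-id "$CLUSTER_USER@$LOGIN_NODE"     # install the public key on the cluster

# Copy local files (add -r for folders) into your home folder on the cluster;
# an empty path after ":" means the remote home folder.
upload() { scp "$@" "$CLUSTER_USER@$LOGIN_NODE:"; }

# Copy a file from the cluster (path relative to your home folder) to the current folder.
download() { scp "$CLUSTER_USER@$LOGIN_NODE:$1" .; }

# Usage examples:
#   upload video.mp4        # -> ~/video.mp4 on the cluster
#   upload -r frames        # copies the whole "frames" folder
#   download result.mp4     # -> ./result.mp4 on your computer
```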

Jobs and tasks in the Slurm system

Users of computer clusters mostly work with middleware for job management, most commonly SLURM (Simple Linux Utility for Resource Management). The Slurm system manages the queue, allocates the required resources to jobs, and monitors their execution. With Slurm, users obtain access to resources (compute nodes) for a certain period of time, start jobs on them, and monitor their execution.

Jobs

User programs are started on the compute nodes through the Slurm system. For this purpose, we prepare a job in which we specify:

- which programs and files we need to run,

- how to call the program,

- which computing resources the job needs,

- the time limit for the execution of the job, and the like.

Job life cycle

Once the job is ready, we submit it to the queue. The Slurm system then assigns it a JOBID and puts it in the pending state. Slurm selects queued jobs based on the available computing resources, the estimated execution time, and the set priority.

When the required resources become available, the job starts running. After execution finishes, the job passes through the completing state, while Slurm waits for the remaining nodes to finish, and then reaches the completed state. If necessary, the job can be suspended or cancelled. A job may also end in failure due to execution errors, or Slurm may terminate it when its time limit expires.
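In squeue printouts, these states appear as short codes in the ST column. The helper below (its name, `describe_state`, is our own) translates the most common codes into words; the codes themselves are standard Slurm ones. On the cluster, it could be fed with the state codes of our own jobs, obtained with squeue's `--noheader` and `--format=%t` switches, as in the commented line at the end.

```shell
#!/bin/bash
# Sketch: explain the compact job state codes shown in the ST column of squeue.
describe_state() {
  case "$1" in
    PD) echo "PENDING: waiting in the queue for resources or priority" ;;
    R)  echo "RUNNING: executing on the allocated nodes" ;;
    S)  echo "SUSPENDED: execution paused" ;;
    CG) echo "COMPLETING: finished, Slurm is still cleaning up on some nodes" ;;
    CD) echo "COMPLETED: finished with exit code 0" ;;
    CA) echo "CANCELLED: cancelled by the user or an administrator" ;;
    F)  echo "FAILED: ended with a non-zero exit code" ;;
    TO) echo "TIMEOUT: stopped by Slurm after reaching its time limit" ;;
    *)  echo "$1: other state, see the squeue documentation" ;;
  esac
}

# Walk through the usual life cycle of a successful job.
for code in PD R CG CD; do
  describe_state "$code"
done

# On the cluster: describe the state of each of our own jobs.
#   squeue --user "$USER" --noheader --format=%t | while read -r code; do describe_state "$code"; done
```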

Display cluster information

Slurm provides a series of commands for working with a cluster. In this section, we will look at some examples of using the sinfo, squeue, scontrol, and sacct commands, which display useful information about the cluster configuration and status. Detailed information on all commands supported by Slurm can be found on the Slurm project home page.

Sinfo command

The command displays information about the state of the cluster, its partitions (parts of the cluster) and nodes, and the available computing capacity. A number of switches let us specify more precisely which information about the cluster we want to print (documentation).

```
$ sinfo

PARTITION AVAIL  TIMELIMIT  NODES  STATE NODELIST
gridlong*    up 14-00:00:0      4 drain* nsc-gsv001,nsc-lou001,nsc-msv001,nsc-vfp002
gridlong*    up 14-00:00:0      3  down* nsc-fp003,nsc-gsv003,nsc-msv006
gridlong*    up 14-00:00:0      1  drain nsc-vfp001
gridlong*    up 14-00:00:0      3  alloc nsc-lou002,nsc-msv[003,018]
gridlong*    up 14-00:00:0      3   resv nsc-fp[005-006],nsc-msv002
gridlong*    up 14-00:00:0     24    mix nsc-fp[002,004,007-008],nsc-gsv[002,004-007],nsc-msv[004-005,007-017,019-020]
gridlong*    up 14-00:00:0      1   idle nsc-fp001
e7           up 14-00:00:0      2 drain* nsc-lou001,nsc-vfp002
e7           up 14-00:00:0      1  drain nsc-vfp001
e7           up 14-00:00:0      1  alloc nsc-lou002
```

Above we can see which logical partitions are available, their status, the time limit of jobs on each partition, and the lists of compute nodes that belong to them. The printout can be customized with the appropriate switches, depending on what we are interested in. For example, the --Node and --long switches print a detailed line for each node:

```
$ sinfo --Node --long

Tue Jan 05 11:06:02 2021
NODELIST    NODES PARTITION     STATE CPUS  S:C:T MEMORY TMP_DISK WEIGHT AVAIL_FE REASON
nsc-fp001       1 gridlong* allocated   16  2:8:1  64200        0   1000 intel,gp none
nsc-fp002       1 gridlong* allocated   16  2:8:1  64200        0   1000 intel,gp none
nsc-fp003       1 gridlong* allocated   16  2:8:1  64200        0   1000 intel,gp none
nsc-fp004       1 gridlong* allocated   16  2:8:1  64200        0   1000 intel,gp none
nsc-fp005       1 gridlong*  reserved   16  2:8:1  64200        0   1000 intel,gp none
nsc-fp006       1 gridlong*  reserved   16  2:8:1  64200        0   1000 intel,gp none
nsc-fp007       1 gridlong* allocated   16  2:8:1  64200        0   1000 intel,gp none
nsc-fp008       1 gridlong* allocated   16  2:8:1  64200        0   1000 intel,gp none
nsc-gsv001      1 gridlong*  reserved   64 4:16:1 515970        0      1 AMD,bigm none
nsc-gsv002      1 gridlong* allocated   64 4:16:1 515970        0      1 AMD,bigm none
```

The above printout tells us the following for each compute node in the cluster: which partition it belongs to (PARTITION), its state (STATE), the number of cores (CPUS), the number of processor sockets (S), the number of cores per socket (C), the number of threads per core (T), the amount of system memory (MEMORY), and any features (AVAIL_FEATURES) attributed to the node (e.g., processor type, presence of graphics processing units).

Parts of the cluster can be reserved in advance for various reasons (maintenance work, workshops, projects). Example of listing active reservations on the NSC cluster:

```
$ sinfo --reservation

RESV_NAME  STATE           START_TIME             END_TIME     DURATION  NODELIST
fri       ACTIVE  2020-10-13T13:57:32  2021-10-13T13:57:32 365-00:00:00  nsc-fp[005-006],nsc-gsv001,nsc-msv002
```

The above printout shows any active reservations on the cluster, the duration of each reservation, and the list of nodes that belong to it. Each reservation is assigned a group of users who can use it and thus avoid waiting for the jobs of users without a reservation to complete.
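When a partition has many nodes, it can help to total the NODES column per state. A minimal sketch using awk on a sinfo summary; the sample text is the sinfo printout from the NSC cluster shown earlier in this section, and `nodes_per_state` is a hypothetical helper name. In sinfo, an asterisk after a partition name marks the default partition, while an asterisk after a state means that the node is not responding, so the sketch strips the first but keeps the second.

```shell
#!/bin/bash
# Sketch: total the NODES column of a `sinfo` summary per STATE for one partition.
# Columns: PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
nodes_per_state() {
  awk -v part="$1" '
    NR > 1 {
      p = $1
      sub(/\*$/, "", p)               # "gridlong*" -> "gridlong" (default partition mark)
      if (p == part) total[$5] += $4  # $5 = STATE, $4 = NODES
    }
    END { for (s in total) print s, total[s] }
  ' | sort
}

# Sample input: the sinfo printout from the NSC cluster.
sample='PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
gridlong* up 14-00:00:0 4 drain* nsc-gsv001,nsc-lou001,nsc-msv001,nsc-vfp002
gridlong* up 14-00:00:0 3 down* nsc-fp003,nsc-gsv003,nsc-msv006
gridlong* up 14-00:00:0 1 drain nsc-vfp001
gridlong* up 14-00:00:0 3 alloc nsc-lou002,nsc-msv[003,018]
gridlong* up 14-00:00:0 3 resv nsc-fp[005-006],nsc-msv002
gridlong* up 14-00:00:0 24 mix nsc-fp[002,004,007-008],nsc-gsv[002,004-007],nsc-msv[004-005,007-017,019-020]
gridlong* up 14-00:00:0 1 idle nsc-fp001
e7 up 14-00:00:0 2 drain* nsc-lou001,nsc-vfp002
e7 up 14-00:00:0 1 drain nsc-vfp001
e7 up 14-00:00:0 1 alloc nsc-lou002'

printf '%s\n' "$sample" | nodes_per_state gridlong
# On the cluster: sinfo | nodes_per_state gridlong
```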

Squeue command

In addition to the cluster configuration, we are of course also interested in the state of the job scheduling queue. With the squeue command, we can query jobs that are currently waiting in the queue, running, or have recently completed successfully or unsuccessfully (documentation). To print the current state of the queue, type:

```
$ squeue

 JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
387388  gridlong mc15_14T prdatlas PD       0:00      1 (Priority)
387372  gridlong mc15_14T prdatlas PD       0:00      1 (Resources)
387437  gridlong mc15_14T prdatlas PD       0:00      1 (Priority)
387436  gridlong mc15_14T prdatlas PD       0:00      1 (Priority)
385913  gridlong mc15_14T prdatlas  R   15:57:58      1 nsc-msv004
385949  gridlong mc15_14T prdatlas  R   13:47:49      1 nsc-msv017
```

From the printout, we can find out the identifier of each job, the partition on which it runs, the name of the job, the user who started it, and the current state of the job (ST). The printout also shows how long the job has been running (TIME) and the list of nodes on which it runs, or the reason why it has not started yet (NODELIST(REASON)). Usually, we are most interested in the state of the jobs that we have started ourselves. The printout can be limited to the jobs of a selected user with the --user switch. Example of listing jobs owned by user gen012:

```
$ squeue --user gen012

 JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
381650  gridlong pmfuzzy_   gen012  R 7-03:13:06      1 nsc-msv020
381649  gridlong pmfuzzy_   gen012  R 7-03:15:06      1 nsc-msv018
381646  gridlong pmfuzzy_   gen012  R 7-03:18:28      1 nsc-msv008
381643  gridlong pmautocc   gen012  R 7-03:25:38      1 nsc-msv017
381641  gridlong pmautocc   gen012  R 7-03:28:26      1 nsc-msv007
381639  gridlong pmautocc   gen012  R 7-03:32:40      1 nsc-msv004
```

Some important job states:

- PD (PenDing): job waiting in the queue,

- R (Running): job is running,

- CD (CompleteD): job is completed,

- F (Failed): execution error.

In addition, we can limit the printout to jobs in a certain state with the --states switch. Example of a printout of all jobs currently pending execution (PD):

```
$ squeue --states=PD

 JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
387438  gridlong mc15_14T prdatlas PD       0:00      1 (Priority)
387437  gridlong mc15_14T prdatlas PD       0:00      1 (Priority)
387436  gridlong mc15_14T prdatlas PD       0:00      1 (Priority)
387435  gridlong mc15_14T prdatlas PD       0:00      1 (Priority)
387434  gridlong mc15_14T prdatlas PD       0:00      1 (Resources)
```

Scontrol command

Sometimes we want even more detailed information about a partition, node, or job. We get them with the scontrol command (documentation). Below are some examples of how to use this command.
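Because scontrol prints its information as KEY=VALUE pairs, a single value is easy to extract in a script. A minimal sketch: the helper name `node_field` is our own, and the `--oneliner` switch asks scontrol to print each record on a single line. Values that themselves contain spaces, such as OS, are cut at the first space by this simple approach.

```shell
#!/bin/bash
# Sketch: print one KEY=VALUE field of `scontrol show node NODE`.
# Usage: node_field NODE KEY     e.g. node_field nsc-fp005 Gres
node_field() {
  scontrol --oneliner show node "$1" |
    tr ' ' '\n' |            # one KEY=VALUE pair per line
    grep "^$2=" |            # keep only the requested key
    cut -d= -f2-             # drop "KEY=", keep the value (which may contain "=")
}

# On the cluster, e.g.: how many GPUs and how much memory does nsc-fp005 have?
if command -v scontrol >/dev/null 2>&1; then
  node_field nsc-fp005 Gres
  node_field nsc-fp005 RealMemory
fi
```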

Example of printing more detailed information about an individual partition:

```
$ scontrol show partition

PartitionName=gridlong
   AllowGroups=ALL AllowAccounts=ALL AllowQos=ALL
   AllocNodes=ALL Default=YES QoS=N/A
   DefaultTime=00:30:00 DisableRootJobs=NO ExclusiveUser=NO GraceTime=0 Hidden=NO
   MaxNodes=UNLIMITED MaxTime=14-00:00:00 MinNodes=0 LLN=NO MaxCPUsPerNode=UNLIMITED
   Nodes=nsc-msv0[01-20],nsc-gsv0[01-07],nsc-fp0[01-08]
   PriorityJobFactor=1 PriorityTier=1 RootOnly=NO ReqResv=NO OverSubscribe=NO
   OverTimeLimit=NONE PreemptMode=OFF
   State=UP TotalCPUs=1856 TotalNodes=35 SelectTypeParameters=NONE
   JobDefaults=(null)
   DefMemPerCPU=2000 MaxMemPerNode=UNLIMITED
   TRESBillingWeights=CPU=1.0,Mem=0.25G
```

Example of more detailed information about the nsc-fp005 compute node:

```
$ scontrol show node nsc-fp005

NodeName=nsc-fp005 Arch=x86_64 CoresPerSocket=8
   CPUAlloc=0 CPUTot=16 CPULoad=0.00
   AvailableFeatures=intel,gpu,k40
   ActiveFeatures=intel,gpu,k40
   Gres=gpu:2
   NodeAddr=nsc-fp005 NodeHostName=nsc-fp005 Version=20.02.5
   OS=Linux 5.7.12-1.el8.elrepo.x86_64 #1 SMP Fri Jul 31 16:22:54 EDT 2020
   RealMemory=64200 AllocMem=0 FreeMem=56851 Sockets=2 Boards=1
   State=RESERVED ThreadsPerCore=1 TmpDisk=0 Weight=1000 Owner=N/A MCS_label=N/A
   Partitions=gridlong
   BootTime=2020-11-09T12:54:17 SlurmdStartTime=2020-12-01T10:36:37
   CfgTRES=cpu=16,mem=64200M,billing=16,gres/gpu=2
   AllocTRES=
   CapWatts=n/a
   CurrentWatts=0 AveWatts=0
   ExtSensorsJoules=n/s ExtSensorsWatts=0 ExtSensorsTemp=n/s
```

Sacct command

With the sacct command, we can find out more about completed jobs and jobs in progress. For example, for a selected user we can check the status of all jobs over a period of time:

```
$ sacct --starttime '2021-01-10' --endtime '2021-01-13' --user <name>

391812           ffmpeg   gridlong  fri-users          1 CANCELLED+      0:0
391812.exte+     extern             fri-users          1  COMPLETED      0:0
391812.0         ffmpeg             fri-users          1     FAILED    127:0
391813           ffmpeg   gridlong  fri-users          1  COMPLETED      0:0
391813.exte+     extern             fri-users          1  COMPLETED      0:0
391813.0         ffmpeg             fri-users          1  COMPLETED      0:0
391825           ffmpeg   gridlong  fri-users          1  COMPLETED      0:0
391825.exte+     extern             fri-users          1  COMPLETED      0:0
391825.0         ffmpeg             fri-users          1  COMPLETED      0:0
```
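In the sacct printout, step 391812.0 ended in the FAILED state with exit code 127:0; the number before the colon is the exit code of the program (127 is what a shell returns when a command cannot be found), and the number after it is the signal that ended it. A minimal sketch that lists only failed jobs and steps for a date range: `--parsable2` separates the fields with `|` so they can be split reliably, and `--format` chooses the columns. The helper name `failed_steps` is hypothetical.

```shell
#!/bin/bash
# Sketch: list failed jobs/steps of one user between two dates with sacct.
# Usage: failed_steps START END [USER]    e.g. failed_steps 2021-01-10 2021-01-13
failed_steps() {
  sacct --user "${3:-${USER:-$(id -un)}}" --starttime "$1" --endtime "$2" \
        --format=JobID,JobName,State,ExitCode --parsable2 --noheader |
    awk -F'|' '$3 == "FAILED" { print $1, $4 }'   # print JobID and ExitCode
}

if command -v sacct >/dev/null 2>&1; then
  failed_steps 2021-01-10 2021-01-13
fi
```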


Starting jobs on a cluster

In this chapter, we will look at the srun, sbatch, and salloc commands for starting jobs and the scancel command for cancelling a job.

Srun command

The simplest way is with the srun command. The command is followed by various switches with which we specify the quantity and type of computing resources our job needs, along with various other settings. A detailed explanation of all available options is given in the documentation. We will look at some of the most basic and most commonly used ones.

To begin with, we will run the simple system program hostname as our job; it prints the name of the node on which it runs. Example of starting the hostname program on one of the compute nodes:

```
$ srun --ntasks=1 hostname

nsc-msv002.ijs.si
```

We used the --ntasks=1 switch on the command line. With it, we say that our job consists of a single task: we want a single instance of the hostname program to run. Slurm automatically assigns us one of the processor cores in the cluster and runs the job on it.

In the next step, we can try to run several tasks within our job:

```
$ srun --ntasks=4 hostname

nsc-msv002.ijs.si
nsc-msv002.ijs.si
nsc-msv002.ijs.si
nsc-msv002.ijs.si
```

We immediately notice the difference in the printout. Now four identical tasks have run within our job. They ran on four different processor cores of the same compute node (nsc-msv002.ijs.si).

Of course, our tasks can also be distributed across several nodes.

Our job can always be terminated by pressing Ctrl+C during execution.

Sbatch command

The downside of the srun command is that it blocks our command line until the job is completed. In addition, it is awkward to run more complex jobs with many settings this way. In such cases, we prefer the sbatch command and write the job settings and the individual tasks of our job to a bash script file.

```
#!/bin/bash
#SBATCH --job-name=my_job_name
#SBATCH --partition=gridlong
#SBATCH --ntasks=4
#SBATCH --nodes=1
#SBATCH --mem-per-cpu=100MB
#SBATCH --output=my_job.out
#SBATCH --time=00:01:00

srun hostname
```

The box above shows an example of such a script. The first line, #!/bin/bash, tells the system that the file is a bash script. It is followed by the settings of our job, one per line, each with the prefix #SBATCH. We have already seen how to set the reservation, the number of tasks and the number of nodes for our job (--reservation, --ntasks and --nodes) with the srun command.

Let's explain the other settings:

- --job-name=my_job_name: the name of the job, displayed when we query jobs with the squeue command,

- --partition=gridlong: the partition in which we want to run our job (there is only one partition on the NSC cluster, so this setting can also be omitted),

- --mem-per-cpu=100MB: the amount of system memory our job requires per allocated processor core (here, one core per task),

- --output=my_job.out: the name of the file to which everything our job would print to standard output (the screen) is written,

- --time=00:01:00: the time limit of our job in hours:minutes:seconds format.

The settings are followed by the commands of our job, which are the same as in the previous examples (srun hostname).

Save the job script to a file, such as job.sh, and submit it:

```
$ sbatch ./job.sh
Submitted batch job 387508
```

The command printed our job identifier and immediately returned control of the command line. When the job is completed (we can check this with the squeue command), the file my_job.out will be created in the current folder, containing the output of the job:

```
$ cat ./my_job.out
nsc-msv002.ijs.si
nsc-msv002.ijs.si
nsc-msv002.ijs.si
nsc-msv002.ijs.si
```

Scancel command

Jobs started with the sbatch command can be terminated during execution with the scancel command. We only need to specify the appropriate job identifier (JOBID):

```
$ scancel 387508
```

Salloc command

The third way to start a job is with the salloc command. With it, we reserve in advance the computing resources we will need for our tasks, and then run jobs directly from the command line with the srun command. The advantage of salloc is that we do not have to wait for free resources every time we start a job with srun. The salloc command accepts the same configuration switches as the srun and sbatch commands, and once resources are reserved with salloc, we do not need to repeat all the requirements for each srun command. Example of running two instances of hostname on one node:

```
$ salloc --time=00:01:00 --ntasks=2
salloc: Granted job allocation 389035
salloc: Waiting for resource configuration
salloc: Nodes nsc-msv002 are ready for job
$ srun hostname
nsc-msv002.ijs.si
nsc-msv002.ijs.si
$ exit
```

Within an allocation obtained with salloc, srun works similarly to srun in an sbatch script: it runs our tasks on the resources we have already acquired. The acquired resources are released by running exit at the command line once we are done.

The salloc command offers another interesting way of using compute nodes: we can obtain resources on a compute node, connect to it via the SSH protocol, and then execute commands directly on the node:

```
$ salloc --time=00:05:00 --ntasks=1
salloc: Granted job allocation 389039
salloc: Waiting for resource configuration
salloc: Nodes nsc-fp005 are ready for job
$ ssh nsc-fp005
$ hostname
nsc-fp005.ijs.si
$ exit
$ exit
```

On the nsc-fp005 node, we run the hostname program, which displays the node name. After completing the work on the compute node, we return to the login node with the exit command. There we run exit again to release the resources we acquired with the salloc command.
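It is easy to forget the final exit and keep resources reserved. Below is a minimal sketch of a reminder; the function names in_allocation and allocation_status are our own, and it assumes that the shell started by salloc has the SLURM_JOB_ID variable set (and usually SLURM_JOB_NODELIST). A separate SSH session to the node may not have these variables, so the check is meant for the shell that salloc started.

```sh
# in_allocation: exit status 0 if this shell runs inside a Slurm allocation.
# Assumption: salloc exports SLURM_JOB_ID to the shell it starts.
in_allocation() {
    [ -n "${SLURM_JOB_ID:-}" ]
}

# allocation_status: print a short reminder about the current allocation.
allocation_status() {
    if in_allocation; then
        echo "inside allocation $SLURM_JOB_ID on ${SLURM_JOB_NODELIST:-unknown nodes}; run exit to release it"
    else
        echo "no Slurm allocation in this shell"
    fi
}
```

Calling allocation_status before logging out of the login node shows whether an exit is still needed.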

Modules and containers

Ordinary users (i.e. non-administrators) cannot install programs on the system; installation must be arranged with the cluster administrator. You can always compile the necessary software yourself and install it in your home directory, but this is rather time-consuming and tedious. In this section, we look at two approaches that make it easier to use the software packages we often need in supercomputing.

Environment modules

The first approach is environment modules, which contain selected user software. Modules are usually prepared and installed by an administrator, who also adds them to the module catalog. The user can then load or unload modules with the module load and module unload commands. Different modules can also contain versions of the same program, for example with and without support for graphics accelerators. A list of all modules is obtained with the commands module avail and module spider.

We will need the FFmpeg module in the workshop. It is already installed on the NSC cluster; we just need to load it:

```
$ module load FFmpeg
```

Use the module list command to see which modules have been loaded:

```
$ module list

Currently Loaded Modules:
  1) GCCcore/9.3.0
  2) NASM/2.14.02-GCCcore-9.3.0
  3) zlib/1.2.11-GCCcore-9.3.0
  4) bzip2/1.0.8-GCCcore-9.3.0
  5) x264/20191217-GCCcore-9.3.0
  6) ncurses/6.2-GCCcore-9.3.0
  7) LAME/3.100-GCCcore-9.3.0
  8) x265/3.3-GCCcore-9.3.0
  9) expat/2.2.9-GCCcore-9.3.0
 10) libpng/1.6.37-GCCcore-9.3.0
 11) freetype/2.10.1-GCCcore-9.3.0
 12) util-linux/2.35-GCCcore-9.3.0
 13) fontconfig/2.13.92-GCCcore-9.3.0
 14) xorg-macros/1.19.2-GCCcore-9.3.0
 15) libpciaccess/0.16-GCCcore-9.3.0
 16) X11/20200222-GCCcore-9.3.0
 17) FriBidi/1.0.9-GCCcore-9.3.0
 18) FFmpeg/4.2.2-GCCcore-9.3.0
```

Containers

The disadvantage of modules is that they must be prepared and installed by an administrator. If this is not possible, we can choose another approach and package the program we need in a Singularity container. Such a container contains our program and all other programs and libraries it needs to function. You can create it on any computer and then copy it to the cluster.

When we have the appropriate container ready, we use it by writing singularity exec <container> before the desired command. The FFmpeg container (ffmpeg_alpine.sif file) is available here. Transfer it to the cluster and run:

```
$ singularity exec ffmpeg_alpine.sif ffmpeg -version
```

A printout with information about the ffmpeg version is displayed. The singularity program starts the ffmpeg_alpine.sif container and then runs the ffmpeg program inside it.

You can also build the ffmpeg_alpine.sif container yourself. A web search for the keywords ffmpeg, container and docker will probably lead to https://hub.docker.com/r/jrottenberg/ffmpeg/, which offers many different containers for ffmpeg. We choose the current version of the smallest one, based on Alpine Linux, and build it directly on the login node. More detailed instructions for preparing containers can be found at https://sylabs.io/guides/3.0/user-guide/.

Quite a few frequently used containers are available on the clusters to all users:

- on the NSC cluster, they are in the /ceph/grid/singularity-images folder,

- on the Maister and Trdina clusters, in the /ceph/sys/singularity folder.
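Since this workshop uses ffmpeg both from the FFmpeg module and from a container, a small wrapper can hide the difference in job scripts. This is a sketch under our own assumptions: the variable FFMPEG_SIF and the function ffmpeg_run are hypothetical names, the container is used only if the image file exists at the given path, and otherwise the ffmpeg found on PATH (for example after module load FFmpeg) is called.

```sh
# ffmpeg_run: run ffmpeg from a Singularity image if one is available,
# otherwise the ffmpeg found on PATH (e.g. after "module load FFmpeg").
# FFMPEG_SIF and ffmpeg_run are hypothetical names chosen for this sketch.
ffmpeg_run() {
    local sif
    sif=${FFMPEG_SIF:-ffmpeg_alpine.sif}
    if [ -f "$sif" ]; then
        singularity exec "$sif" ffmpeg "$@"
    else
        ffmpeg "$@"
    fi
}

# Usage inside a job script (srun cannot call a shell function directly):
#   FFMPEG_SIF=ffmpeg_alpine.sif
#   ffmpeg_run -y -i llama.mp4 llama.avi
```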


Basic video processing

We will use the free FFmpeg software package to work with videos. FFmpeg supports most video and audio formats, and it contains a wide range of filters for video processing. The package is available for most Linux distributions, as well as for macOS and Windows.

On the cluster, we will run FFmpeg from the command line. To make it easier to set the switches, we can use a web interface such as FFmpeg Commander, or a desktop program such as WinFF on Linux and Windows.

Format conversion

First we load the appropriate module that will allow us to use the ffmpeg program on the cluster:

```
$ module load FFmpeg
```

Check if the module is working properly:

```
$ ffmpeg -version
ffmpeg version 4.2.2 Copyright (c) 2000-2019 the FFmpeg developers
built with gcc 9.3.0 (GCC)
configuration: --prefix=/ceph/grid/software/modules/software/FFmpeg/4.2.2-GCCcore-9.3.0 --enable-pic --enable-shared --enable-gpl --enable-version3 --enable-nonfree --cc=gcc --cxx=g++ --enable-libx264 --enable-libx265 --enable-libmp3lame --enable-libfreetype --enable-fontconfig --enable-libfribidi
libavutil      56. 31.100 / 56. 31.100
libavcodec     58. 54.100 / 58. 54.100
libavformat    58. 29.100 / 58. 29.100
…
```

Let's now try to use the ffmpeg program to process a video. First, download the video llama.mp4¹ from the link and save it to the cluster.

The simplest ffmpeg command converts a clip from one format to another (without additional switches, ffmpeg selects suitable encoding settings based on the extensions of the specified files). We need to make sure that the conversion runs on one of the compute nodes, so we use srun:

```
$ srun --ntasks=1 ffmpeg -y -i llama.mp4 llama.avi
```

The -y switch makes ffmpeg overwrite existing files without prompting. The -i switch specifies the input file llama.mp4, and the last argument is the output file llama.avi, which ffmpeg should create.

Reducing the resolution

Due to the abundance of options, ffmpeg switch combinations can become quite complex. We find many examples online that we can use as a starting point when writing our commands.

For example, to reduce the resolution of the video, we can use the scale filter. To halve the resolution in both directions, write:

```
$ srun --ntasks=1 ffmpeg \
    -y -i llama.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 llama-small.mp4
```

This command creates a new video, llama-small.mp4, from the input video llama.mp4, with half the width and half the height. The \ character at the end of the first line indicates that the command continues on the next line.

The new arguments in the command are:

- -codec:a copy: copy the audio track unchanged to the output file, and

- -filter:v scale=w=iw/2:h=ih/2: apply the video filter scale, setting the output width w to half the input width iw, and likewise the output height h to half the input height ih.

To use another filter, we replace the argument scale=w=iw/2:h=ih/2 with the appropriate string. A few examples:

- hflip and vflip: mirror the image horizontally or vertically,

- edgedetect: detect edges in the image,

- crop=480:270:240:135: cut out a 480 × 270 region whose top-left corner is at the point (240, 135),

- drawtext=text=%{pts}:x=w/2-tw/2:y=h-2*lh:fontcolor=green: write the timestamp (pts) of each frame on the image in green, centred horizontally near the bottom edge.

Filters can be combined simply by separating them with a comma, for example scale=w=iw/2:h=ih/2,edgedetect.
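When several filters are combined, a small helper can assemble the comma-separated chain and keep long commands readable. A minimal sketch; the function name filter_chain is our own, and it does not escape filters whose own options contain commas, which ffmpeg would otherwise treat as separators between filters.

```sh
# filter_chain: join ffmpeg video filters into one comma-separated chain,
# e.g. filter_chain scale=w=iw/2:h=ih/2 edgedetect
#   -> scale=w=iw/2:h=ih/2,edgedetect
# Filters whose options contain commas would need escaping (not handled here).
filter_chain() {
    local chain f
    chain=""
    for f in "$@"; do
        chain=${chain:+$chain,}$f
    done
    printf '%s\n' "$chain"
}

# Usage (quote the result so the shell leaves characters such as * alone;
# llama-edges.mp4 is just an example output name):
#   srun --ntasks=1 ffmpeg -y -i llama.mp4 -codec:a copy \
#        -filter:v "$(filter_chain scale=w=iw/2:h=ih/2 edgedetect)" llama-edges.mp4
```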

Parallel video processing

Many problems can be broken down into independent subproblems that can be processed in parallel. Such problems are commonly called embarrassingly parallel.

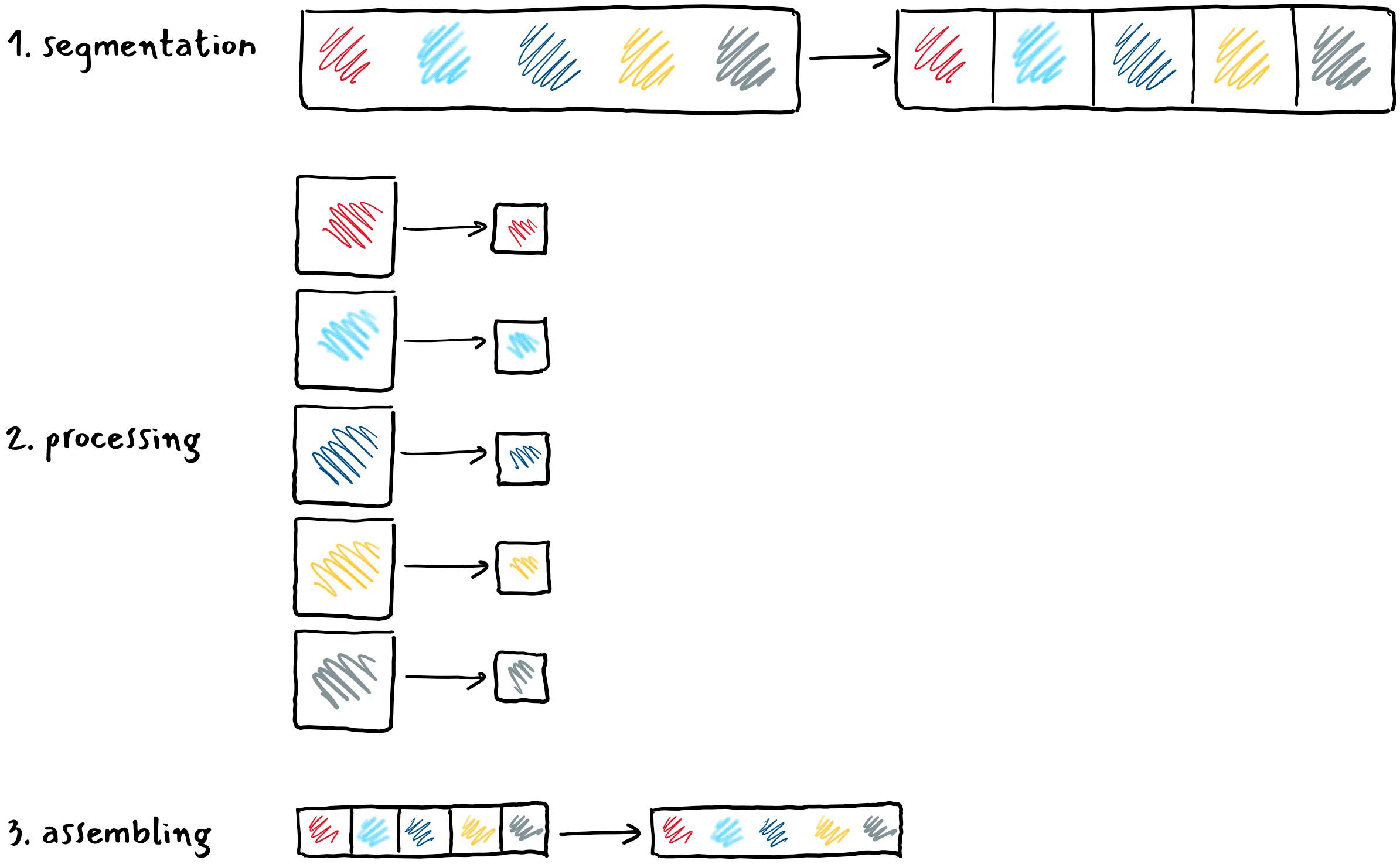

One such problem is video processing. Here's how to speed up video processing:

- first, split the video into many pieces on one core,

- then process each piece separately on its own core, and

- finally, assemble the processed pieces back into a whole.

The advantage of this approach is that the individual pieces are processed in parallel, each on its own core. If we divide the video into N equal pieces in the first step, the processing time in the second step is approximately 1/N of the time required to process the entire video on one core. Even when we add the time for cutting and assembling, which is not needed when processing on one core, we can still gain a lot overall.
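The estimate in the previous paragraph can be written as T_split + T/N + T_merge, where T is the processing time on one core. The sketch below evaluates it; the function names and the numbers in the usage note are made up for illustration, not measurements from the cluster, and it assumes N equal pieces with N free cores.

```sh
# estimate_parallel: rough wall-clock time (seconds) of split-process-merge:
#   T_split + T_one_core / N + T_merge
# Arguments: T_one_core N T_split T_merge.
estimate_parallel() {
    awk -v t="$1" -v n="$2" -v s="$3" -v m="$4" \
        'BEGIN { printf "%.1f\n", s + t / n + m }'
}

# speedup: ratio of the one-core time to the parallel estimate.
speedup() {
    awk -v t="$1" -v p="$2" 'BEGIN { printf "%.1f\n", t / p }'
}
```

For example, with a hypothetical 600 s on one core, 5 pieces and 10 s each for cutting and assembling, estimate_parallel 600 5 10 10 gives 140.0 seconds, and speedup 600 140 gives 4.3, somewhat below the ideal factor of 5 because of the extra steps.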


First, download the video bbb.mp4¹ to the cluster.

Step 1: Segmentation

We want to split the video bbb.mp4 into smaller pieces, which we will then process in parallel. First we load the appropriate module, if we haven't already:

```
$ module load FFmpeg
```

We then divide the video into pieces that are 130 seconds long with the following command:

```
$ srun --ntasks=1 ffmpeg \
    -y -i bbb.mp4 -codec copy -f segment -segment_time 130 \
    -segment_list parts.txt part-%d.mp4
```

where:

- -codec copy copies the audio and video unchanged to the output files,

- -f segment selects the segment output format, which cuts the input file into pieces,

- -segment_time 130 specifies the desired duration of each piece in seconds,

- -segment_list parts.txt saves the list of created pieces to the file parts.txt,

- part-%d.mp4 specifies the names of the output files, where %d is a placeholder that ffmpeg replaces with the sequence number of each piece.
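To check the result, the expected number of pieces is the video length divided by the segment length, rounded up. A sketch with a helper of our own, expected_segments; the usage assumes the duration is read with ffprobe, which normally ships together with ffmpeg. Because -codec copy can only cut at keyframes, the real pieces may be slightly longer than requested and the count can differ.

```sh
# expected_segments: ceil(duration / segment_time), both in seconds.
# A rough check only: with -codec copy the cuts fall on keyframes,
# so the real number of pieces may differ slightly.
expected_segments() {
    awk -v d="$1" -v t="$2" \
        'BEGIN { n = int(d / t); if (n * t < d) n++; print n }'
}

# Usage (ffprobe prints the duration in seconds):
#   d=$(ffprobe -v error -show_entries format=duration \
#               -of default=noprint_wrappers=1:nokey=1 bbb.mp4)
#   expected_segments "$d" 130
```

For a clip of about 10.5 minutes (630 s), expected_segments 630 130 gives 5, which matches the five pieces part-0.mp4 to part-4.mp4.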

When the process is complete, the working directory contains the original video, the list of pieces parts.txt, and the pieces part-0.mp4 to part-4.mp4:

```
$ ls
bbb.mp4  part-0.mp4  part-1.mp4  part-2.mp4  part-3.mp4  part-4.mp4  parts.txt
```

Step 2: Processing

We process each piece in the same way. For example, to halve the dimensions of the piece part-0.mp4 and save the result to the file out-part-0.mp4, we use the command from the previous section:

```
$ srun --ntasks=1 ffmpeg \
    -y -i part-0.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-0.mp4
```

As always, srun requests resources and runs our task on the assigned compute node. In this way, we could process all the pieces one by one, but with a lot of manual work. So let's take a look at a simple sbatch script that does this for us:

```
#!/bin/sh
#SBATCH --job-name=ffmpeg1 # job name
#SBATCH --output=ffmpeg1.txt # execution log file
#SBATCH --time=00:10:00 # time limit for the job (hours:minutes:seconds)
#SBATCH --reservation=fri # reservation, if any; otherwise delete this line

srun ffmpeg -y -i part-0.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-0.mp4
srun ffmpeg -y -i part-1.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-1.mp4
srun ffmpeg -y -i part-2.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-2.mp4
srun ffmpeg -y -i part-3.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-3.mp4
srun ffmpeg -y -i part-4.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-4.mp4
```

Save the script to the file ffmpeg1.sh and run it with the command:

```
$ sbatch ./ffmpeg1.sh
Submitted batch job 389552
```

So far, we haven't sped up the execution, as the script waits for each srun call to finish before running the next one. If we want to send all the pieces for processing at the same time, we add an & character at the end of each srun line, which runs the command in the background. The script then does not wait for the command to complete, but immediately continues with the next command. At the end of the script, we must therefore add the wait command, which waits for all the tasks we ran in the background to complete. This ensures that the script does not finish until all the pieces of video have been fully processed.

Each srun call represents one task in our job. Therefore, in the header of the script, we specify how many tasks may run at a time. The setting --ntasks=5 means that Slurm will run at most five tasks at a time, even if the script starts more. Be careful to add the argument --ntasks=1 to each srun call; without it, each srun would start five tasks, so every piece would be processed five times, which is not very useful.

```
#!/bin/sh
#SBATCH --job-name=ffmpeg2
#SBATCH --output=ffmpeg2.txt
#SBATCH --time=00:10:00
#SBATCH --ntasks=5 # the number of tasks in the job that run simultaneously

srun --ntasks=1 ffmpeg -y -i part-0.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-0.mp4 &
srun --ntasks=1 ffmpeg -y -i part-1.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-1.mp4 &
srun --ntasks=1 ffmpeg -y -i part-2.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-2.mp4 &
srun --ntasks=1 ffmpeg -y -i part-3.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-3.mp4 &
srun --ntasks=1 ffmpeg -y -i part-4.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out-part-4.mp4 &
wait
```

Array jobs

The above method works, but is quite inconvenient. If we change the number of pieces, we need to add or correct lines in the script, which can easily go wrong. We also see that the individual steps differ from each other only in the number in the file names. Fortunately, Slurm has a solution for exactly such situations: array jobs. Let's look at how to process the above example:

```
#!/bin/sh
#SBATCH --job-name=ffmpeg3
#SBATCH --time=00:10:00
#SBATCH --output=ffmpeg3-%a.txt # %a is the placeholder for the task index
#SBATCH --array=0-4 # range of index values

srun ffmpeg \
    -y -i part-$SLURM_ARRAY_TASK_ID.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 \
    out-part-$SLURM_ARRAY_TASK_ID.mp4
```

We've added the switch --array=0-4, which tells Slurm to run the commands in the script for each of the numbers 0 through 4. Slurm will run as many tasks as there are numbers in the range specified by the --array switch, in our case 5. If we want to limit the number of tasks running simultaneously, for example to 3, we write --array=0-4%3.
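The range in --array has to match the number of pieces. A sketch that derives it from parts.txt (one piece per line); the function name array_spec is our own. Because #SBATCH lines are comments that the shell never expands, the computed value is passed on the sbatch command line, where it takes precedence over the --array line in the script.

```sh
# array_spec: build an --array value with one index per piece listed
# in a file such as parts.txt, optionally limiting simultaneous tasks.
#   array_spec parts.txt    -> 0-4    (for five pieces)
#   array_spec parts.txt 3  -> 0-4%3
array_spec() {
    local n spec
    n=$(grep -c . "$1" || true)   # count non-empty lines
    if [ "${n:-0}" -lt 1 ]; then
        echo "array_spec: no pieces listed in $1" >&2
        return 1
    fi
    spec="0-$((n - 1))"
    if [ -n "${2:-}" ]; then
        spec="$spec%$2"
    fi
    printf '%s\n' "$spec"
}

# Usage:
#   sbatch --array="$(array_spec parts.txt 3)" ./ffmpeg3.sh
```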

Each array task runs as a separate job with a single task, so --ntasks=1 can be omitted. Instead of the actual file name, the command uses the variable $SLURM_ARRAY_TASK_ID, which Slurm sets for each array task to one of the numbers from the range given by the --array switch. We also added the %a placeholder to the log file name, which Slurm likewise replaces with a number from that range, so that each task writes its log to its own file. $SLURM_ARRAY_TASK_ID is an environment variable that Slurm sets for each task; when Slurm reads the #SBATCH lines, this variable does not exist yet, so in Slurm's switches we must use the %a placeholder instead.
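If the piece names do not follow a simple numeric pattern, each array task can instead look up its file in the list of pieces. A sketch with a hypothetical helper, part_for_task, which prints line number SLURM_ARRAY_TASK_ID + 1 of parts.txt, so that task 0 gets the first piece.

```sh
# part_for_task: print the piece that belongs to an array task.
# Arguments: list file (e.g. parts.txt) and task index (0-based).
part_for_task() {
    sed -n "$(( $2 + 1 ))p" "$1"
}

# Usage inside the array job script:
#   part=$(part_for_task parts.txt "$SLURM_ARRAY_TASK_ID")
#   srun ffmpeg -y -i "$part" -codec:a copy -filter:v scale=w=iw/2:h=ih/2 "out-$part"
```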

After this step, the working directory contains the files out-part-0.mp4 to out-part-4.mp4 with the processed pieces of the original clip.

Step 3: Assembling

All we have to do now is combine the files out-part-0.mp4 … out-part-4.mp4 into one clip. To do this, we need to give ffmpeg a list of the pieces we want to merge. We list them in the file out-parts.txt with the following content:

```
file out-part-0.mp4
file out-part-1.mp4
file out-part-2.mp4
file out-part-3.mp4
file out-part-4.mp4
```

This file can be created from the existing list of pieces of the original clip, parts.txt. First rename the file to out-parts.txt, then open it in a text editor and replace every occurrence of the string part with the string file out-part.

More elegantly, we can create the list of pieces from the command line with the sed (stream editor) program:

```
$ sed 's/part/file out-part/g' < parts.txt > out-parts.txt
```

Finally, from the list of pieces in the file out-parts.txt, we compose the output clip out-bbb.mp4:

```
$ srun --ntasks=1 ffmpeg -y -f concat -i out-parts.txt -c copy out-bbb.mp4
```

At the end, we can remove the temporary files, for example with the file transfer program.

Steps 1, 2, 3 in one go

In the previous sections, we sped up video processing by dividing the work into several pieces, which we processed as multiple parallel tasks. We ran ffmpeg on each piece; each task used one core and knew nothing about the remaining pieces. Such an approach can be used whenever the problem can be divided into independent pieces, and it requires no changes to the processing program.

In principle, each task is limited to one processor core. However, using threads, a single task can use multiple cores, and the ffmpeg program can use threads for many operations. The three steps above can therefore also be performed with a single command. We now run the job as a single task for the whole file, and ffmpeg divides the processing according to the number of cores we assign to it.

So far, we have done all the steps using the FFmpeg module. This time we use the ffmpeg_alpine.sif container. If we haven't already, we transfer the FFmpeg container from the link to the cluster. When using the container, we add singularity exec ffmpeg_alpine.sif before the call to ffmpeg. Three programs are now involved in the command:

- srun sends the job to Slurm and starts the singularity program,

- the singularity program starts the ffmpeg_alpine.sif container, and

- inside the ffmpeg_alpine.sif container, the ffmpeg program is started.

```
$ srun --ntasks=1 --cpus-per-task=5 singularity exec ffmpeg_alpine.sif ffmpeg \
    -y -i bbb.mp4 -codec:a copy -filter:v scale=w=iw/2:h=ih/2 out123-bbb.mp4
```

The process we previously carried out ourselves in three steps is now done by ffmpeg in about the same amount of time. With the --cpus-per-task switch, we asked Slurm to reserve 5 processor cores for each task in our job.

While working, ffmpeg displays its status in the last line:

```
frame=17717 fps=125 q=29.0 size= 48128kB time=00:09:50.48 bitrate= 665.7kbits/s speed=4.16x
```

The speed value tells us that encoding runs 4.16 times faster than real-time playback. In other words, if the video lasts about 10.5 minutes, we will spend about 10.5/4.16 ≈ 2.5 minutes encoding.
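The same estimate can be made from a script. A sketch with two helper functions of our own: speed_of extracts the speed factor from an ffmpeg status line, and encode_minutes divides the video length by it. ffmpeg writes the status line to standard error and refreshes it with carriage returns, so a saved log (the file name ffmpeg.log below is hypothetical) may need tr '\r' '\n' before the last value is read.

```sh
# speed_of: extract the speed factor from an ffmpeg status line,
# e.g. "... speed=4.16x" -> 4.16 (spaces after "=" are allowed).
speed_of() {
    sed -n 's/.*speed= *\([0-9.]*\)x.*/\1/p'
}

# encode_minutes: expected encoding time in minutes for a video of the
# given length (minutes) at the given speed factor, to one decimal.
encode_minutes() {
    awk -v len="$1" -v speed="$2" 'BEGIN { printf "%.1f\n", len / speed }'
}

# Usage with a saved log (status updates are separated by carriage returns):
#   s=$(tr '\r' '\n' < ffmpeg.log | speed_of | tail -n 1)
#   encode_minutes 10.5 "$s"
```

With the status line above, speed_of returns 4.16 and encode_minutes 10.5 4.16 prints 2.5.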

Use of graphical process units

The graphics processing unit (GPU) is a special processor, originally designed to draw images on the screen. GPUs are optimized for fast computing with vectors and matrices, which is a common operation when working with graphics. Although GPUs are not suitable for solving general problems, the operations they perform very quickly prove useful in other domains as well, such as machine learning and cryptocurrency mining. Modern GPUs also have built-in support for encoding and decoding certain video formats. Let's see how we can use a GPU to convert a video to another format faster.

Programs such as ffmpeg can use GPUs from different manufacturers through programming interfaces, of which OpenCL and CUDA are the most commonly used. CUDA is intended only for Nvidia GPUs, which are also installed in the NSC cluster.

Preparing the container

The ffmpeg program does not support GPUs by default, so it has to be compiled with special settings. Here we will use an existing Docker container that already contains a suitable build of ffmpeg. We convert it to a Singularity container with the command:

```
$ singularity pull docker://jrottenberg/ffmpeg:4-nvidia
```

This creates the ffmpeg_4-nvidia.sif container in the current directory. We use this container as usual with the singularity exec command:

```
$ singularity exec ffmpeg_4-nvidia.sif ffmpeg -version
ffmpeg version 4.0.6 Copyright (c) 2000-2020 the FFmpeg developers
built with gcc 7 (Ubuntu 7.5.0-3ubuntu1~18.04)
configuration: … --enable-nonfree --enable-nvenc --enable-cuda --enable-cuvid …
```

The printout shows the configuration settings that enable support for GPUs (CUDA) and related technologies. The hardware acceleration methods supported by a given build of ffmpeg can be checked with the -hwaccels argument:

```
$ singularity exec ffmpeg_4-nvidia.sif ffmpeg -hwaccels
ffmpeg version 4.0.6 Copyright (c) 2000-2020 the FFmpeg developers
…
Hardware acceleration methods:
cuda
cuvid
```

Video processing on a graphics processing unit

We will test the cuda method, which, among other things, lets ffmpeg work with H.264 video on a graphics processing unit. To use GPUs in jobs, we must give the appropriate arguments:

```
$ srun --gpus=1 singularity exec --nv ffmpeg_4-nvidia.sif ffmpeg \
    -hwaccel cuda -hwaccel_output_format cuda \
    -y -i bbb.mp4 -codec:a copy -filter:v scale_npp=w=iw/2:h=ih/2 \
    -codec:v h264_nvenc gpe-bbb.mp4
```

First, with the argument --gpus=1, we tell Slurm to allocate one GPU for the job. On the assigned node, we run the container with singularity exec --nv; the --nv switch allows programs in the container to access the GPU. Finally, we require ffmpeg to actually use graphics acceleration to encode the video. Because ffmpeg cannot automatically detect the presence of a GPU and select the appropriate functions, we specify the requirements ourselves:

- with -hwaccel cuda -hwaccel_output_format cuda, we decode the input on the GPU and keep the decoded frames in GPU memory,

- with scale_npp, we use a version of the scale filter prepared for GPUs (NPP stands for NVIDIA Performance Primitives) instead of the usual scale filter,

- with -codec:v h264_nvenc, we select the H.264 encoder that runs on the GPU (NVENC).

Other settings are the same as before. In the previous calls to ffmpeg that produced MP4 files, we did not specify an encoder, since H.264 (-codec:v h264) is the default for MP4 output and can therefore be omitted.

If all went well, the encoding is now much faster:

```
frame=19037 fps=273 q=14.0 Lsize= 162042kB time=00:10:34.55 bitrate=2091.9kbits/s speed= 9.1x
```

Unlike the previous approaches, this speed-up was not achieved by parallel processing at the file level; instead, we used hardware that can perform the operations required for encoding much faster. This, too, is parallel computing, but at a much lower level, as a GPU can perform hundreds or thousands of operations in parallel when encoding each individual frame.
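Before submitting GPU jobs, a script can check whether the ffmpeg build lists the cuda method. A sketch with a helper of our own, has_hwaccel, which reads the -hwaccels printout on standard input; note that this only shows that ffmpeg was compiled with the method, not that a GPU is actually present on the node.

```sh
# has_hwaccel: read the output of "ffmpeg -hwaccels" on standard input and
# succeed if the given method (e.g. cuda) is listed after the
# "Hardware acceleration methods:" header.
has_hwaccel() {
    awk -v want="$1" '
        listed && $1 == want { ok = 1 }
        /^Hardware acceleration methods:/ { listed = 1 }
        END { exit (ok ? 0 : 1) }'
}

# Usage on the cluster:
#   if singularity exec ffmpeg_4-nvidia.sif ffmpeg -hide_banner -hwaccels 2>&1 \
#          | has_hwaccel cuda; then
#       echo "this ffmpeg build supports cuda"
#   fi
```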


Exercise 1: Tools for working with a cluster

a) Signing in to the cluster

Sign in to the cluster. If necessary, install a program for accessing the remote computer via the SSH protocol.

b) File transfer programs

Install a program for transferring files between a personal computer and a cluster and connect to the cluster with it.

Create a text file file-or.txt on the desktop of your personal computer and write a few words in it. Create a new folder exercise1b on the cluster and upload file-or.txt to it. Open and edit the file on the remote computer (change the text) and save the change. Then rename the file to file-g.txt and transfer it back to the desktop of your personal computer. Check whether the changes have been preserved.

Using the file transfer program, create a file text.txt in the exercise1b folder on the remote computer, open it, type a few words, and save it.

c) Work directly on the cluster

In the terminal:

- use the command cd exercise1b to move to the folder from the previous exercise,

- use the pwd command to check which folder you are in,

- use the command cat text.txt to look at the contents of the file,

- if desired, use the command nano text.txt to open the file in an editor, and

- finally, use the command cd .. to return to the home folder of your user account.


Exercise 2: Cluster information

a) Print cluster information

Run the commands from the section on the sinfo command and review the printouts. Note the name of one of the compute nodes (column NODELIST); we will need it in Exercise 2c.

b) Printout of information on jobs in the queue

Run the commands from the squeue section and review the printouts. In the printout of the squeue command without switches, note a user and the identifier (JOBID) of one of their jobs. The user name will be needed when calling squeue with switches in this exercise, and the job identifier in Exercise 2c for obtaining more detailed information.

c) Printout of more detailed information

Run the commands from the scontrol section and review the printouts. For the individual commands, use the node name, user, and job identifier noted in Exercises 2a and 2b.

Exercise 3: Creating and running cluster jobs

a) The srun command

Start the following jobs:

- Four instances of the hostname program on one compute node on the fri reservation. Set the job name to my_job. Use the srun command.

- Two instances of the hostname program on each of the two computing nodes on the fri reservation. Set the amount of memory per processor to 10 MB. Use the srun command.

b) The sbatch and scancel commands

Using the sbatch command, run the following jobs:

- Run four instances of the hostname program on a single node with the sbatch command. Follow the example in the chapter Starting jobs on a cluster, section Sbatch command.

- Run the program sleep 600 with the sbatch command. The program waits 600 seconds after startup and finishes without printing anything. Use the reservation fri. As a basis for the script, use the example presented, extending the time limit accordingly. Wait for the job to start (state R), then terminate it prematurely with the scancel command.

c) The salloc command

With the salloc command, reserve enough resources for one task (one processor core) on the fri reservation. Connect to the appropriate node with the ssh command and run the command cat /proc/cpuinfo on it, which shows details of the installed processor. When you are done, release the reserved resources.

Exercise 4: Video Processing

In this exercise, we use the examples presented in the chapter on video processing.

a) Using ffmpeg

Load the FFmpeg module and run the command ffmpeg -version, first on the login node and then on a compute node.

b) Convert the video

Upload the short video llama.mp4 to the cluster, convert it to the mov format, and transfer the output file back to your computer. Watch it there in your favorite player.

c) Using filters

- Process the video from the previous exercise with ffmpeg using one of the listed filters. Be sure to run the job on a compute node (use the srun command and the appropriate reservation, if you have one).

- Repeat the process with another filter, specifying another output file. Transfer the processed videos back to your computer and view them.


Exercise 5: Parallel video processing

In this exercise, we use the examples presented in the chapter on parallel video processing.

a) Video segmentation

Download the big-buck-bunny video to your PC and transfer it to the NSC cluster. Then cut it into pieces lasting 80 seconds each. Although this operation is not demanding, as it only copies parts of the video to new files, we still run it on a compute node with the srun command. Use the reservation fri. Remember to load the FFmpeg module.

b) Processing

Increase the resolution of the video pieces created in the previous task by a factor of 1.5. We use the sbatch command and job arrays. The script ffmpeg3.sh can serve as a starting point.

c) Assembling

We combine the pieces from exercise 5b into a single clip, download it to our computer and watch it in a player.

Exercise 6: Processing videos with containers

In this exercise, we use the examples presented in the chapter on the use of graphics processing units.

a) Prepare a container for graphics processing units

Prepare the container for graphics processing units according to the instructions in the chapter Use of graphics processing units.

b) Processing

Run the ffmpeg program on a graphics processing unit. Increase the resolution of bbb.mp4 by a factor of 1.5. We use the sbatch command and job arrays. More detailed instructions can be found in the chapter Video processing on a graphics processing unit.


About the course

Patricio Bulić, University of Ljubljana, Faculty of Computer and Information Science

Content

At this workshop, we will get acquainted with the structure of graphics processing units (GPUs) and their programming. Through simple examples, we will learn to write and run programs on the GPU, transfer data between the host and the GPU, and use the GPU memory efficiently. Finally, we will write a parallel image processing program that runs on the GPU. We will program in the C programming language and use the OpenCL framework, which supports GPUs from various manufacturers (Nvidia, AMD, Intel). Other frameworks take the same approach to using GPUs. With the acquired knowledge, you will easily start programming Nvidia graphics cards in the CUDA framework or replace C with another programming language.

The course of events

Day 1

Getting to know the hardware structure and operation of the GPU; learning about the connection between the host and the GPU; getting acquainted with the programming and memory models of the GPU; learning about the OpenCL environment; writing, compiling, and running a simple OpenCL program on a GPU.

Day 2

In a practical example, we will learn step by step how to implement computational tasks efficiently on the GPU. This covers parallelizing them, dividing the work among processing units, and using the GPU memory efficiently.

Day 3

Processing images on the GPU: we will read the images on the host, transfer them to the GPU, process them there with an interesting filter, and transfer the processed images back to the host.

Prerequisites

- Familiarity with an SSH client and the Slurm middleware (see the contents of the workshop Basics of Supercomputing)

- Programming experience; knowledge of the C programming language is desirable

Acquired knowledge

After the workshop you will:

- understand how a heterogeneous computer system with a GPU works,

- understand how a GPU works,

- know the OpenCL software framework,

- know how to write, compile and run GPU programs,

- be able to divide work efficiently among processing units and use memory on the GPU efficiently,

- be able to make sensible use of ready-made GPU libraries.

Instructions for installing Visual Studio Code

At the workshop, we will program in the Visual Studio Code development environment and run the programs on the NSC cluster. In Visual Studio Code, we will therefore use the Remote-SSH extension, which allows us to connect to the NSC cluster via the SSH protocol. With this extension, we can open and edit files directly on the NSC cluster, run commands, and even debug programs running on it. Instructions for installing Visual Studio Code and the Remote-SSH extension, and for connecting to the NSC cluster, can be found here.

Program code

The program code of the problems that will be discussed at the workshop can be found at https://repo.sling.si/patriciob/opencl-delavnica. You can download it to your computer with the command:

$ git clone https://repo.sling.si/patriciob/opencl-delavnica.git

The material is published under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

The workshop is prepared under the auspices of the European project EuroCC, which aims to establish national centers of competence for supercomputing. More about the EuroCC project can be found on the SLING website.


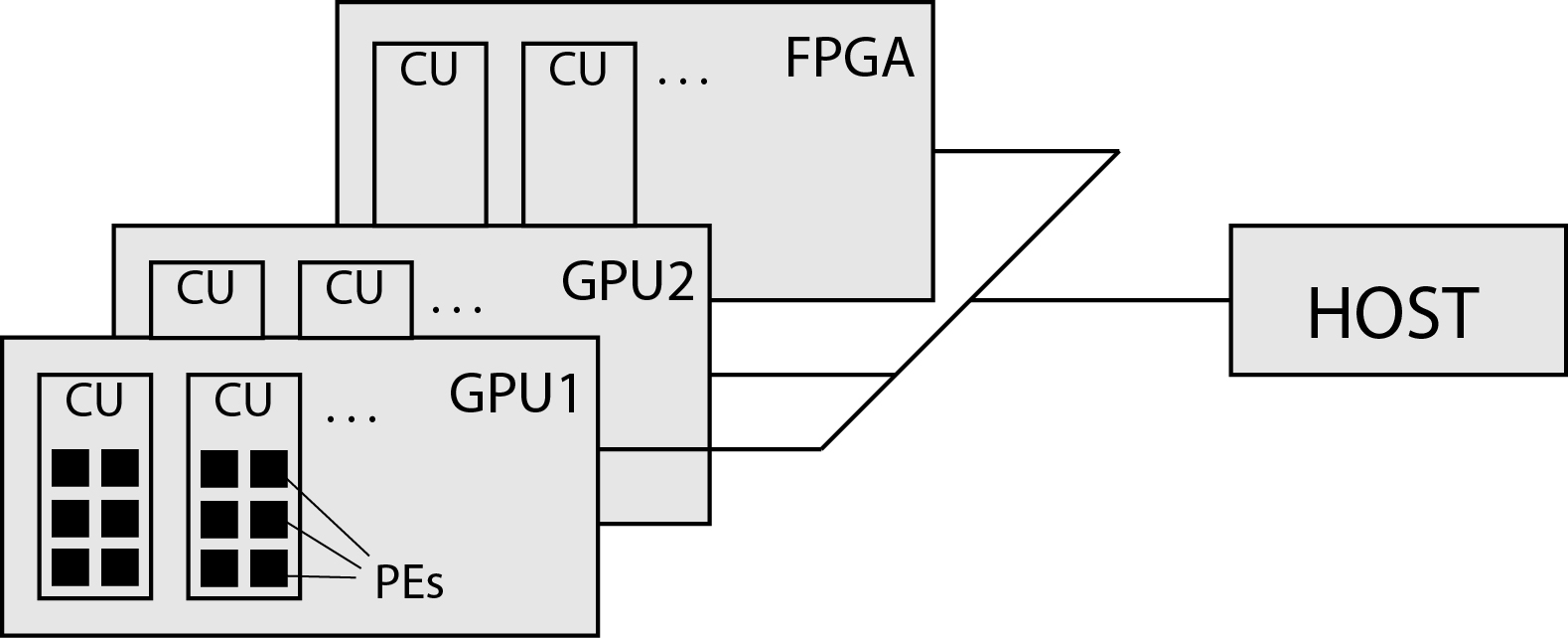

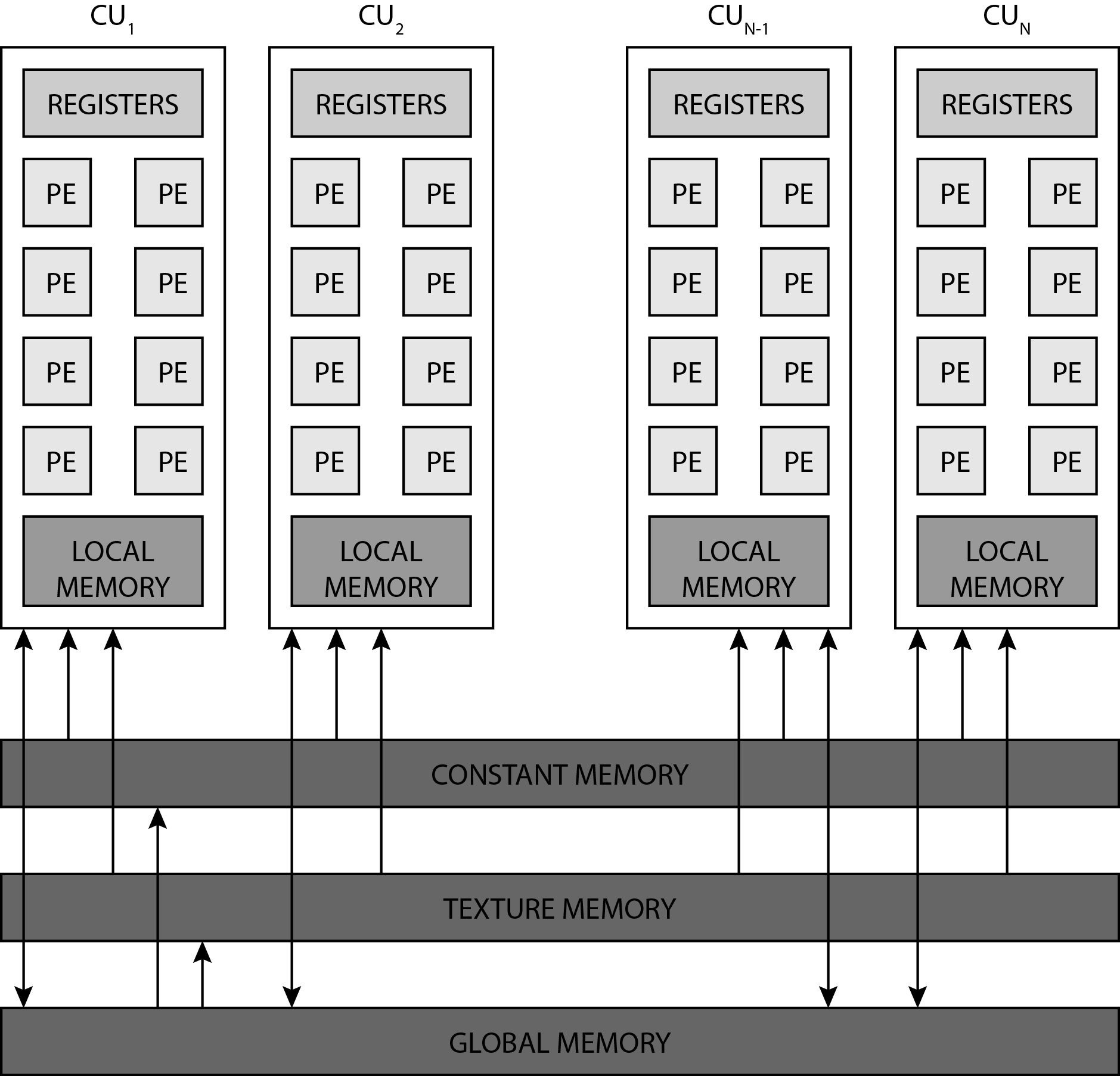

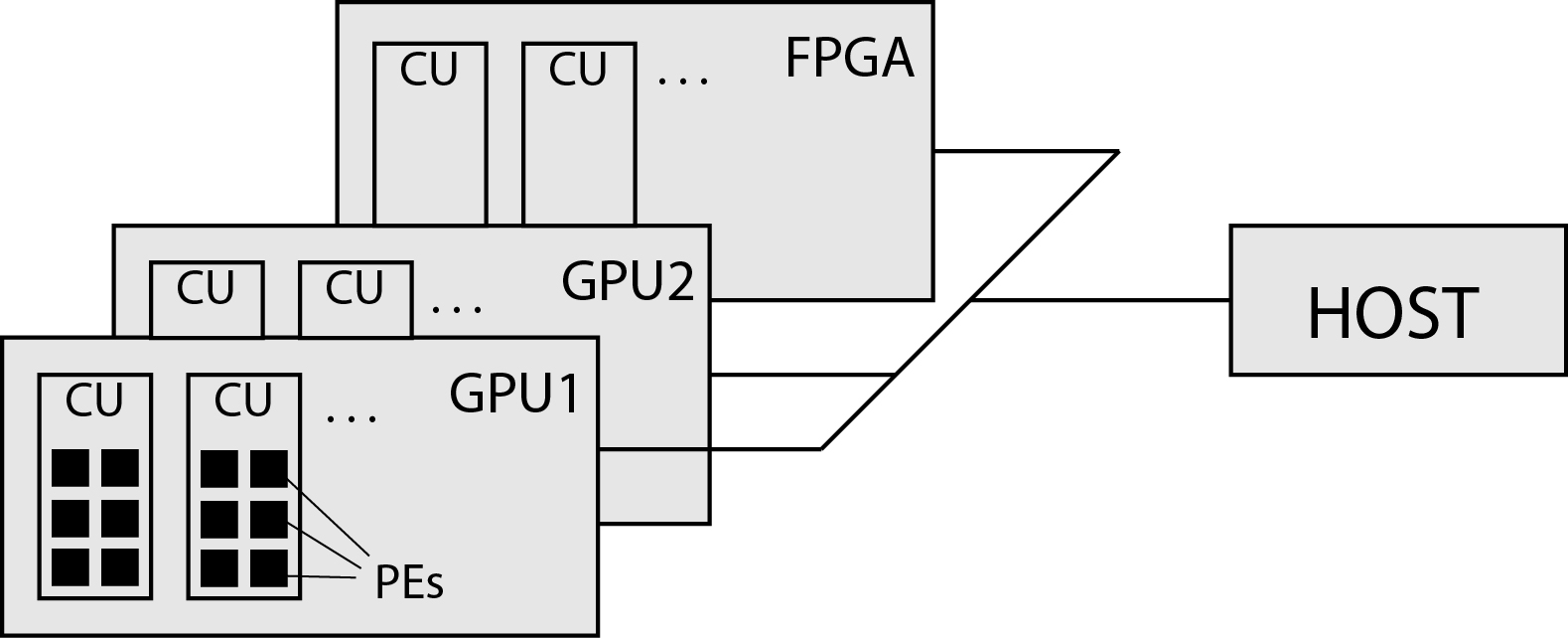

Heterogeneous systems

A heterogeneous computer system is a system that also contains one or more accelerators, in addition to the classic central processing unit (CPU) and main memory. Accelerators are computing units with their own processing elements and their own memory. They are connected to the central processing unit and main memory via a fast bus. The computer to which the accelerators are attached, with its CPU and main memory, is called the host. The figure below shows an example of a general heterogeneous system. Many kinds of accelerators exist today; the most widely used are graphics processing units (GPUs) and field-programmable gate arrays (FPGAs). Accelerators have a large number of dedicated processing units and their own dedicated memory. Their processing units are usually adapted to specific problems (for example, performing a large number of matrix multiplications). They can solve these problems quickly, typically much faster than a CPU. An accelerator is called a device.


Devices process data in their memory (and their address space) and in principle do not have direct access to the main memory on the host. On the other hand, the host can access the memories on the accelerators, but cannot address them directly. It accesses the memories only through special interfaces that transfer data via the bus between the device memory and the main memory of the host.

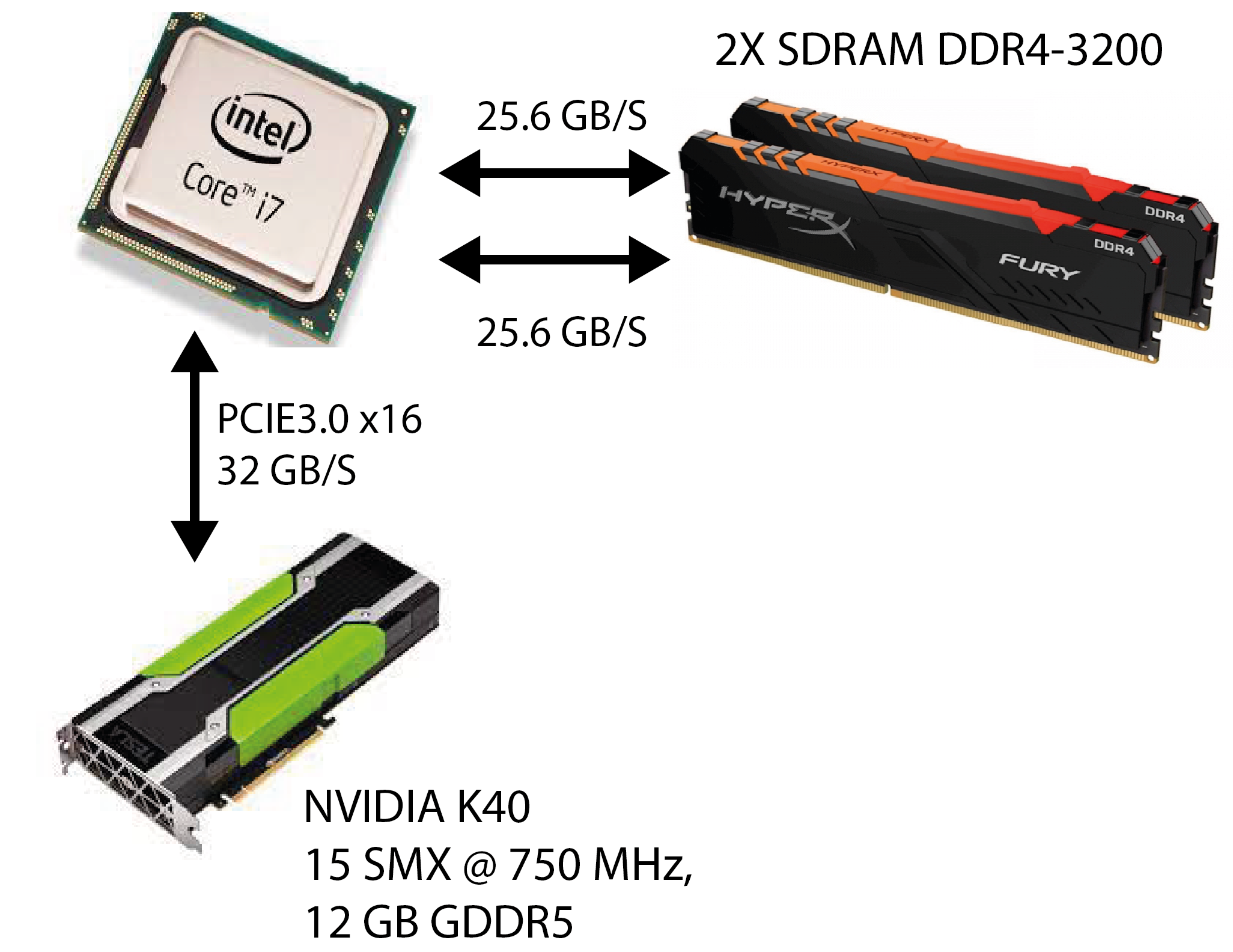

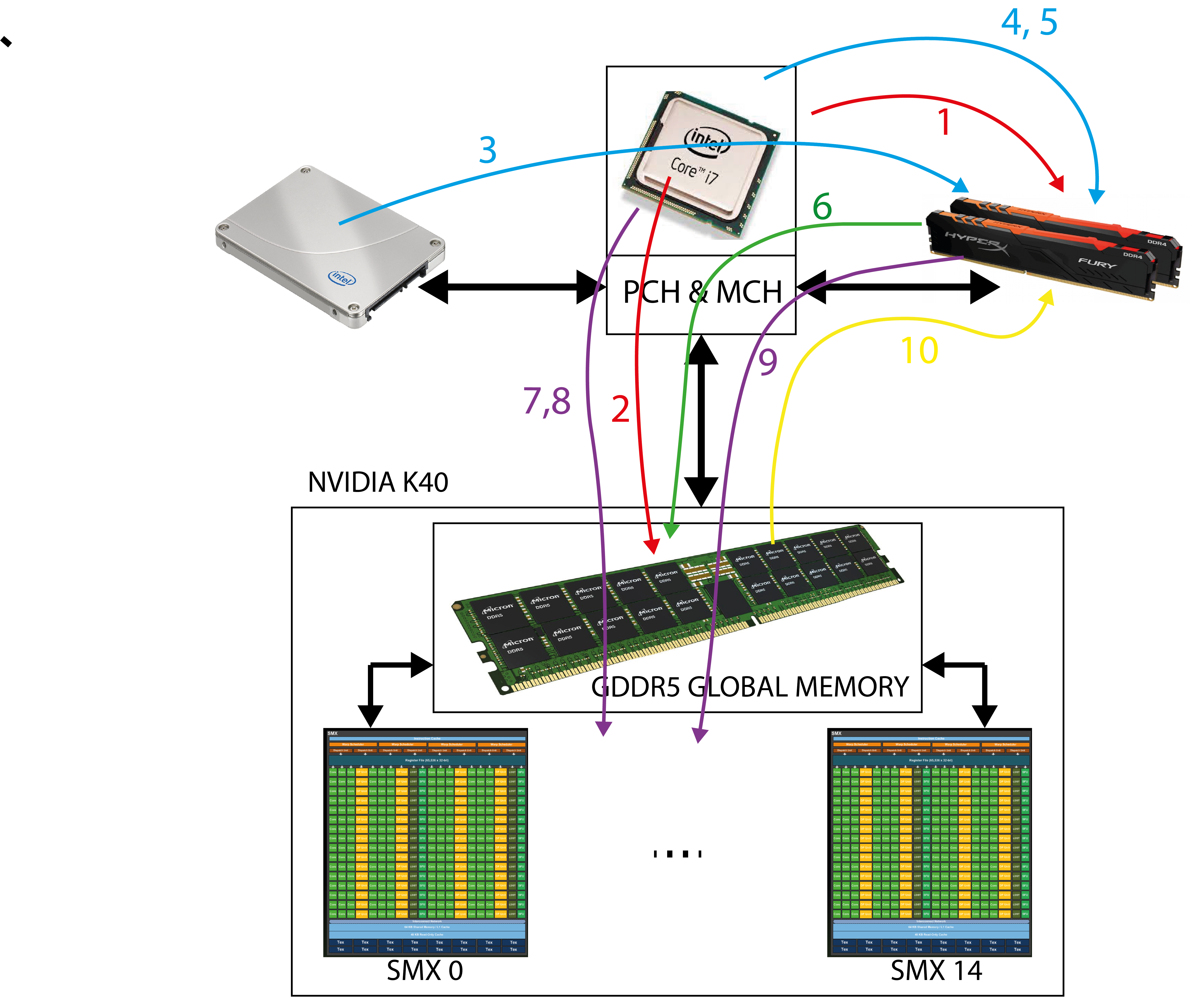

The figure below shows the organization of a smaller heterogeneous system, such as one found in a personal computer. The host is a desktop computer with an Intel i7 processor. The host's main memory consists of DDR4 DIMMs connected to the CPU via a memory controller and a dual-channel bus. The CPU can address this memory directly (for example, with LOAD/STORE instructions) and store instructions and data in it. The theoretical maximum transfer rate of a single channel between the DIMMs and the CPU is 25.6 GB/s. The accelerator in this heterogeneous system is an Nvidia K40 graphics processing unit. It has a large number of processing units (we will get to know them below) and its own memory, in this case 12 GB of GDDR5 memory. The memory on the GPU can be addressed by the processing units on the GPU, but not by the CPU on the host. Likewise, the processing units on the GPU cannot address the host's main memory. The GPU (device) is connected to the CPU (host) via a high-speed 16-lane (x16) PCIe 3.0 bus. This bus transfers data at up to about 32 GB/s, or about 16 GB/s in each direction. Data between the host's main memory and the GPU memory can only be transferred by the CPU through special interfaces.

Programming of heterogeneous systems

We will use the OpenCL framework to program heterogeneous computer systems. Programs written in the OpenCL framework consist of two parts:

- a program that runs on the host and

- a program that runs on the device (accelerator).

A host program

In the workshop, the program on the host will be written in the C programming language. It is an ordinary C program that starts in the function main(). The task of the program on the host is to:

- determine which devices are in the system,

- prepare the necessary data structures for the selected device,

- create buffers to transfer data to and from the device,

- read from a file and compile the program that will run on the device,

- transfer the compiled program, together with the data, to the device and run it,

- after the program on the device finishes, transfer the results back to the host.

Program on the device

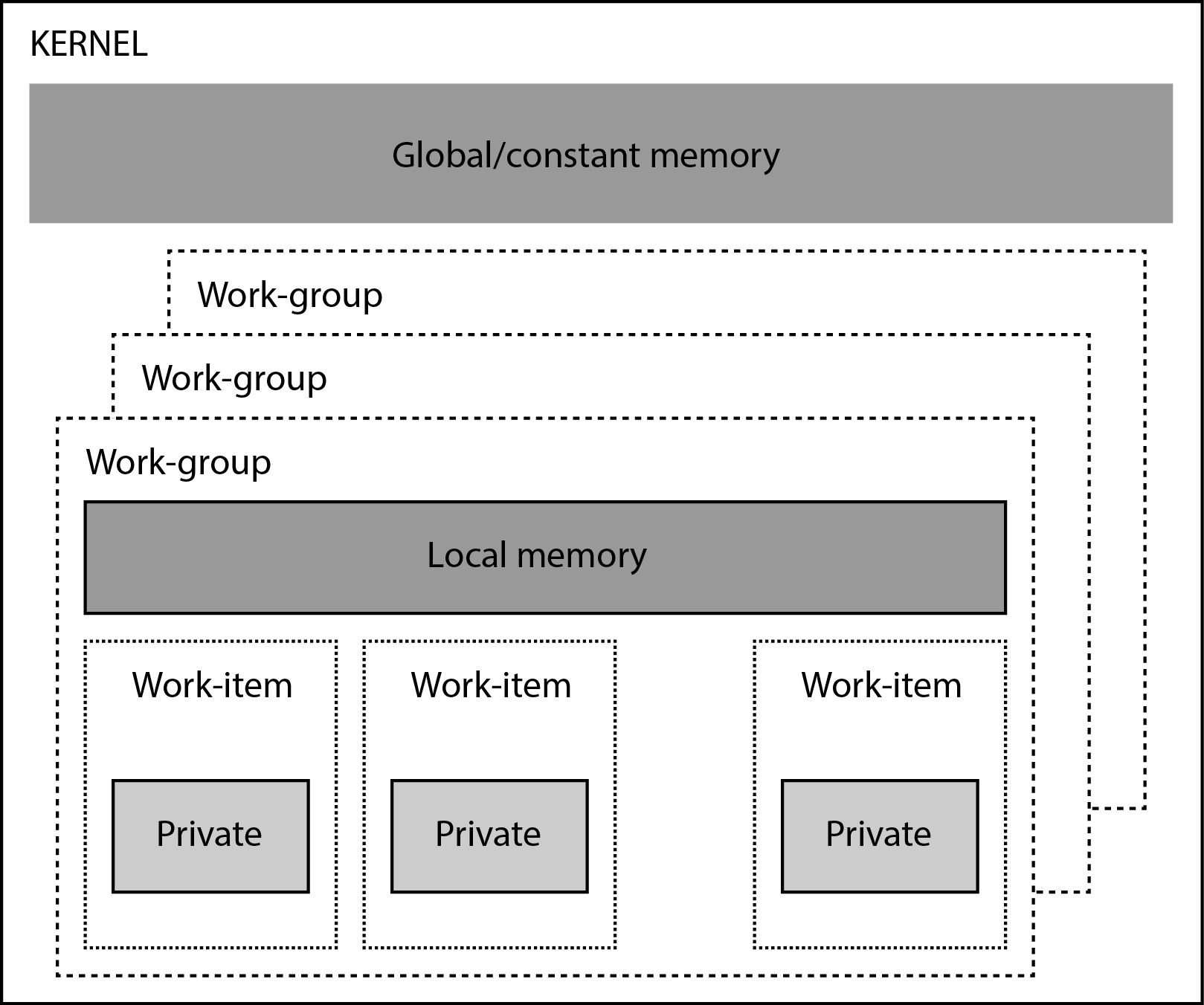

We will write programs for devices in the OpenCL C language. We will see that it is essentially the C language with some changed functionality. Programs written for the device are first compiled on the host. The host then transfers them, together with the data and arguments, to the device, where they are executed. A program running on the device is called a kernel. Kernels run in parallel: a large number of processing units on the device execute the same kernel. Below, we will get to know the execution model that the OpenCL framework provides for devices. The OpenCL framework provides the same execution model and the same memory hierarchy for all types of devices, so we can use it to program very different devices. In the workshop, we will limit ourselves to programming graphics processing units.

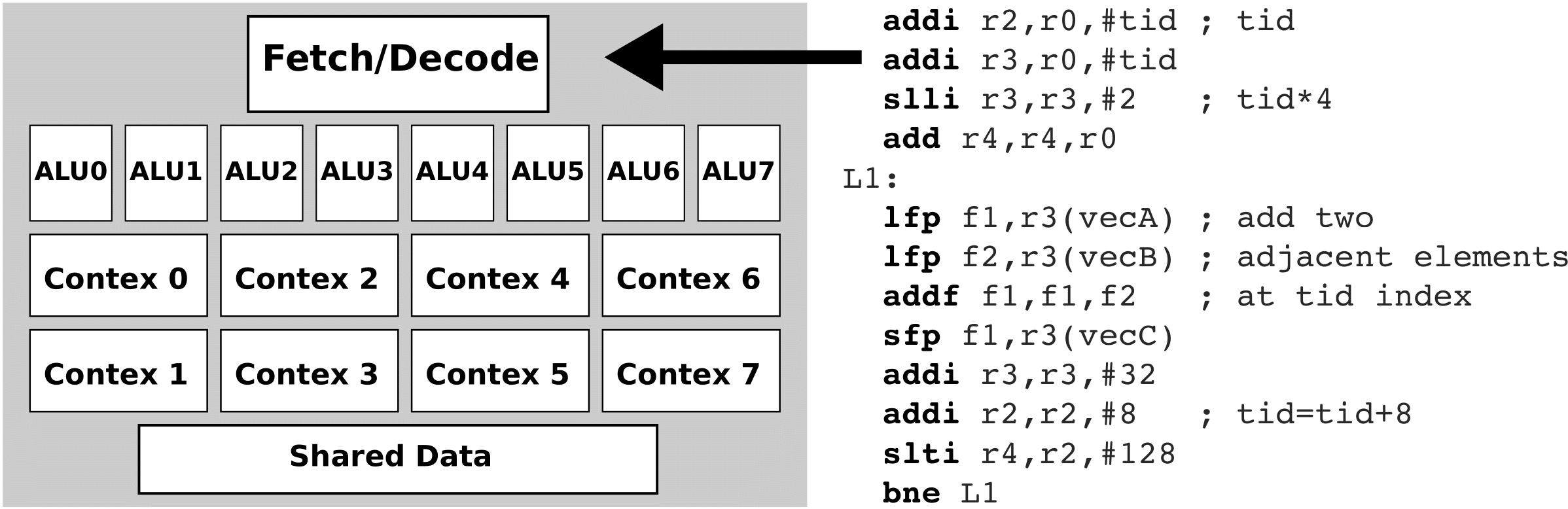

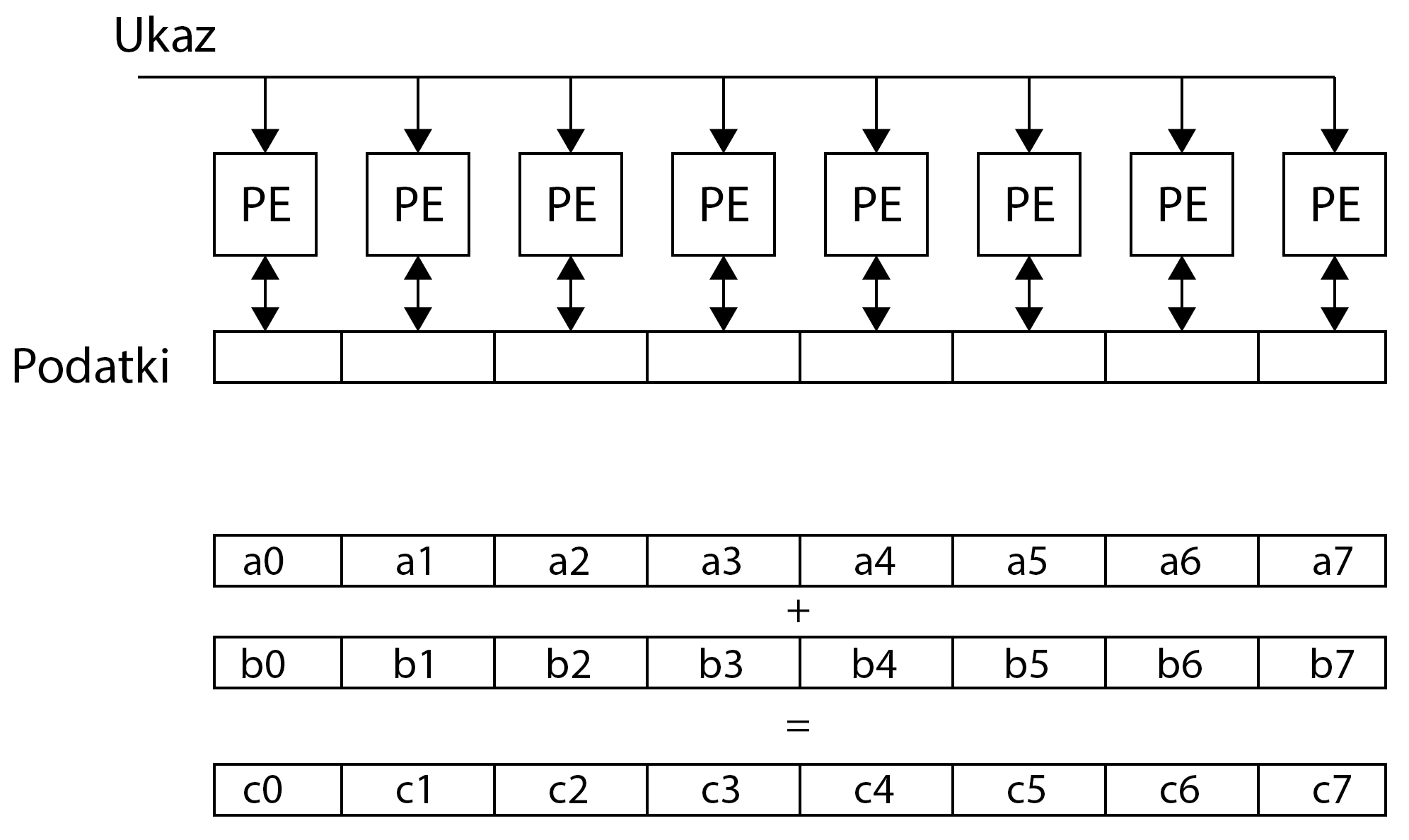

Anatomy of graphics processing units

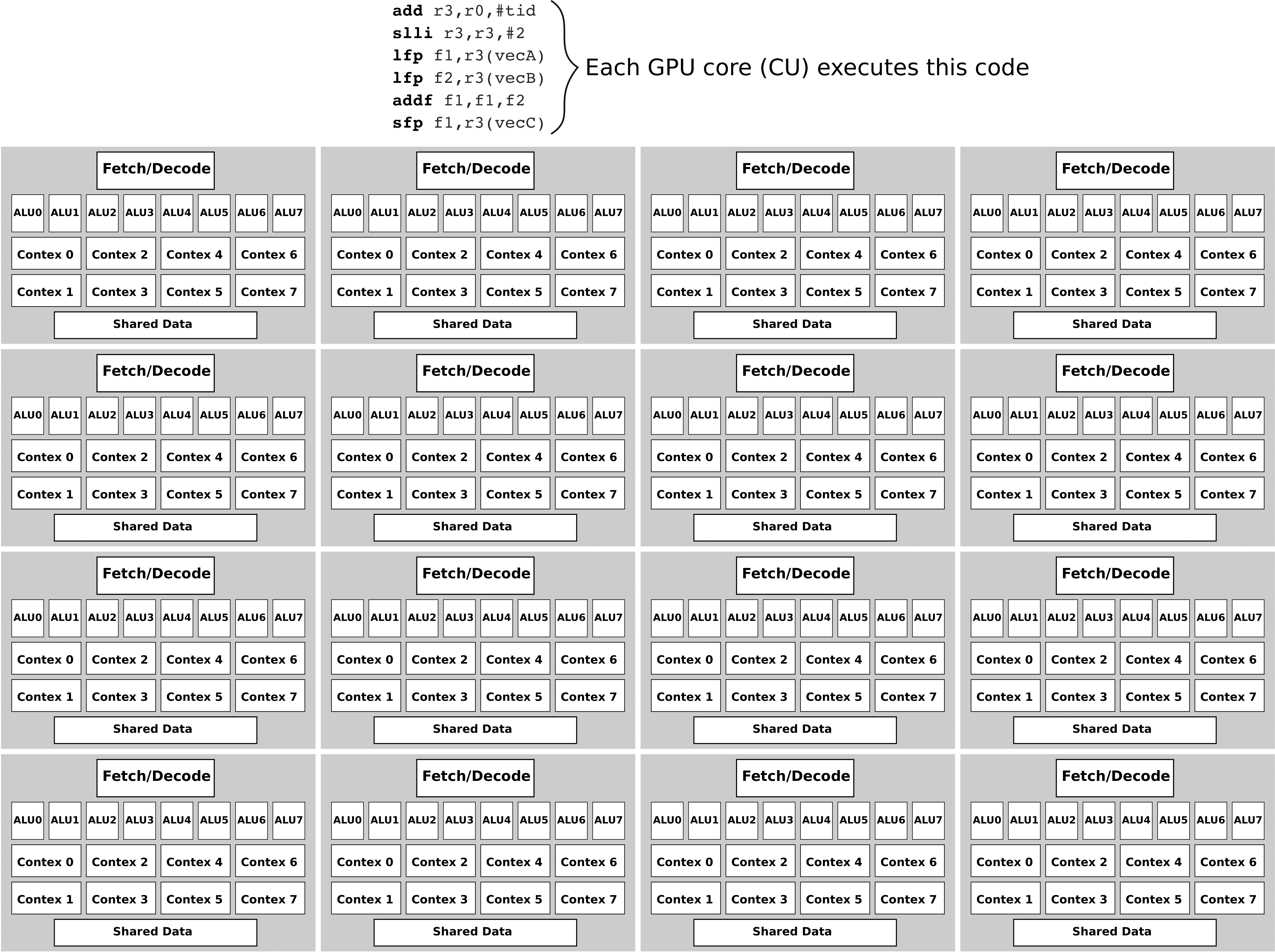

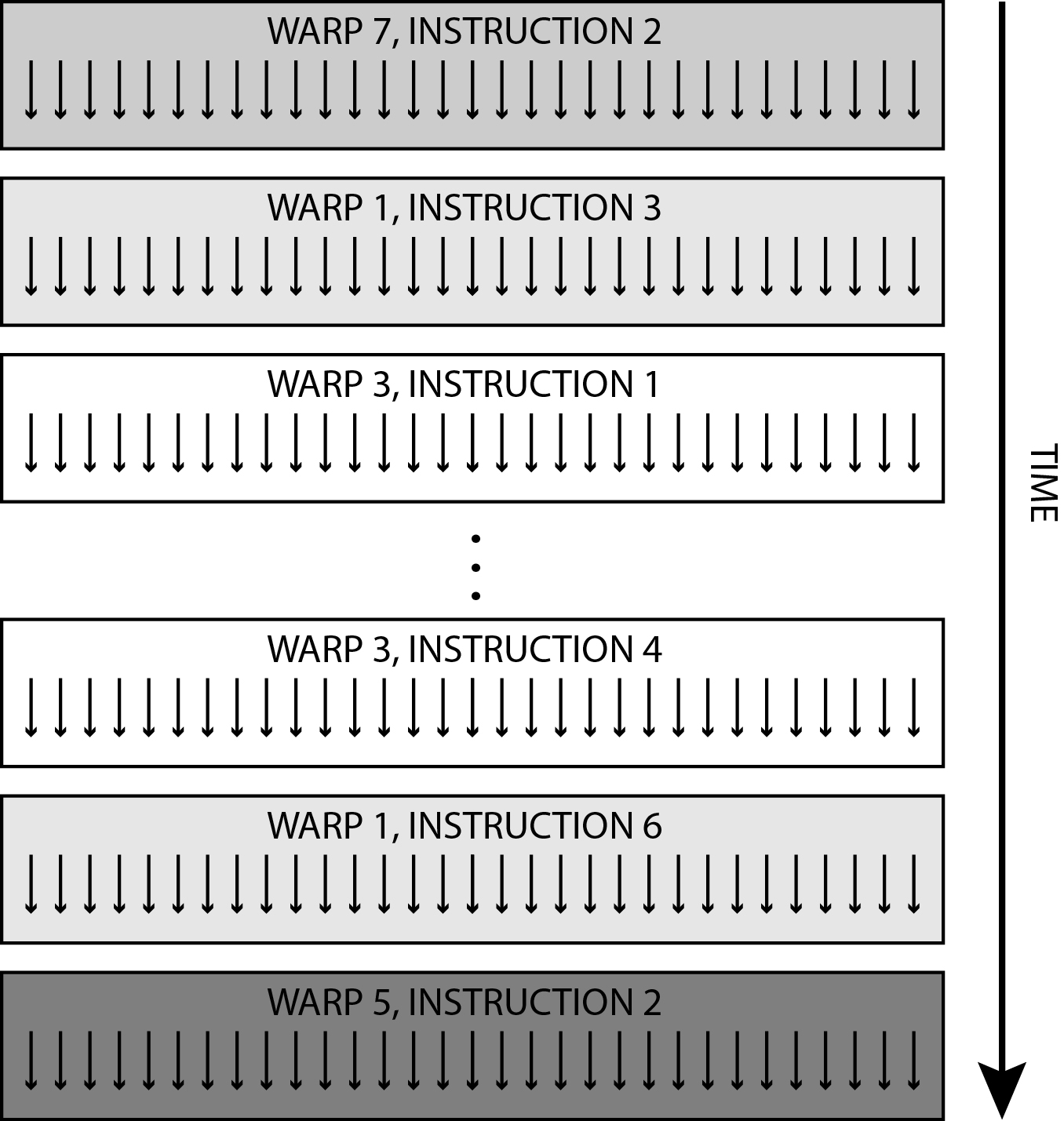

To make the operation and structure of modern graphics processing units easier to understand, we will first look at a simplified description of the idea that led to their emergence. GPUs were created to execute program code with a large number of relatively simple, repetitive operations. This work runs on many small processing units rather than on a large, complex, energy-hungry central processing unit. Typical problems that modern GPUs are designed for include image and video processing, operations on large vectors and matrices, deep learning, etc.

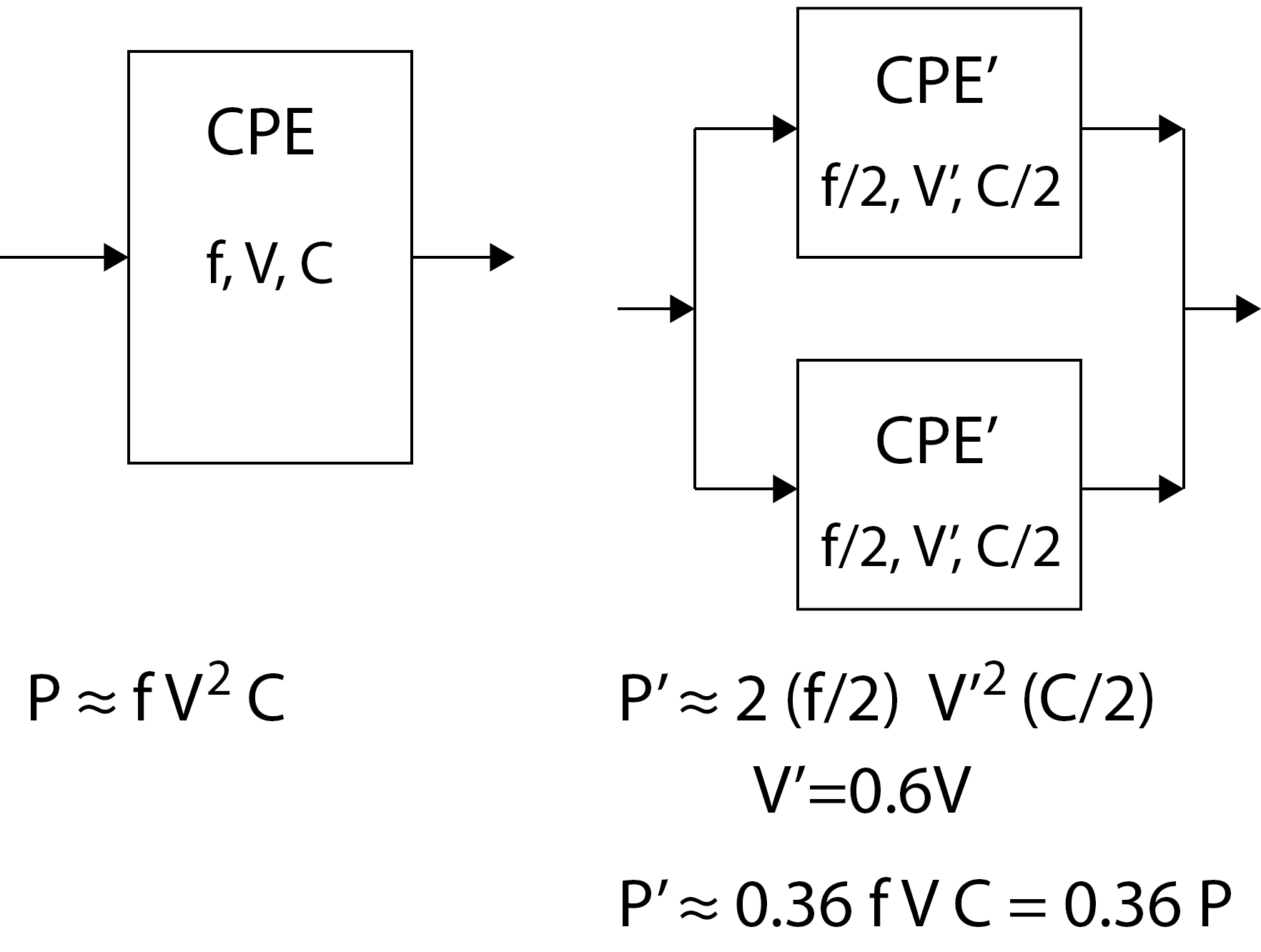

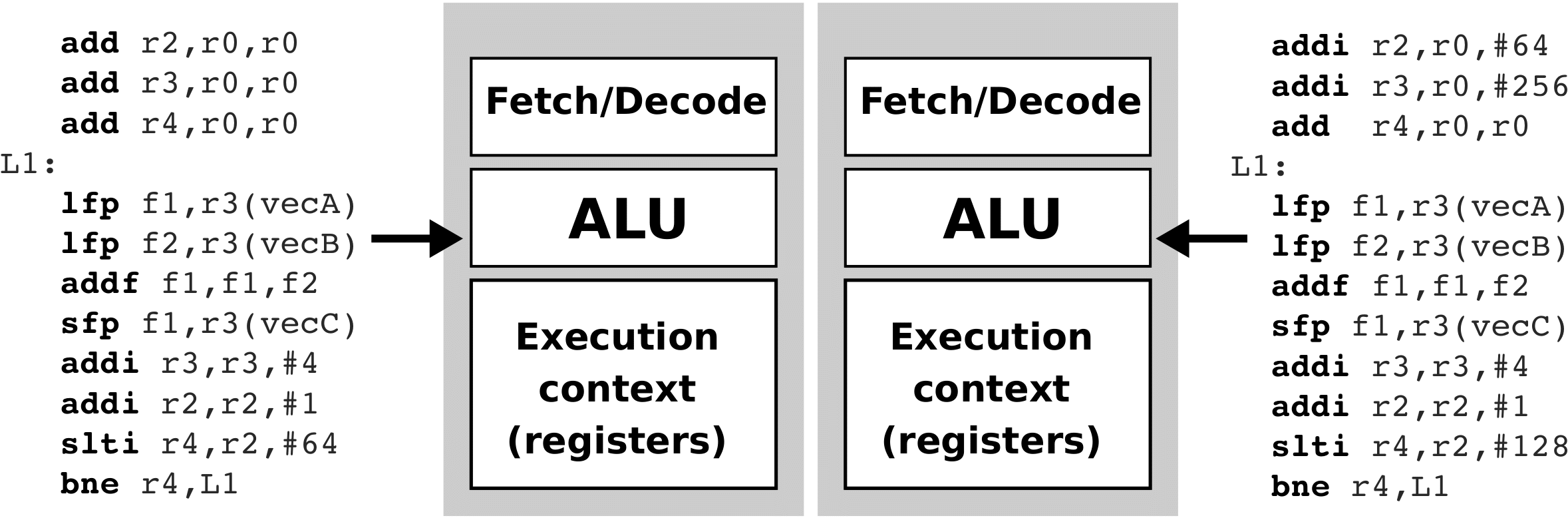

Modern CPUs are very complex digital circuits that execute instructions speculatively in multiple pipelines, and their instruction sets support a large number of diverse operations, some simple and some complex. CPUs have built-in branch prediction units and trace caches in the pipelines, which store the micro-operations of instructions waiting to be executed. Modern CPUs have multiple cores, and each core has at least a first-level (L1) cache. As a result, the cores of modern CPUs, together with their caches, occupy a lot of space on the chip and consume a lot of energy. In parallel computing, we strive to have as many simple processing units as possible that are small and energy efficient. These smaller processing units typically operate at a clock frequency several times lower than that of the CPU. Because they run at a lower frequency, they can operate at a lower supply voltage, which significantly reduces energy consumption. The figure above shows how power consumption can be reduced in a parallel system. On the left side of the image is a CPU that operates at clock frequency f. To operate at frequency f, it requires a supply voltage V. The internal capacitance of such a CPU is denoted C; it is a kind of inertia that resists rapid voltage changes on its digital signal lines, and it depends mainly on the CPU's size on the chip. The power P that the CPU needs for its operation is proportional to the capacitance, the square of the supply voltage and the clock frequency: P ∝ C·V²·f.


On the right side of the image, the same problem is solved with two processing units CPU' connected in parallel. Suppose that our problem can be broken down into two identical subproblems that can be solved separately, each on its own CPU'. Assume also that each CPU' is half the size of the CPU on the chip and operates at frequency f/2. Because the units work at half the frequency, they also need a lower supply voltage. As a rule of thumb, halving the clock frequency of a digital system lets it work at about 60% of the supply voltage, 0.6V. Since each CPU' is half the size, its capacitance is only C/2. The power P' that this parallel system needs is therefore P' = 2 · (C/2) · (0.6V)² · (f/2) = 0.18 · C·V²·f = 0.18 P, less than a fifth of the power of the single CPU.

The evolution of GPUs