Nvidia Tesla K40 GPU

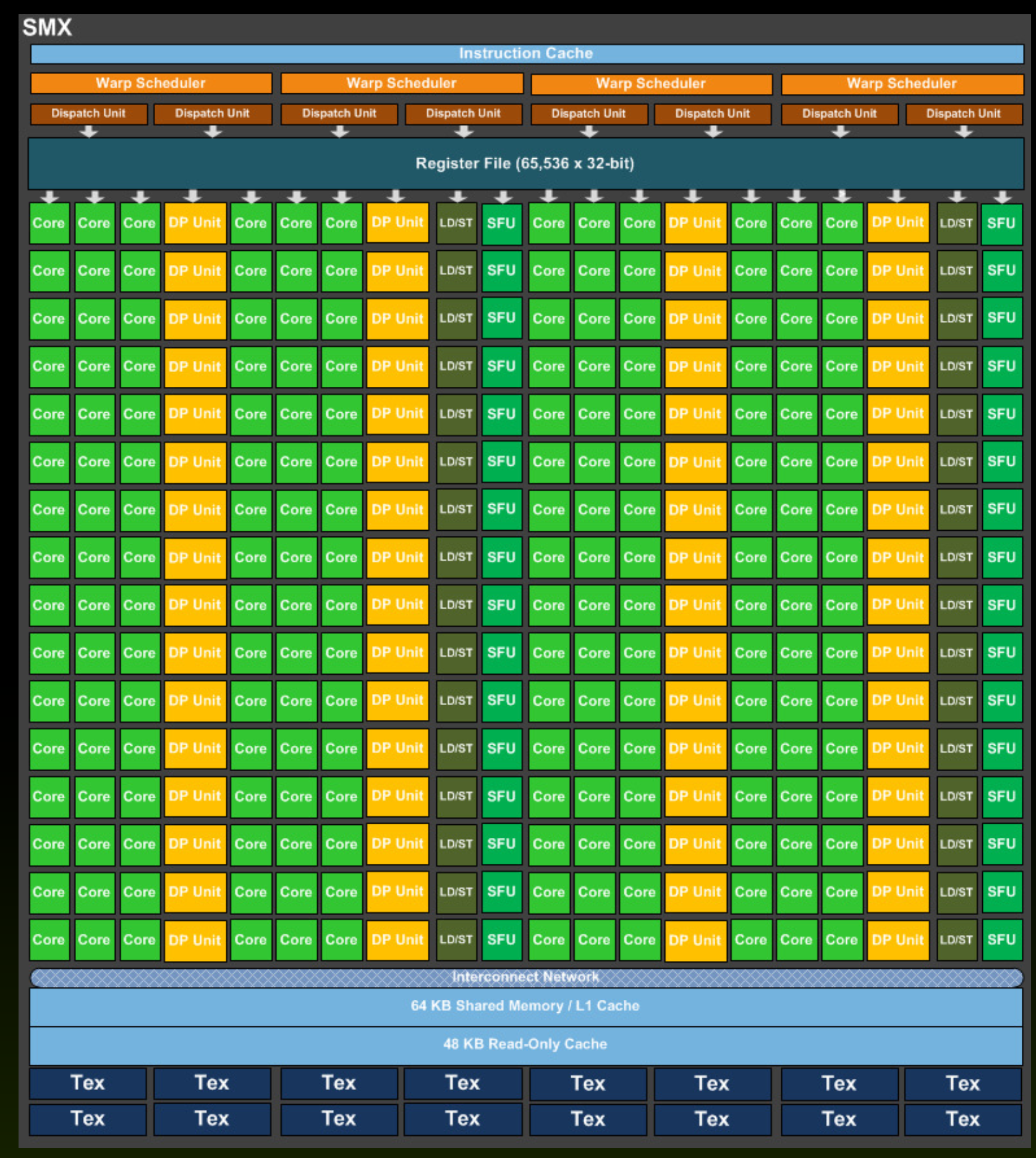

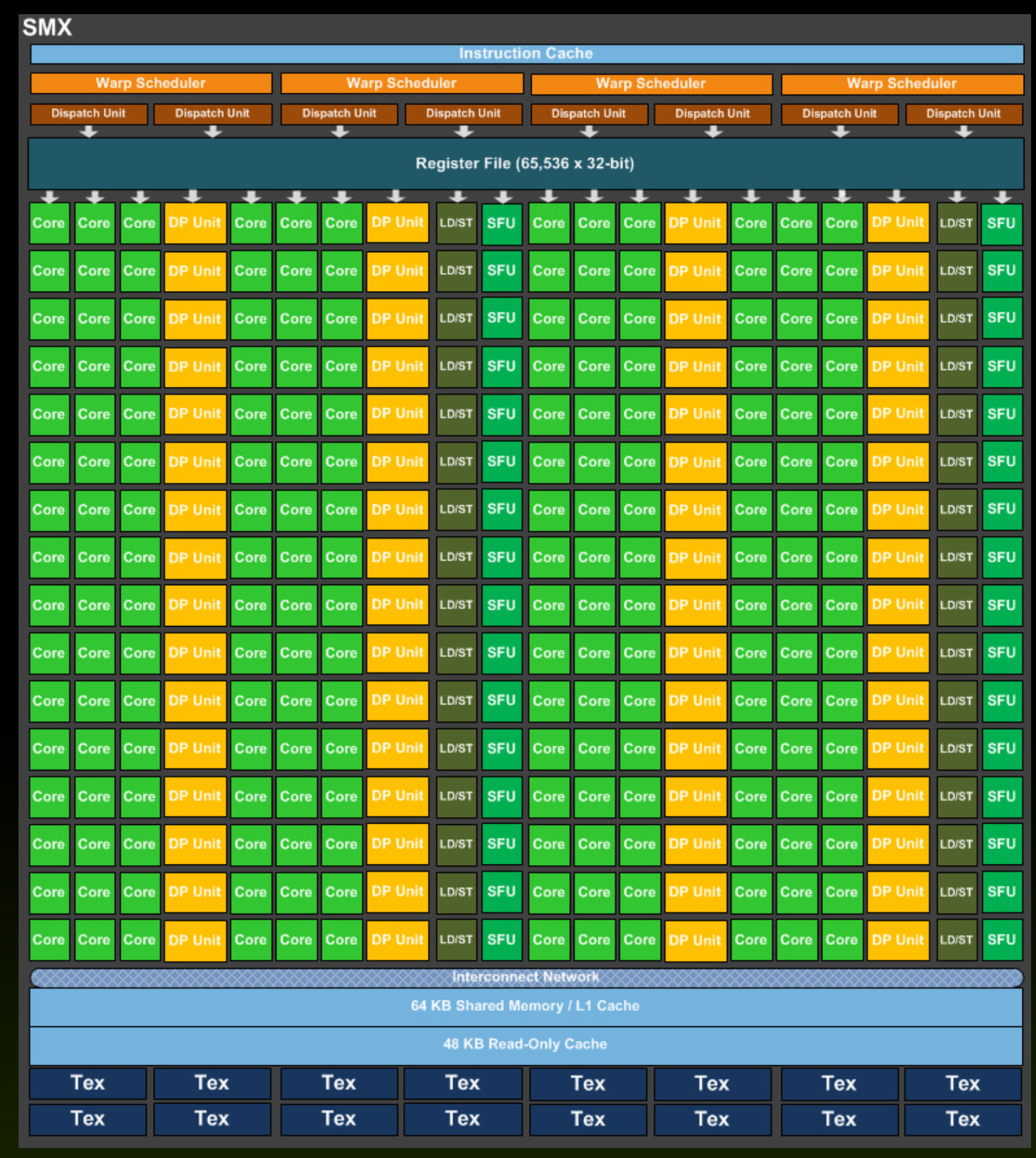

Let's look at the structure of a typical GPU, using the Nvidia Tesla K40 as an example (shown in the accompanying figure). It contains 15 compute units (CU), which Nvidia calls Next Generation Streaming Multiprocessors (SMX).

The structure of a compute unit is shown in a separate figure. Each compute unit contains 192 processing elements (PE), which Nvidia calls cores (stream processors).

The Tesla K40 therefore has a total of 2880 processing elements (15 CU * 192 PE per CU).

Kernel execution

A kernel that runs on the GPU must be written so that it explicitly specifies what each thread does. We will see how to do this later. When a kernel is launched on the GPU, the thread scheduler, labeled GigaThread Engine, first distributes the threads among the compute units (CU). Within each compute unit, an internal scheduler then distributes the threads among the processing elements. The number of threads that the GPU can schedule and execute simultaneously is limited, as is the number of threads that an individual compute unit can schedule and execute. The next section describes thread scheduling and execution, and their limits, in more detail.

Execution model

Graphics processing units with a large number of processing elements are ideal for accelerating data-parallel problems. These are problems in which the same operation is performed on a large number of different data items. Typical data-parallel problems are computations on large vectors, matrices, or images, where the same operation is performed on thousands or even millions of data items simultaneously. To take advantage of the massive parallelism that GPUs offer, we need to divide our programs into thousands of threads. As a rule, a thread on a GPU performs a sequence of operations on one particular data item (for example, one element of a matrix), and this sequence is usually independent of the same operations that other threads perform on other data. A program written in this way can be transferred to the GPU, where the internal schedulers distribute the threads among compute units (CU) and processing elements (PE). In OpenCL terminology, a thread is called a work-item.
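The idea of one thread per data item can be illustrated with a short sketch. The Python code below is only a sequential emulation, not OpenCL: the names `vector_add_work_item` and `launch` are hypothetical, and a real GPU would run the per-item calls in parallel and in no guaranteed order.

```python
# Sequential emulation of a data-parallel launch (illustration only, not OpenCL).

def vector_add_work_item(gid, a, b, c):
    """Work done by one thread (work-item): a single element, independent of all others."""
    c[gid] = a[gid] + b[gid]


def launch(kernel, global_size, *args):
    """Hypothetical stand-in for a GPU launch: calls the kernel once per work-item.

    Here the calls run one after another; on a GPU they would run in parallel.
    """
    for gid in range(global_size):
        kernel(gid, *args)


a = [1.0, 2.0, 3.0, 4.0]
b = [10.0, 20.0, 30.0, 40.0]
c = [0.0] * len(a)

# One work-item per vector element.
launch(vector_add_work_item, len(a), a, b, c)
```

Because each work-item touches only its own index, the result does not depend on the order in which the work-items run, which is what makes the problem data parallel.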

Threads are scheduled on the GPU in two steps:

- The programmer must explicitly divide the threads into work-groups within the program. Threads in the same work-group are executed on the same compute unit. Because compute units have built-in local memory that is accessible to all threads running on them, these threads can exchange data quickly and easily without using the slower global GDDR5 memory on the GPU. In addition, threads on the same compute unit can be synchronized with each other quite easily. The main scheduler on the GPU distributes the work-groups evenly across all compute units. Work-groups are assigned to compute units completely independently of each other and in any order. Several work-groups can be sent to one compute unit for execution (today typically at most 16).

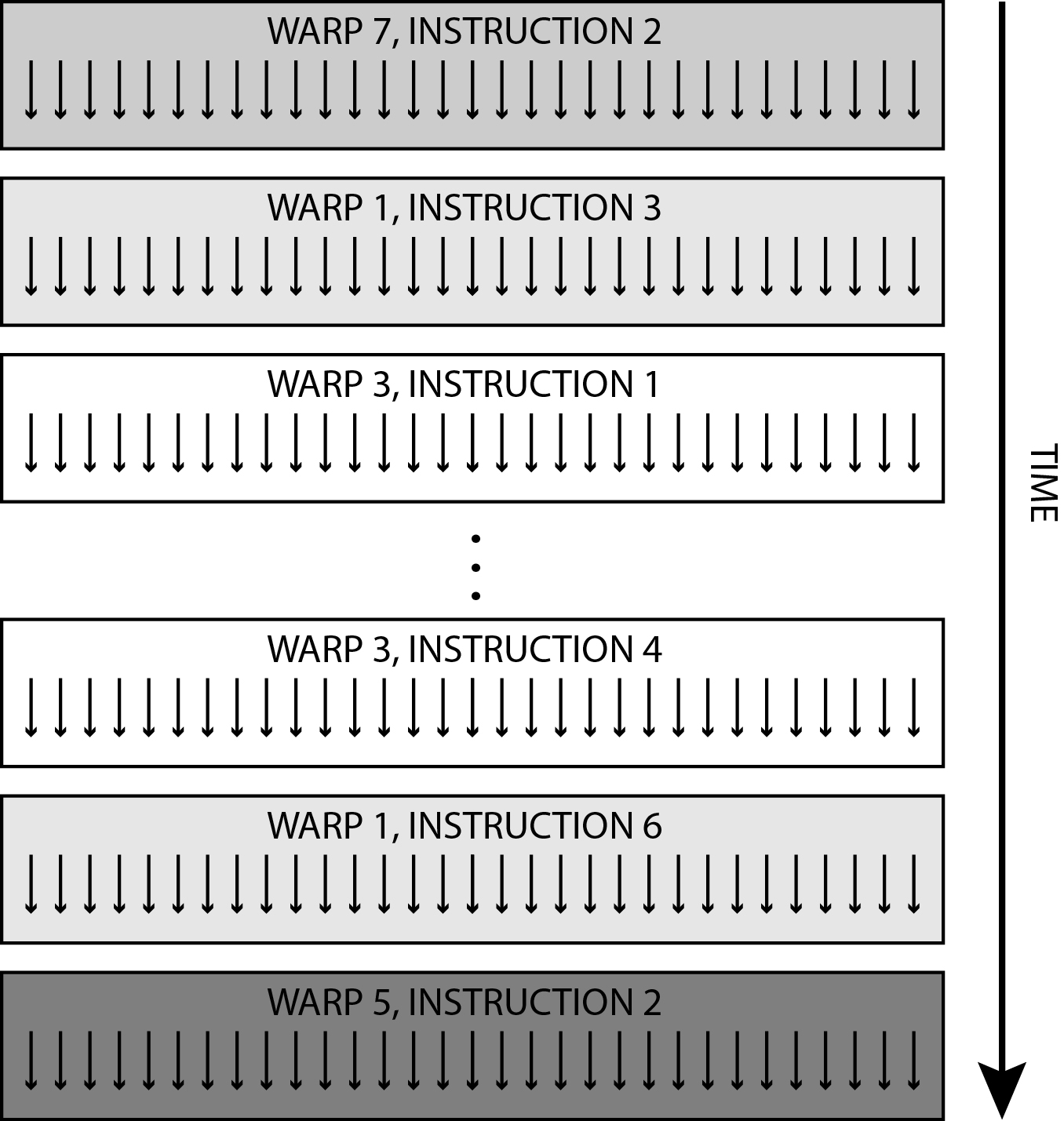

- The scheduler in the compute unit sends threads from the same work-group to the processing elements for execution. In each cycle it issues exactly 32 threads for execution. We call these 32 threads a warp. A warp always contains 32 consecutive threads from the same work-group. All threads in a warp start at the same place in the program, but each has its own registers, including the program counter. Threads within a warp can therefore branch independently, although this is not desirable. Warp execution is most efficient when all threads execute the same sequence of instructions on different data (lock-step execution). If the threads in a warp execute jump instructions and branch differently, their execution is serialized until they all reach the same instruction again, which greatly reduces efficiency. As programmers, we must therefore avoid unnecessary branches (if-else statements) inside a thread. The execution of threads in warps is shown in the figure below.
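The cost of branching inside a warp can be estimated with a toy model. The sketch below assumes that a warp needs one execution pass for every distinct path taken by its 32 threads; this is a simplification for illustration, and the function name `serialized_passes` is hypothetical.

```python
WARP_SIZE = 32  # threads per warp, as stated in the text


def serialized_passes(work_group_size, branch_of):
    """Count execution passes per warp in a toy divergence model.

    branch_of(local_id) returns a label for the path a thread takes.
    Assumption of this sketch: a warp needs one pass per distinct path
    taken by its threads, so a value of 1 means no divergence.
    """
    passes = []
    for start in range(0, work_group_size, WARP_SIZE):
        end = min(start + WARP_SIZE, work_group_size)
        paths = {branch_of(local_id) for local_id in range(start, end)}
        passes.append(len(paths))
    return passes


# Neighbouring threads take different paths: every warp diverges.
divergent = serialized_passes(128, lambda i: i % 2)

# The condition is constant within each warp: no warp diverges.
uniform = serialized_passes(128, lambda i: (i // WARP_SIZE) % 2)
```

In this model the first condition costs two passes in every warp, while the second costs one, even though both send half of the threads down each path. When a branch cannot be avoided, it helps to make the condition depend on the warp rather than on the individual thread.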

The number of threads in one work-group has an upper limit. There are also limits on the number of work-groups that can be assigned to one compute unit at the same time and on the number of threads that can be allocated to one compute unit. For the Tesla K40, the maximum number of threads in a work-group is 1024, the maximum number of work-groups on one compute unit is 16, and the maximum number of threads that can be executed on one compute unit is 2048. It follows from the last limit that a compute unit can hold at most 64 (2048/32) warps.


In practice we usually use smaller work-groups of 128 or 256 threads. This lets several work-groups run on a compute unit at once (8 work-groups of 256 threads or 16 work-groups of 128 threads, but only 2 work-groups of 1024 threads), which gives the scheduler more freedom, since it executes warps from different work-groups in any order. This matters most when the threads of one work-group are waiting at a barrier (synchronization): the scheduler can then issue a warp from another work-group.
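The numbers above follow directly from the Tesla K40 limits quoted in the text. The sketch below reproduces them; it considers only the limits on threads, warps, and work-groups per compute unit, and ignores other resources (such as registers and local memory) that can reduce the count further. The function names are hypothetical.

```python
WARP_SIZE = 32                 # threads per warp
MAX_THREADS_PER_CU = 2048      # Tesla K40 limit quoted in the text
MAX_GROUPS_PER_CU = 16         # Tesla K40 limit quoted in the text
MAX_THREADS_PER_GROUP = 1024   # Tesla K40 limit quoted in the text
MAX_WARPS_PER_CU = MAX_THREADS_PER_CU // WARP_SIZE  # 64


def warps_per_group(group_size):
    """Warps needed by one work-group (a partly filled warp still counts as one)."""
    return -(-group_size // WARP_SIZE)  # ceiling division


def resident_work_groups(group_size):
    """Work-groups that fit on one compute unit at the same time.

    Simplified model: only the warp and work-group limits are considered.
    """
    if not 1 <= group_size <= MAX_THREADS_PER_GROUP:
        raise ValueError("work-group size must be between 1 and 1024")
    by_warps = MAX_WARPS_PER_CU // warps_per_group(group_size)
    return min(MAX_GROUPS_PER_CU, by_warps)


def resident_warps(group_size):
    """Warps available to the schedulers of one compute unit."""
    return resident_work_groups(group_size) * warps_per_group(group_size)
```

For example, `resident_work_groups(256)` gives 8 and `resident_work_groups(1024)` gives 2. Very small work-groups run into the limit of 16 work-groups before they fill the compute unit: with 64 threads per work-group only 32 of the 64 possible warps are resident.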

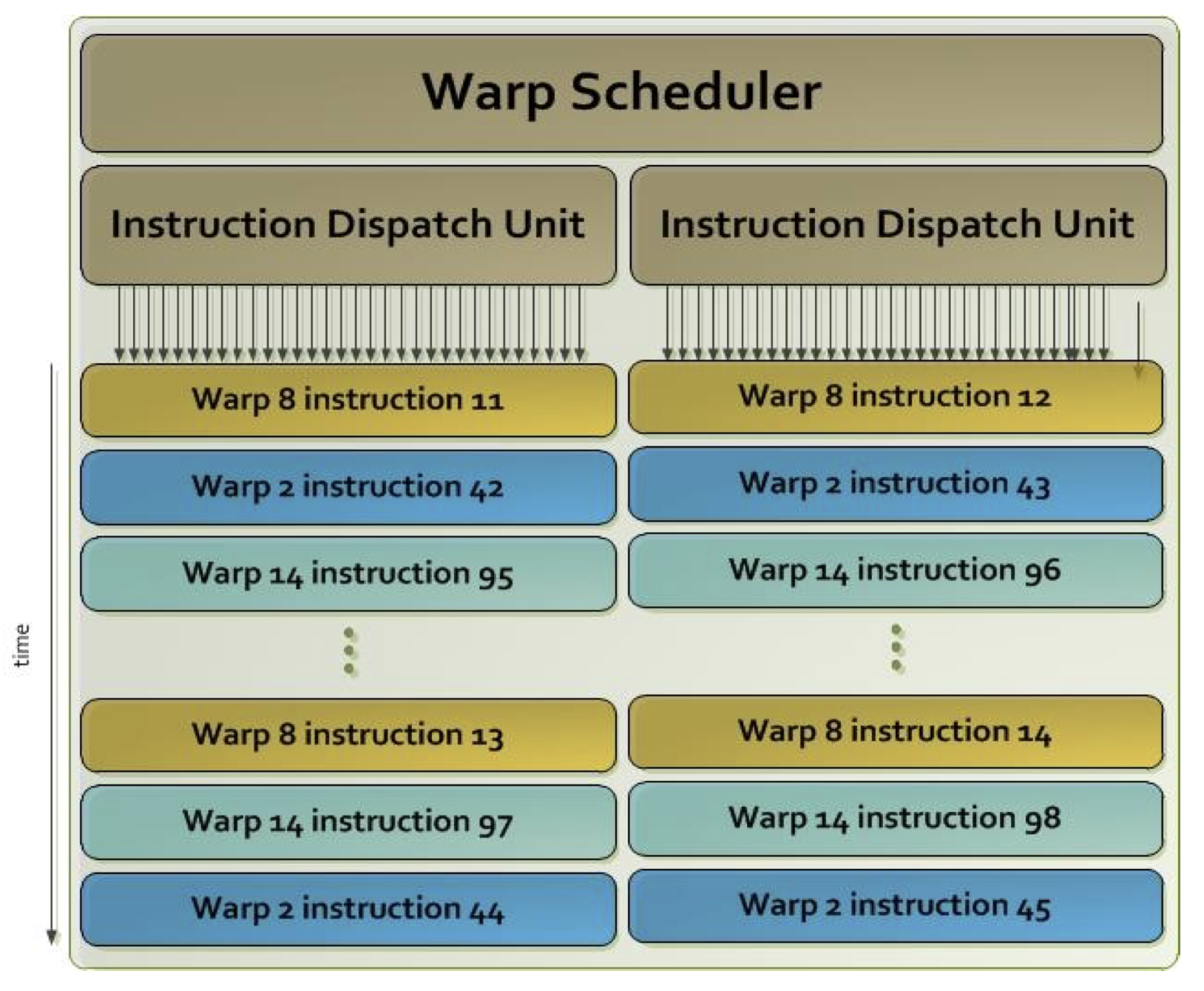

The compute unit of the Tesla K40 GPU is shown in the figure. Each compute unit has four warp schedulers, so four warps can be executed at the same time. In addition, each warp scheduler has two dispatch units and issues two instructions at a time from the same warp, so a compute unit can issue up to eight instructions at a time. In this way, the designers aimed to keep the processing elements occupied as much as possible. Although we have not mentioned them so far, in addition to the 192 processing elements each compute unit contains 64 units for double-precision floating-point arithmetic (DP units) and 32 units for special operations such as trigonometric functions (SFU, Special Function Unit).

A further figure shows the operation of one warp scheduler. We see that two instructions from the same warp are issued at any given time. The order in which the warps execute is arbitrary and usually depends on when their operands are ready.

The table below summarizes the maximum values of individual parameters in the Tesla K40.

| Limitations in Tesla K40 | Value |
| --- | --- |
| Number of threads in a warp | 32 |
| Maximum number of warps per compute unit | 64 |
| Maximum number of threads per compute unit | 2048 |
| Maximum number of threads per work-group | 1024 |
| Maximum number of work-groups per compute unit | 16 |
| Maximum number of registers per thread | 255 |
| Maximum size of local memory per compute unit | 48 kB |

Memory hierarchy

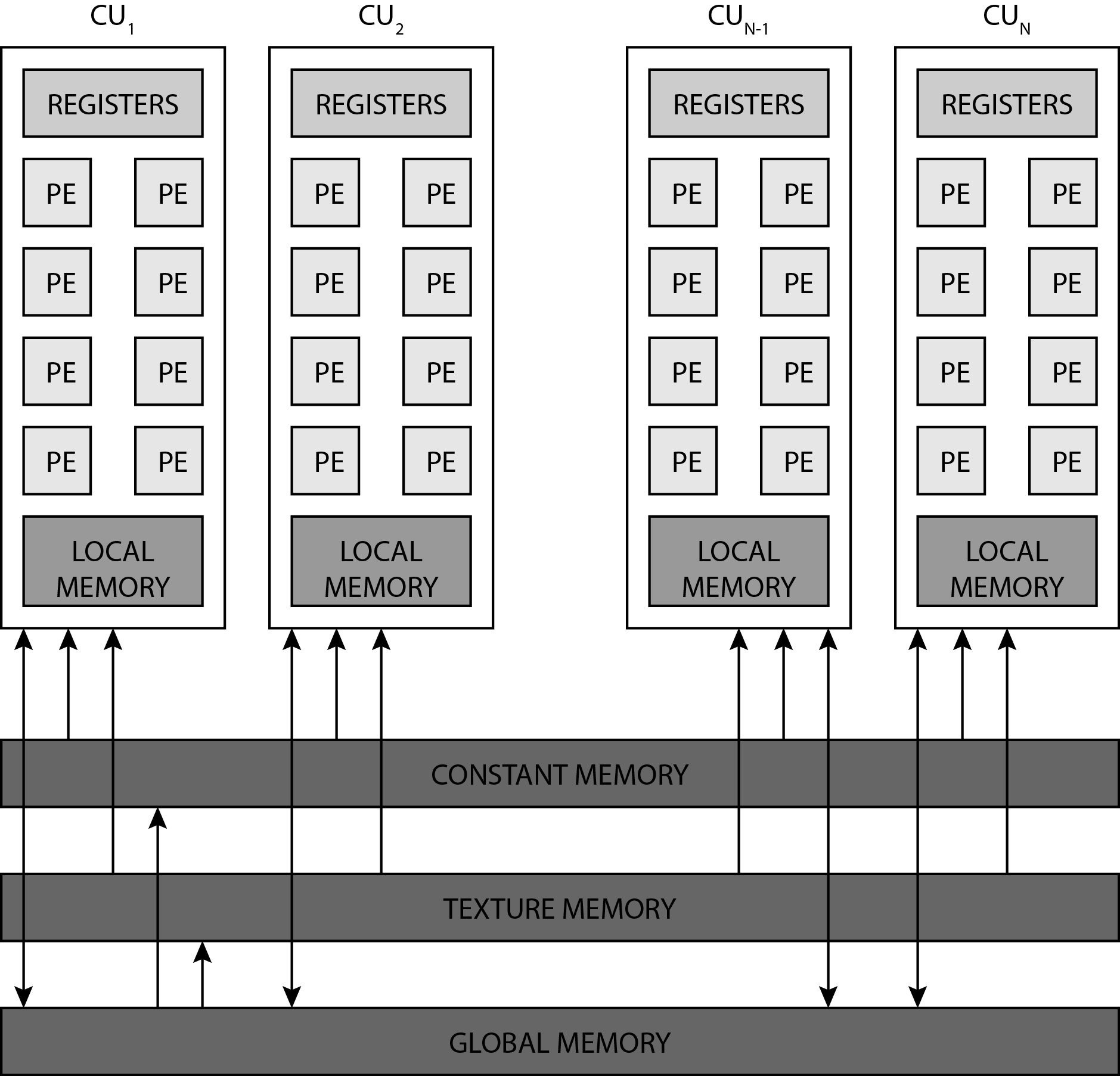

GPUs contain several kinds of memory, which are accessed by individual processing elements or individual threads. The memory hierarchy on the GPU is shown in the figure.

Registers

Each compute unit (CU) contains several thousand registers, which are evenly distributed among the individual threads. The registers are private to each thread. A compute unit in the Tesla K40 has 65,536 registers, and each thread can use at most 255 of them. Threads keep their most frequently accessed operands in registers. Suppose we have 120 work-groups with 128 threads each. On the Tesla K40 with 15 compute units, each compute unit receives eight work-groups (120/15 = 8), so each thread can use up to 64 registers (65536 / (8 * 128) = 64).

Local memory

Each compute unit has a small, fast SRAM (static RAM) memory, which is usually divided automatically into two parts at program startup:

- first-level cache (L1) and

- local memory that can be used by the threads running on that compute unit.

On the Tesla K40 accelerator, this memory is 64 kB in size and can be split in three different ways:

- 16 kB L1 and 48 kB local memory,

- 32 kB L1 and 32 kB local memory,

- 48 kB L1 and 16 kB local memory.

As a rule, local memory is used for communication between threads within the same compute unit (threads exchange data through local memory).

Global memory

Global memory is the largest memory on the GPU and is shared by all threads and all compute units. On the Tesla K40 it is implemented as GDDR5. Like all dynamic memories, it has a long access time (several hundred clock cycles). Global memory on the GPU is always accessed in larger blocks, or segments; on the Tesla K40 a segment is 128 bytes. Why access memory in segments? Because the GPU makes the threads of a warp access global memory simultaneously. This kind of access pushes us towards data-parallel programming and has important consequences. If the threads of a warp access adjacent 8-, 16-, 24-, or 32-bit data in global memory, that data is delivered to the threads in a single memory transaction, because it forms a single segment. If only one thread of the warp accesses data in global memory (for example, because of branching), the entire segment is still accessed and the unneeded data is discarded. If two threads of the same warp access data that belong to two different segments, two memory accesses are required. When writing multithreaded programs it is therefore very important to group memory accesses into segments (memory coalescing): we try to make all threads of a warp access consecutive data in memory.

Constant and texture memories

Constant and texture memories are just separate areas of global memory in which we store constant data, but they have additional properties that make access to data in these two areas easier:

- constant data in constant memory is always cached, and this memory can send a single value to all threads of a warp at once (broadcasting),

- constant data (in practice, images) in texture memory is always cached in a cache optimized for 2D access; this speeds up access to textures and constant images in global memory.
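Returning to global memory access: the segment rule described in the global memory section can be made concrete with a small model. The sketch below assumes that one memory transaction is needed for every distinct 128-byte segment touched by the 32 threads of a warp; this is a simplification for illustration, and the function names are hypothetical.

```python
SEGMENT_BYTES = 128  # segment size on the Tesla K40, as stated in the text
WARP_SIZE = 32       # threads per warp


def segments_touched(byte_addresses):
    """Number of distinct 128-byte segments hit by the addresses of one warp."""
    return len({address // SEGMENT_BYTES for address in byte_addresses})


def warp_addresses(base, stride_elems, elem_bytes=4):
    """Byte address accessed by each of the 32 threads for a strided access pattern."""
    return [base + lane * stride_elems * elem_bytes for lane in range(WARP_SIZE)]


# Consecutive 4-byte elements starting on a segment boundary: one segment.
coalesced = segments_touched(warp_addresses(0, 1))

# Each thread reads an element 128 bytes away from its neighbour: 32 segments.
strided = segments_touched(warp_addresses(0, 32))

# Consecutive elements that start in the middle of a segment: two segments.
misaligned = segments_touched(warp_addresses(64, 1))
```

In this model the strided pattern needs 32 times as many transactions as the coalesced one for the same amount of useful data, which is why consecutive threads should access consecutive data wherever possible.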