Section outline

-

-

Addition of vectors

Let’s start with a simple example of adding two vectors. We will solve the problem in two ways. First, we’ll start out quite naively and focus only on the basics of GPU programming and the steps on the host that are needed to run our first program on GPU. In the second mode, we will then focus on the kernel improvement implemented on the GPU.

The code below shows the implementation of a function in C for summing two vectors whose elements are real numbers, represented by floating-point notation with single precision (float).1 2 3 4 5 6 7 8 9 10 11 12 13

// add the elements of two arrays void VectorAdd(float *vecA, float *vecB, float *vecC, int iNumElements) { int iGID = 0; while (iGID < iNumElements) { vecC[iGID] = vecA[iGID] + vecB[iGID]; iGID += 1; } }The arguments of the function are the addresses of all three vectors (vecA, vecB, vecC) and the number of elements in the vectors (iNumElements). The vectors are summed by summing all the identical elements in the input vectors vecA and vecB and saving the result in the equilateral element of the vector vecC. Since the vectors have iNumElements elements, we will add the vectors in a loop that will repeat the iNumElements times.

In the loop, individual elements of the vector are indexed with the integer index iGID. We chose the name on purpose (Global Index), the reason for such a choice will be known to us soon.A naive attempt to add vectors

We would now like to run the VectorAdd() function on the GPU. We realized that GPUs are ideal for performing tasks where we have a lot of data parallelism - in our case this is even more true, since we perform the same operation (addition) over all elements of vectors. In addition, these operations are independent of each other (the elements of the vectors can be summed in any order) and can thus be summed in parallel.Kernel

In previous chapters, we learned that programs for GPU (kernels) are written in such a way that they can be executed (simultaneously) by all threads from the NDRange space (this will of course be defined below) - these are all threads that will be run on GPU . Therefore, we need to divide the work done by the VectorAdd() function as evenly as possible between the threads. In our case, this is a fairly simple task, as we will divide the work to begin with so that each thread will add only the same elements. Therefore, we will run as many threads on the GPU as there are elements in the vectors - in our case, this is the iNumElements thread.

All the iNumElements threads that we will run are made up of NDRange space. We have already mentioned that this space can be one-, two-, or three-dimensional and that we adjust the dimension of the space to the data. Since our vectors are one-dimensional fields, we will organize the NDRange space in our case in one dimension (X). The global size of this space will be iNumElements. Therefore, the global index of each thread that will run on the GPU will be uniquely determined from that space and will actually correspond to the index of the elements that the thread adds up. The kernel that all threads will run on the GPU is shown in the code below:1 2 3 4 5 6 7 8 9 10 11 12

__kernel void vecadd_naive ( __global float* vecA, __global float* vecB, __global float* vecC, int iNumElemements){ int iGID = get_global_id(0); if (iGID < iNumElemements) { vecC[iGID] = vecA[iGID] + vecB[iGID]; } }We see that the kernel code is very similar to the VectorAdd() function code. Each function we want to perform on the GPU must be declared as a kernel (__kernel). We named the kernel vecadd_naive (), and its arguments are the titles of the vectors and the number of elements in the vectors. The vector addresses this time have the __global specifier, which determines that these addresses refer to the global memory on the GPU. The vectors we want to add to the GPU must be stored on the GPU, because the compute units on the GPU cannot address the main memory on the host. Because vectors can be quite large, global memory is the only suitable memory space for storing vectors. In addition, all compute units can address global memory and therefore the vector elements will be accessible to all threads, regardless of which compute unit each thread will run on (we have no influence on this anyway).

Each thread first determines its global iGID index in the NDRange space with the get_global_id(0) function. Argument 0 when calling a function specifies that we require an index in dimension X. Then each thread sums identical elements in vectors whose index is equal to its index, but only if its index is less than or equal to the number of elements in vectors (so in a kernel if sentence). In this way we provide two:

- the data with which each thread works are uniquely determined for each thread and there is no possibility that two threads might try to write to the same element of the vector vecC,

- memory access is grouped into segments where all threads in the same bundle access sequential 8-, 16-, 24-, or 32-bit data in memory (memory coalescing).

A detailed description of the OpenCL C programming language can be found on The OpenCL C Specification website.Host program

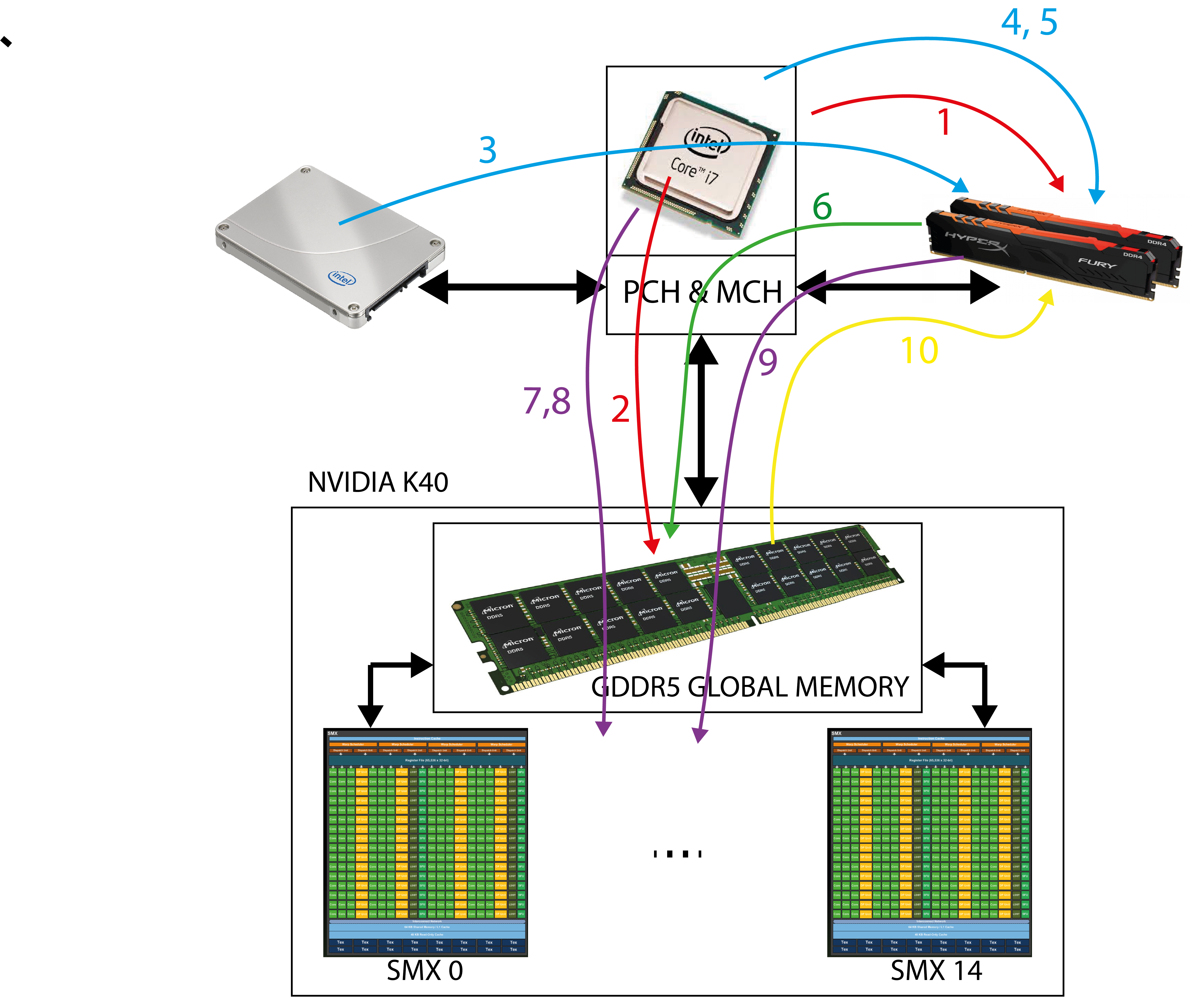

Once we have written the kernel, we need to translate it first. We translate the pins for the selected device, so we must first select the platform, the device within the platform, create the context and command line for each device as we learned in the previous chapter. With this, the work of the program on the host is far from over. The latter must now perform the following tasks:

- reserve space in main memory and initialize data on the host,

- reserve space in the device's global memory where we will store our vectors,

- read the device application from file to memory,

- compile a device program - the device program will be translated during the execution of the program on the host,

- create a kernel from the translated program, which we will run on the selected device,

- transfer data from the host to the device

- set kernel arguments,

- set the size and organization of the NDRange thread space,

- run the kernel on the device and

- read data from the global memory on the device after the kernel execution is complete.

The image above shows all the steps that a program must perform on the host before and after running the pins on the GPE device.

The image above shows all the steps that a program must perform on the host before and after running the pins on the GPE device.Initialize data on the host

The program on the host must initialize all the data needed to compute on the device. We need to be aware that the host can only access its main memory and for now, all the data initialized by the host will be in the main memory. In our case, we need to reserve space for all three vectors and initialize the vectors we want to add:1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

cl_float* vecA_h; cl_float* vecB_h; cl_float* vecC_h; int iNumElements = 256*4; // works for vectors with up to 1024 elements // Allocate host arrays vecA_h = (void *)malloc(sizeof(cl_float) * iNumElements); vecB_h = (void *)malloc(sizeof(cl_float) * iNumElements); vecC_h = (void *)malloc(sizeof(cl_float) * iNumElements); // init arrays: for (int i = 0; i<iNumElements; i++ ) { vecA_h[i] = 1.0; vecB_h[i] = 1.0; }We added _h to the names of the vectors we store on the host to emphasize that this is the data on the host. All elements of the input vectors on the host were set to 1.Reserve space in the device's global memory

We now need to reserve space in the device’s global memory where we will store all the vectors that occur in the computation. We use the clCreateBuffer() function to reserve space in the device's global memory. This reserves a datasize size space. When reserving space in the global memory, specify whether the device will only read from the reserved space (CL_MEM_READ_ONLY), only write to it (CL_MEM_WRITE_ONLY) or will have read and write access (CL_MEM_READ_WRITE). The clCreateBuffer() function returns a handler (type cl_mem) to a memory object (reserved space) in the device's global memory.Remember that the host (CPU) does not have direct access to this memory so never try to use this handler as a pointer and dereference it to the host!1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39

//*************************************************** // Create device buffers //*************************************************** cl_mem vecA_d; // Input array on the device cl_mem vecB_d; // Input array on the device cl_mem vecC_d; // Output array on the device // Size of data: size_t datasize = sizeof(cl_float) * iNumElements; // Use clCreateBuffer() to create a buffer object (d_A) // that will contain the data from the host array A vecA_d = clCreateBuffer( context, CL_MEM_READ_ONLY, datasize, NULL, &status); clerr_chk(status); // Use clCreateBuffer() to create a buffer object (d_B) // that will contain the data from the host array B vecB_d = clCreateBuffer( context, CL_MEM_READ_ONLY, datasize, NULL, &status); clerr_chk(status); // Use clCreateBuffer() to create a buffer object (d_C) // with enough space to hold the output data vecC_d = clCreateBuffer( context, CL_MEM_WRITE_ONLY, datasize, NULL, &status); clerr_chk(status);Reading kernelKernels are written in files with the .cl extension. In our case, the kernel.cl file will only contain a kernel of vecadd_naive(). In general, the kernel.cl file could contain any number of pliers and the functions that these pins call. We need to compile the program for the selected device, so we do this when the host already has all the information about the platform and devices. Before compiling, we need to read the program from the kernel.cl file into the host memory and then create a program object suitable for translation from it. We do this with the code below.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35

//*************************************************** // Create a program object for the context //*************************************************** FILE* programHandle; // File that contains kernel functions size_t programSize; char *programBuffer; cl_program cpProgram; // 6 a: Read the OpenCL kernel from the source file and // get the size of the kernel source programHandle = fopen("kernel.cl", "r"); fseek(programHandle, 0, SEEK_END); programSize = ftell(programHandle); rewind(programHandle); printf("Program size = %lu B \n", programSize); // 6 b: read the kernel source into the buffer programBuffer // add null-termination-required by clCreateProgramWithSource programBuffer = (char*) malloc(programSize + 1); programBuffer[programSize] = '\0'; // add null-termination fread(programBuffer, sizeof(char), programSize, programHandle); fclose(programHandle); // 6 c: Create the program from the source // cpProgram = clCreateProgramWithSource( context, 1, (const char **)&programBuffer, &programSize, &status); clerr_chk(status); free(programBuffer);First we open the kernel.cl file and read the code from it and save it as a sequence of characters in the program string Buffer. Only then, with the clCreateProgramWithSource() function, do we read the programBuffer character string and create the appropriate translation object cpProgram from it.Compile the program for the selected GPE device

The translation of the read program, which is stored in the cpProgram object, is translated with the clBuildProgram() function, as shown in the code below.1 2 3 4 5 6 7 8 9 10 11 12

//*************************************************** // Build the program //*************************************************** status = clBuildProgram( cpProgram, 0, NULL, NULL, NULL, NULL); clerr_chk(status);We must be aware that only now, ie during the execution of the program on the host, the kernel, which we read from the kernel.cl file, is translated. Any errors in the code in kernel.cl will only appear now, during translation, and not when compiling the host program. Therefore, in the event of translation errors (for the clBuildProgram() function), they must be stored in a data set in main memory and displayed if necessary. This can be done with the clGetProgramBuildInfo() function:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29

if (status != CL_SUCCESS) { size_t len; printf("Error: Failed to build program executable!\n"); // Firstly, get the length of the error message: status = clGetProgramBuildInfo(cpProgram, devices[0], CL_PROGRAM_BUILD_LOG, 0, NULL, &len); clerr_chk(status); // allocate enough memory to store the error message: char* err_buffer = (char*) malloc(len * sizeof(char)); // Secondly, copy the error message into buffer status = clGetProgramBuildInfo(cpProgram, devices[0], CL_PROGRAM_BUILD_LOG, len * sizeof(char), err_buffer, NULL); clerr_chk(status); printf("%s\n", err_buffer); free(err_buffer); exit(1); }The clGetProgramBuildInfo() function is called twice. First, to determine the length of the error message, and second, to read the entire error message. The following is an example of an error printout in the case where we used an undeclared name for vector B in a kernel:

Error: Failed to build program executable! <kernel>:9:35: error: use of undeclared identifier 'vecBB'; did you mean 'vecB'? vecC[myID] = vecA[myID] + vecBB[myID]; ^~~~~ vecB <kernel>:2:33: note: 'vecB' declared here __global float* vecB, ^Creating a kernel from a translated program

In general, our translated program could contain more kernels. So now we only create a kernel of vecadd_naive() from the program that we want to run on the device. We do this with the clCreateKernel() function.1 2 3 4 5 6 7 8 9 10 11

//*************************************************** // Create and compile the kernel //*************************************************** cl_kernel ckKernel; // Create the kernel ckKernel = clCreateKernel( cpProgram, "vecadd_naive", &status); clerr_chk(status);Data transfer to the device

The vectors that we initialized in the first step are transferred from the host's main memory to previously created memory objects in the device's global memory. Data transfer is actually triggered by writing the appropriate command to the cmdQueue device command line. We do this with the clEnqueueWriteBuffer() function.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30

//*************************************************** // Write host data to device buffers //*************************************************** // Use clEnqueueWriteBuffer() to write input array A to // the device buffer bufferA status = clEnqueueWriteBuffer( cmdQueue, vecA_d, CL_FALSE, 0, datasize, vecA_h, 0, NULL, NULL); clerr_chk(status); // Use clEnqueueWriteBuffer() to write input array B to // the device buffer bufferB status = clEnqueueWriteBuffer( cmdQueue, vecB_d, CL_FALSE, 0, datasize, vecB_h, 0, NULL, NULL); clerr_chk(status);Setting kernel arguments

In order to run the selected kernel vecadd_naive(), we need to set arguments to it. To do this, use the clSetKernelArg() function.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22

//*************************************************** // Set the kernel arguments //*************************************************** // Set the Argument values status = clSetKernelArg(ckKernel, 0, sizeof(cl_mem), (void*)&vecA_d); status |= clSetKernelArg(ckKernel, 1, sizeof(cl_mem), (void*)&vecB_d); status |= clSetKernelArg(ckKernel, 2, sizeof(cl_mem), (void*)&vecC_d); status |= clSetKernelArg(ckKernel, 3, sizeof(cl_int), (void*)&iNumElements); clerr_chk(status);The second argument of the clSetKernelArg() function indicates the order of the argument when the clip is called.Setting up and organizing the NDRange space

We are just about to run the threads that will execute the selected kernel on the GPU device. We still need to determine how many threads we will run on the GPU device, how these threads will be organized into workgroups, and how the threads and workgroups will be organized in the NDRange space. We call this the NDRange space setting. For now, we will be working in the one-dimensional NDRange space, so its size and organization are determined by the following variables szLocalWorkSize and szGlobalWorkSize:1 2 3

// set and log Global and Local work size dimensions const size_t szLocalWorkSize = 128; const size_t szGlobalWorkSize = iNumElements;

In the code above, we specified that we want to have 128 threads in the workgroup and that we want to run as many threads as the long vectors (iNumElements).

Launch the kernel on the selected GPU deviceTo start the kernel on the selected device, write the command to run the kernel and the description of the NDRange space in the cmdQueue command line. We do both with the clEnqueueNDRangeKernel() function.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16

//*************************************************** // Enqueue the kernel for execution //*************************************************** // Launch kernel status = clEnqueueNDRangeKernel( cmdQueue, ckKernel, 1, NULL, &szGlobalWorkSize, &szLocalWorkSize, 0, NULL, NULL); clerr_chk(status);In the code above, we said that we want to run a kernel of ckKernel, that the NDRange space has dimension 1, that its global size is szGlobalWorkSize, and that the threads are organized into szLocalWorkSize size workgroups.At this point, it should be noted that when we stop a command in the command line (for example, to transfer data or run a kernel), we have no influence on when the command will actually be executed. The clEnqueueWriteBuffer() and clEnqueueNDRangeKernel() functions are non-blocking and terminate immediately. So we don’t really know when the data will be transferred, when the kernel starts and ends running on the device. The type only provides us with the order of execution of commands in it. Thus, we can argue that the kernel will certainly not start running until the data is transferred from the host to the device.Reading data from GPU device

When the kernel is complete, the vector with the sums is transferred from the device's global memory to the host's main memory.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20

//*************************************************** // Read the output buffer back to the host //*************************************************** // Synchronous/blocking read of results status = clEnqueueReadBuffer( cmdQueue, vecC_d, CL_TRUE, 0, datasize, vecC_h, 0, NULL, NULL); clerr_chk(status); // Block until all previously queued OpenCL commands in a command-queue // are issued to the associated device and have completed clFinish(cmdQueue);But how do we now know when the data will actually be copied and when we can access it? To do this, call the clFinish() function, which blocks the execution of the main program until the cmdQueue command type is emptied.

Erasing memory on the host

At the end of the program, we still need to free up all the reserved memory space on the host.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20

//************************************************* // Cleanup //************************************************* if (srcA_h) free(vecA_h); if (srcB_h) free(vecA_h); if (srcC_h) free(vecA_h); if (platforms) free(platforms); if (devices) free(devices); if(ckKernel) clReleaseKernel(ckKernel); if(cpProgram) clReleaseProgram(cpProgram); if(cmdQueue) clReleaseCommandQueue(cmdQueue); if(context) clReleaseContext(context); if(srcA_d) clReleaseMemObject(srcA_d); if(srcB_d) clReleaseMemObject(srcB_d); if(srcC_d) clReleaseMemObject(srcC_d);The full code from this chapter can be found in the 02-vector-add-short folder here.

Addition of arbitrarily long vectors

The vecadd_naive() kernel we used to add the vectors has one drawback - each thread that the vecadd_naive() kernel performs will calculate only one sum. Recall that the number of threads that make up a workgroup is limited and that the number of threads that run simultaneously on one unit of account is also limited. With the Tesla K40 device, the maximum number of threads that are executed on one calculation unit at a time is 2048. Since the Tesla K40 device has only 15 calculation units, the maximum number of threads that can be executed at one time is 30,720. If we run more than that many threads, the driver will have to serialize their execution on the GPU. Therefore, it is much better to determine the maximum number of threads that we run from how many threads we will put in one workgroup, how many groups can be executed on one unit of account at a time, and how many units of account are on the GPU. In this case, we will probably have a smaller number of threads than the length of the vectors. We solve the problem with the bottom kernel.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

__kernel void VectorAdd_arbitrary(__global float* vecA, __global float* vecB, __global float* vecC, int iNumElements) { // get index into global data array int iGID = get_global_id(0); int iGS = get_global_size(0); while (iGID < iNumElements) { //add the vector elements vecC[iGID] = vecA[iGID] + vecB[iGID]; iGID = iGID + iGS; } }Now, each thread will first determine its global iGID index and the global size of the NDRange iGS space (this is actually the number of all active threads). It will then add the identical elements in the while loop by adding two identical elements in one iteration and then increasing the iGID by as many as all the active threads. Thus, it will move to the next two identical elements and add them up. The loop ends when the iGID index exceeds the size of the vectors.

Surely you are wondering why one thread would not add up an adjacent pair of identical elements or 4 adjacent pairs of identical elements? The reason lies in the memory access mode! Recall that memory is accessed in segments. To ensure coordinated memory access, two adjacent threads in the bundle must access two adjacent memory words.

The program on the host stays the same this time - only the second kernel needs to be loaded. You can also try to play with different vector sizes and different NDRange space settings yourself.

The full code from this chapter can be found in the 04-vector-add-arb folder here. -

Scalar product of vectors

Let’s try to play around with a little more interesting problem now. This time, instead of adding the vectors, we will program the scalar product of two vectors - we first multiply the identical elements with each other, and then we add the products.A naive approach

The first implementation of a scalar product will be similar to the summation of vectors. The idea is that threads on the GPU work products between identical elements of vectors, while the host sums all partial products. The kernel for the products of identical elements of vectors is:1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16

__kernel void DotProd_naive(__global float* vecA, __global float* vecB, __global float* vecC, int iNumElements) { // get index into global data array int iGID = get_global_id(0); int iGS = get_global_size(0); while (iGID < iNumElements) { //add the vector elements vecC[iGID] = vecA[iGID] * vecB[iGID]; iGID = iGID + iGS; } }After completing the kernel, the host must read the product vector from the device and add all its elements in a loop:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23

/ Synchronous/blocking read of results status = clEnqueueReadBuffer( cmdQueue, vecC_d, CL_TRUE, 0, datasize, vecC_h, 0, NULL, NULL); clerr_chk(status); // Block until all previously queued OpenCL commands in a command-queue // are issued to the associated device and have completed clFinish(cmdQueue); // sum of partial products: float result = 0.0; for (int i=0; i<iNumElements; i++) { result += vecC_h[i]; }The full code from this chapter can be found in the 05-dot-product-naive folder here.

Scalar product with reduction and use of local memory

In the above example, the host must add up all the partial products. If the vectors are very long, the host will need a lot of time to transfer the partial products from the device, and then also add the partial products for quite some time. Therefore, we will now present a solution that will add up all the partial products. In doing so, we will learn how threads can use local memory and how they participate in computation. The calculation of the scalar product will consist of three steps:

- N threads will be divided into G working groups. Each thread in the working group will compute the partial products between the identical elements of the vectors and add them to its partial product, which it will store in the local memory. Since we will have L=N/G threads in the workgroup, we will have L partial products stored in the local memory at the end of this step.

- Then, all threads within one working group will work together to add up the L partial products in the working group and store their result in the intended place in the global memory of the device. For G workgroups, we will have at the end of this step G totals of partial products in the global memory of the device.

- We will now transfer only G totals from the device's global memory to the host and add them there. Because the workgroups G are much smaller than the size of the vectors N, the host will transfer significantly less data and have much less work to

add up.

Step 1: partial products in the group

The code below shows the part of the kernel responsible for multiplying the identical elements of the vectors and summing the products.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

int myGID = get_global_id(0); int myLID = get_local_id(0); int myWGID = get_group_id (0); __local float vecC_wg[64]; vecC_wg[myLID] = 0.0; while (myGID < iNumElemements) { vecC_wg[myLID] += vecA[myGID] * vecB[myGID]; myGID += get_global_size (0); } //-- wait at the barrier! barrier(CLK_LOCAL_MEM_FENCE);In our case, we will have 64 threads in one working group. First, in line 5, we declare the field in which each thread in the working group will store the sum of its products:

5

__local float vecC_wg[64];The __local extension specifies that the vecC_wg field is stored in local memory. Although this declaration is specified for each thread in the group, the compiler will create only one field in the local memory of the calculation unit, which will be common to all threads in the workgroup.

Then, in line 7, each thread initializes its element, which will store its sum of partial products.

The myLID value determines the index of the threads in the workgroup. Thus, each thread will initialize only the element of the field that belongs to it.

Now in the while loop (lines 9 to 12)9 10 11 12

while (myGID < iNumElemements) { vecC_wg[myLID] += vecA[myGID] * vecB[myGID]; myGID += get_global_size (0); }each thread multiplies the identical elements of the vectors corresponding to its global index and counts the products in vecC_wg [myLID].

Before we add all the elements of the vecC_wg [myLID] field in the second step, we need to make sure that all the threads in the group have completed the execution of the while loop. We say that the threads have to wait at the barrier. Obstacles in OpenCL are implemented with the barrier function (CLK_LOCAL_MEM_FENCE). The function blocks the further execution of the program until it is called by everyone in the working group. In other words, all threads in the workgroup must call the barrier function (CLK_LOCAL_MEM_FENCE) before any thread can continue running the program.Step 2: sum of partial products in the group

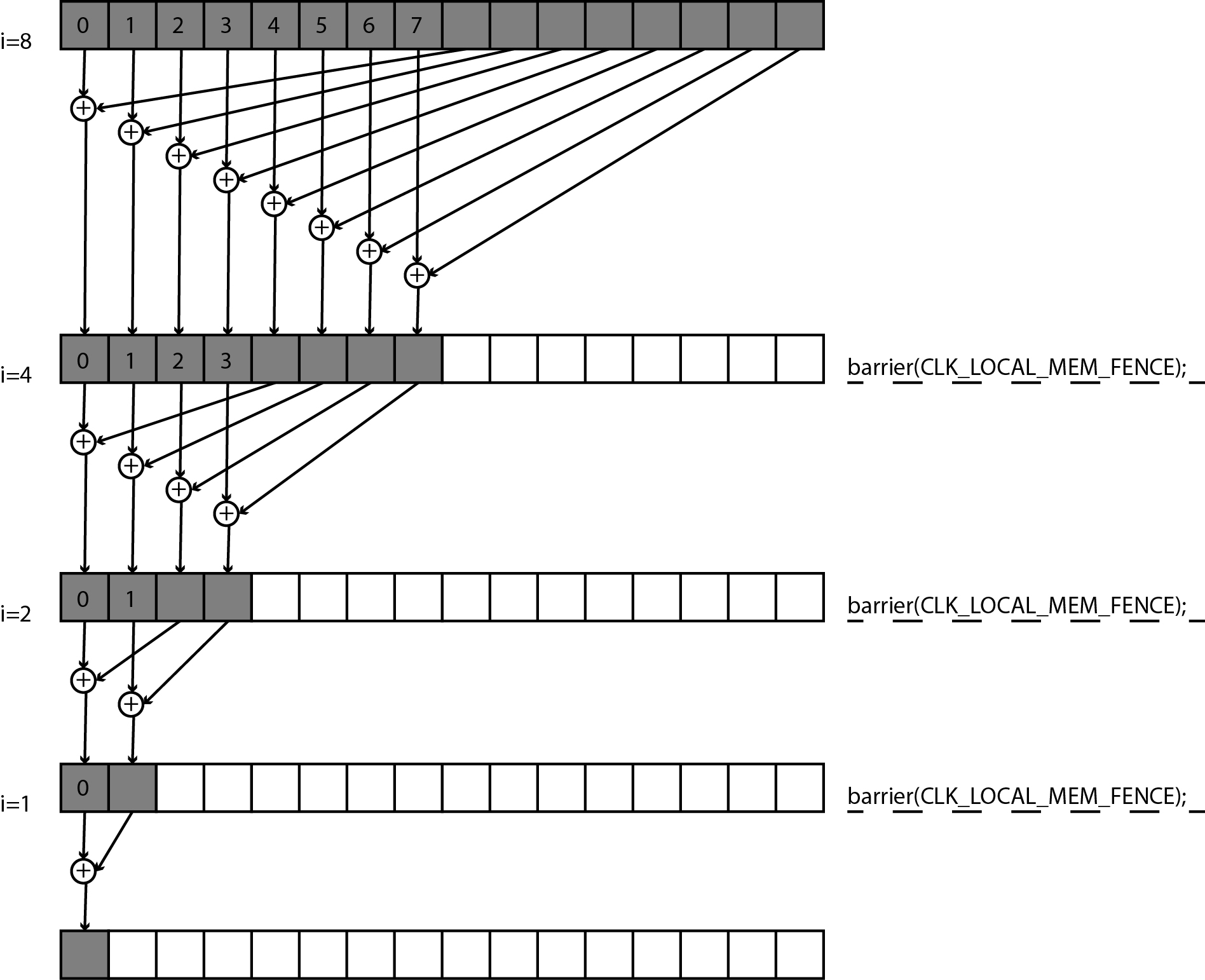

In the second step, we need to add all the elements of the vecC_wg [myLID] field. We would naively solve this by adding only one thread in the working group to all these elements. However, there is a better solution shown in the image below:

We will use a procedure called reduction to add the elements. Suppose we have only 16 elements to add up and 16 threads in a working group. In the first step, each of the first eight threads in the workgroup will add an element with its myLID index and an element with its myLID + 8 index and write the sum in an element with its myLID index. The remaining eight threads in the working group will not do anything. Then all the threads will wait at the obstacle. In the next step, only each of the first four threads will add an element with its myLID index and an element with its myLID + 4 index, the other threads will not add up. Before continuing with the work, all the threads will again wait in front of the obstacle. Thus, we will continue the process until there is only one thread with index 0 left, which will add up the element with index 0 and the element with index 1. The reduction procedure just described is shown by the code below in lines 24 to 34.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40

__kernel void DotProd_reduction (__global float* vecA, __global float* vecB, __global float* localproducts, int iNumElemements) { int myGID = get_global_id(0); int myLID = get_local_id(0); int myWGID = get_group_id (0); __local float vecC_wg[64]; vecC_wg[myLID] = 0.0; while (myGID < iNumElemements) { vecC_wg[myLID] += vecA[myGID] * vecB[myGID]; myGID += get_global_size (0); } //-- wait at the barrier! barrier(CLK_LOCAL_MEM_FENCE); localproducts[myWGID] = 0.0; // Reduction: int i = get_local_size (0) / 2; while (i != 0) { if (myLID < i) { //perform the addition: vecC_wg[myLID] += vecC_wg[myLID + i]; } i = i/2; // wait at the barrier: barrier(CLK_LOCAL_MEM_FENCE); } // only one WI writes the result: if (myLID == 0) { localproducts[myWGID] = vecC_wg[0]; } }An attentive reader will notice that the barrier function (CLK_LOCAL_MEM_FENCE) is called by all threads in the group and not just those that add up. The reason is that the barrier function (CLK_LOCAL_MEM_FENCE) requires that all threads in the group execute it before any thread can continue working. If the barrier function (CLK_LOCAL_MEM_FENCE) were naively placed in a branch (if statement), the execution of the kernel would stop forever at the obstacle, as the threads that reach the obstacle would wait for all the remaining threads in the group that did not perform the branch.

At the end of the reduction, the sum of all elements in the vecC_wg [myLID] field will be written in the vecC_wg[0] element. Finally, a thread with myLID=0 will overwrite this result in the device's global memory in the localproducts field to a location with an index corresponding to the index of the myWGID workgroup (lines 36 to 39):36 37 38 39

// only one WI writes the result: if (myLID == 0) { localproducts[myWGID] = vecC_wg[0]; }The program on the host this time requires minor changes. The partial results that we will read from the device will now be contained in the vecC_d field, which will have only as many elements as there are workgroups:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

const size_t szLocalWorkSize = 64; const size_t szGlobalWorkSize = 2048; int iNumGroups = (int)(szGlobalWorkSize / szLocalWorkSize); size_t datasizeC = sizeof(cl_float) * iNumGroups; // Use clCreateBuffer() to create a buffer object (d_C) // with enough space to hold the output data vecC_d = clCreateBuffer( context, CL_MEM_WRITE_ONLY, datasizeC, NULL, &status); clerr_chk(status);In the code above, we assumed that we run only 2048 threads and that each workgroup has 64 threads.

Finally, the host must read the partial products of the individual working groups and add them up.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22

// Synchronous/blocking read of results status = clEnqueueReadBuffer( cmdQueue, vecC_d, CL_TRUE, 0, datasizeC, vecC_h, 0, NULL, NULL); clerr_chk(status); // Block until all previously queued OpenCL commands in a // command-queue are issued to the associated device and have completed clFinish(cmdQueue); // check the result float result = 0.0; for (int i=0; i<iNumGroups; i++) { result += vecC_h[i]; }The full code from this chapter can be found in the 06-dot-product-reduction folder here.

Measuring kernel execution time

The execution time of kernel on the GPU can also be measured. For this we use the t.i. events - GPU devices can be required to report all events related to commands that we send by command line. Two interesting events are the beginning and the end of the kernel implementation. To measure the execution time of a kernel we need to:

- Enable the use of events when creating command types:

1 2 3 4 5

cmdQueue = clCreateCommandQueue( context, devices[0], CL_QUEUE_PROFILING_ENABLE, // enable profiling &status);- The command to start the kernel is associated with the event (last argument)

1 2 3 4 5 6 7 8 9 10 11 12 13

cl_event event; // Launch kernel status = clEnqueueNDRangeKernel( cmdQueue, ckKernel, 2, NULL, szGlobalWorkSize, szLocalWorkSize, 0, NULL, &event); // link to event clerr_chk(status);- after starting the kernel we are waiting for the event:

1

clWaitForEvents(1, &event);When we transfer the results from the GPU back to the host, we use the OpenCL API of the clGetEventProfilingInfo function to read the time of the two events CL_PROFILING_COMMAND_START and CL_PROFILING_COMMAND_END and calculate the difference between them:1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16

cl_ulong time_start; cl_ulong time_end; clGetEventProfilingInfo(event, CL_PROFILING_COMMAND_START, sizeof(time_start), &time_start, NULL); clGetEventProfilingInfo(event, CL_PROFILING_COMMAND_END, sizeof(time_end), &time_end, NULL); double nanoSeconds = time_end-time_start; printf("Kernel Execution time is: %0.3f milliseconds \n",nanoSeconds / 1000000.0);The full code from this chapter can be found in the 07-dot-product-profiling folder here.

-

Matrix multiplication

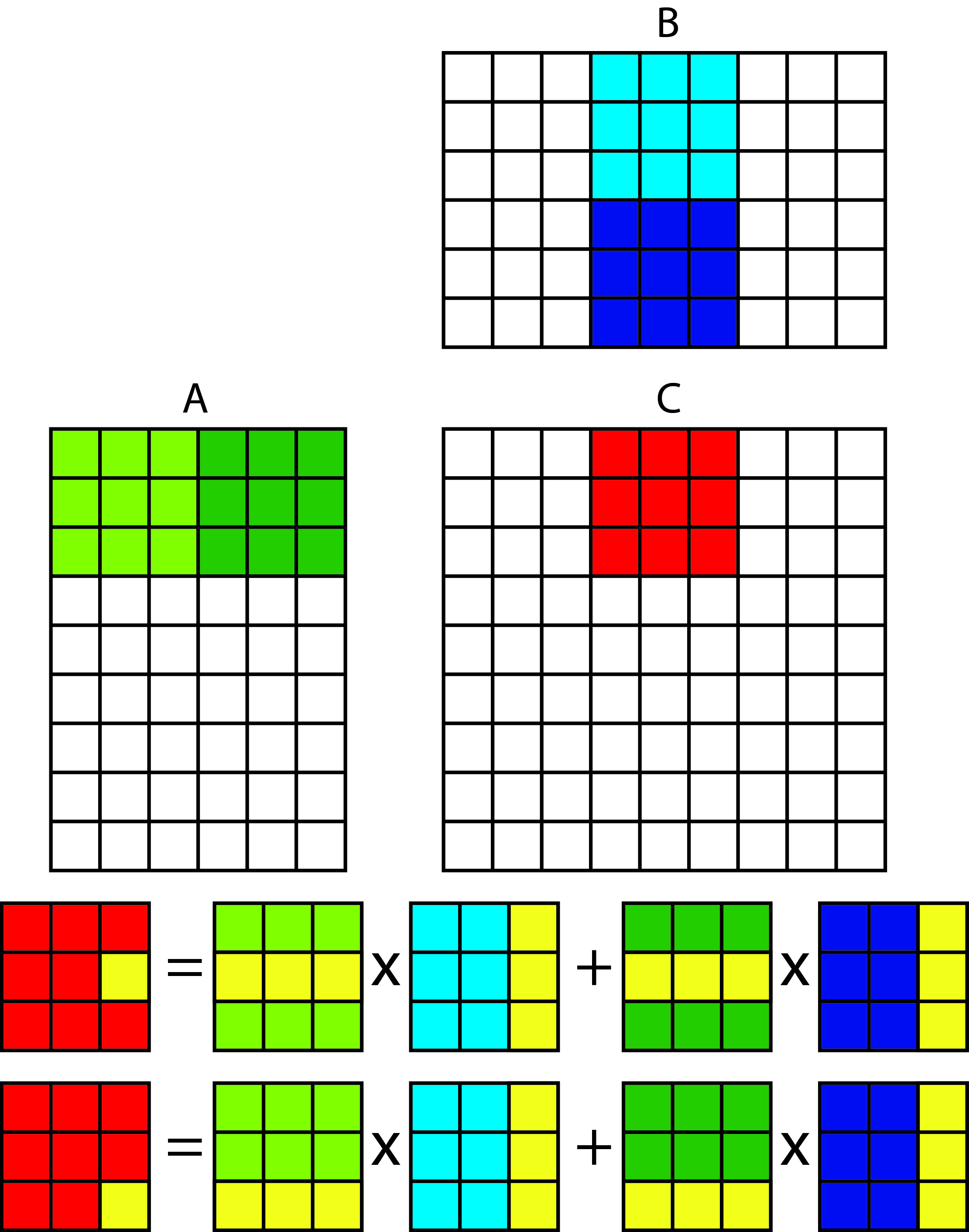

We are now tackling a problem that will help us understand the 2-dimensional space of the NDRange and the use of local memory to speed up kernel implementations on GPU devices. For this purpose, we will program the multiplication of matrices. Without loss of generality, we will confine ourselves to square matrices of size NxN. The multiplication of the square matrices A and B is shown in the figure below:

The element of the matrix C [i, j] is obtained by scalarly multiplying the i-th row of the matrix A and the j-th column of the matrix B. So, for each element of the matrix C we have to calculate one scalar product between the row from A and the column from B. the figure shows which rows and columns need to be scalarly multiplied by each other to obtain the elements C [1,2], C [5,2] and C [5,7]. For example, for C [5,7] we need to scalarly multiply the 5th row from matrix A and the 7th column from matrix B.

Naive matrix multiplication

With a simple version of matrix multiplication, we will first learn about the use of two-dimensional NDRange spaces.Kernel

This time, we’re not going to deal with the effectiveness of a kernel yet. We will prepare it so that each thread it will perform will calculate only one element of the product matrix.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19

__kernel void matmul_naive( __global float* matA, __global float* matB, __global float* matC, int N){ int i = get_global_id(1); // row (Y) int j = get_global_id(0); // column (X) float dp; dp = 0.0; for (int k = 0; k < N; k++){ dp += matA[i*N + k] * matB[k*N + j]; } matC[i*N + j] = dp; }Since the elements of the matrices are organized in a 2-dimensional field, it is most natural that we also organize the threads into a two-dimensional space NDRange. Each thread will now be defined by a pair of global indexes (i, j). In the above code, therefore, each thread first obtains its global index by calling the get_global_id function (lines 8 and 9).

8 9

int i = get_global_id(1); // row (Y) int j = get_global_id(0); // column (X)Each thread then calculates the scalar product between the i-th row in matrix A and the j-th column in matrix B and stores the result in element C [i, j] of the product matrix (rows 13 to 18).Host program

The program on the host must reserve space for all three matrices on the host and initialize matrices A and B and reserve space in the device's global memory for three NxN size matrices (lines 20 to 34)20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53

cl_float* matA_h; cl_float* matB_h; cl_float* matC_h; // Size of data: size_t datasize = sizeof(cl_float) * iNumElements * iNumElements; // Allocate host arrays matA_h = (void *)malloc(datasize); matB_h = (void *)malloc(datasize); matC_h = (void *)malloc(datasize); // init arrays: for (int i = 0; i<iNumElements; i++ ) { for (int j = 0; j<iNumElements; j++ ) { matA_h[i*iNumElements + j] = 1.0; matB_h[i*iNumElements + j] = 1.0; } } cl_mem matA_d; // Input array on the device cl_mem matB_d; // Input array on the device cl_mem matC_d; // Output array on the device s // Use clCreateBuffer() to create a buffer object (d_A) // that will contain the data from the host array A matA_d = clCreateBuffer( context, CL_MEM_READ_ONLY, datasize, NULL, &status); clerr_chk(status); // do the same for matB_d an d matC_d ...In addition, the program on the host must set the global size of the NDRange space and the number of threads in the workgroup.

1 2

const size_t szLocalWorkSize[2] = {16, 16}; const size_t szGlobalWorkSize[2] = {N, N};In the example above, we determined that we would run NxN threads in two-dimensional NDRange space, and that each workgroup would contain 16x16 threads.The kernel on the GPU device is started with the clEnqueueNDRangeKernel function, where the third argument determines that the NDRange space is two-dimensional.1 2 3 4 5 6 7 8 9 10

status = clEnqueueNDRangeKernel( cmdQueue, ckKernel, 2, NULL, szGlobalWorkSize, szLocalWorkSize, 0, NULL, NULL);Multiplication of matrices by tiles using local memory

The previous matrix multiplication code has quite a few serious flaws. Each thread reads 2xN elements from the global memory: a row from matrix A and a column from matrix B. On close observation we see that all threads that compute an element of matrix C in the same row access the same row of matrix A, but each to a different column of matrix B. Worst of all, when accessing columns in matrix B, we do not consider coordinated access to global memory. Adjacent threads do not access adjacent elements of matrix B, which greatly slows down the execution of the kernel, as two adjacent elements in a column can be more than 128 bytes apart in long rows and fall into different segments. In such a case, a separate memory access is required for each column element from matrix B!

On the other hand, it is known that the multiplication of matrices can be performed by tiles - we multiply smaller submatrices (tiles), as shown in the figure below. We will therefore obtain the same result if we calculate the elements of submatrix C gradually by multiplying the submatrices (tiles) of matrix A and matrix B. Following the picture above, the idea is as follows: First load the light green plate of matrix A and the light blue plate of matrix B into the local memory and make them together. Thus we get a partially calculated red tile of matrix C. In the next step we load a dark green tile from matrix A and a dark blue tile from matrix B, multiply them and add the result to the red tile from matrix C. Since in some step both tiles from matrices A and B in local memory, we have no problem with coordinated access to global memory. On top of that, access to local memory is 100 times faster than access to global memory!The kernel that multiplies the matrix by tiles is represented by the code below.

We will therefore obtain the same result if we calculate the elements of submatrix C gradually by multiplying the submatrices (tiles) of matrix A and matrix B. Following the picture above, the idea is as follows: First load the light green plate of matrix A and the light blue plate of matrix B into the local memory and make them together. Thus we get a partially calculated red tile of matrix C. In the next step we load a dark green tile from matrix A and a dark blue tile from matrix B, multiply them and add the result to the red tile from matrix C. Since in some step both tiles from matrices A and B in local memory, we have no problem with coordinated access to global memory. On top of that, access to local memory is 100 times faster than access to global memory!The kernel that multiplies the matrix by tiles is represented by the code below.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42

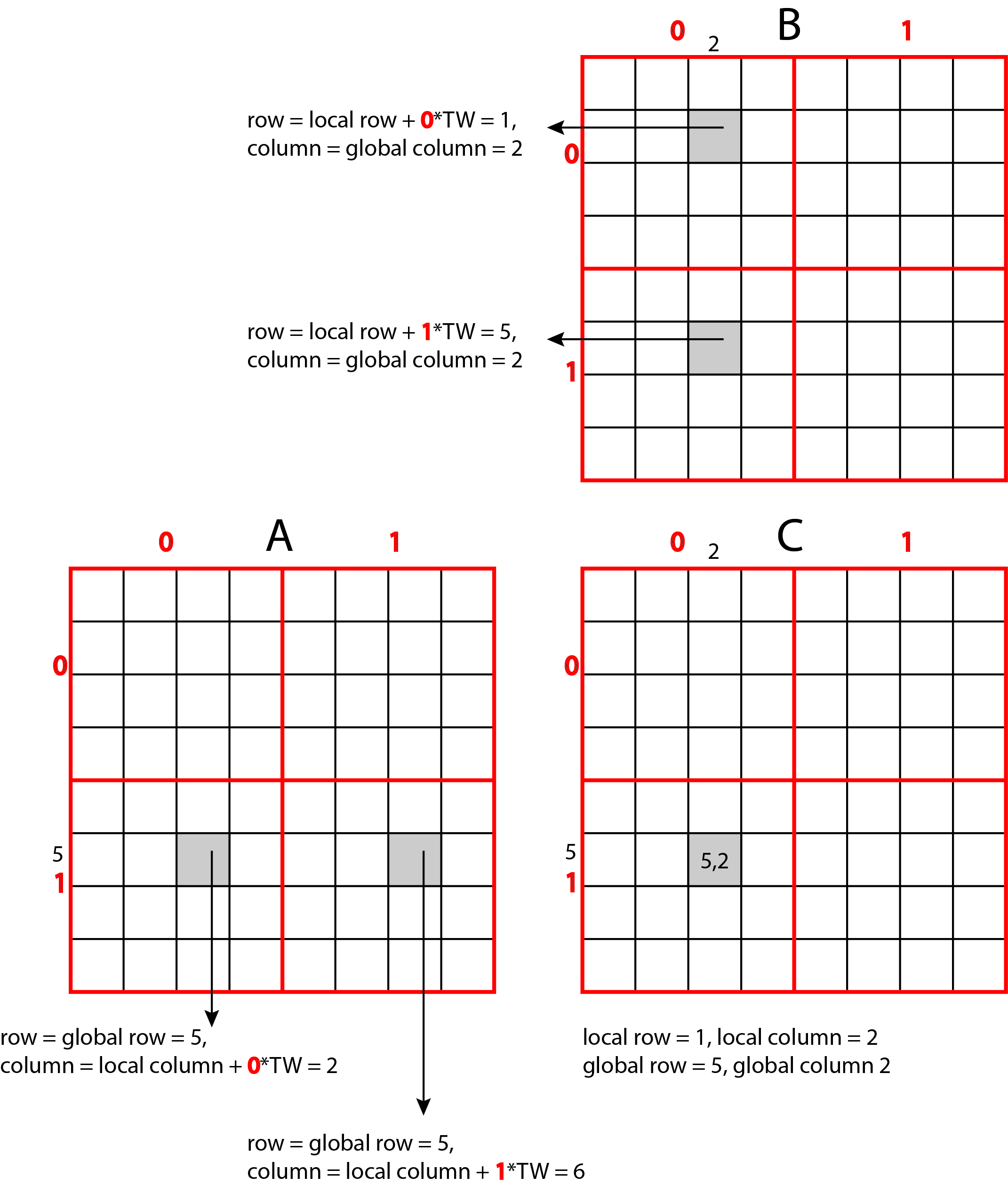

#define TW 16 __kernel void matmul_tiled( __global float* matA, __global float* matB, __global float* matC, int N){ // Local memory to fit the tiles __local float tileA[TW][TW]; __local float tileB[TW][TW]; // global thread index int rowGID = get_global_id(1); // row in NDRange int colGID = get_global_id(0); // column in NDRange // local thread index int rowLID = get_local_id(1); // row in tile int colLID = get_local_id(0); // column in tile float dp = 0.0; // Iterate through all the tiles necessary to calculate the products: for (int iTile = 0; iTile < N/TW; iTile++){ // Collaborative load of tiles: // - Load a tile of matrix A into local memory: tileA[rowLID][colLID] = matA[rowGID*N + (colLID + iTile*TW)]; // - Load a tile of matrix B into local memory: tileB[rowLID][colLID] = matB[(rowLID + iTile*TW)*N+colGID]; // Synchronise to make sure the tiles are loaded barrier(CLK_LOCAL_MEM_FENCE); for(int k = 0; k < TW; k++) { dp += tileA[rowLID][k] * tileB[k][colLID]; } // Wait for all WI to finish before loading next tile: barrier(CLK_LOCAL_MEM_FENCE); } // save the dot product into matrix C in global memory: matC[rowGID*N + colGID] = dp; }Each thread in the for loop walks through all the tiles needed to calculate its element of the matrix C (lines 23 to 38).

23 24 25

for (int iTile = 0; iTile < N/TW; iTile++){ ... }In each iteration, the for loops of the threads from the same workgroup first transfer the tiles from matrix A and matrix B to the local memory and wait at the obstacle (lines 24 to 31).

24 25 26 27 28 29 30 31 32

// Collaborative load of tiles: // Load a tile of matrix A into local memory: tileA[rowLID][colLID] = matA[rowGID*N + (colLID + iTile*TW)]; // Load a tile of matrix B into local memory: tileB[rowLID][colLID] = matB[(rowLID + iTile*TW)*N+colGID]; // Synchronise to make sure the tiles are loaded barrier(CLK_LOCAL_MEM_FENCE); }Here we need to stop for a moment and explain the indexes we use when reading tiles from the device’s global memory. Assume that the tiles are of dimension TWxTW, where TW = 4, and that N = 8. Assume that the thread computes element C [5,2]. This element belongs to a tile with an index (1.0). The thread responsible for calculating element C [5,2] has a global index (5,2) and a local index (1,2). This thread must now access tiles (1,0) and (1,1) from matrix A and tiles (0,0) and (1,1) from matrix B. Thread (5,2) will be in charge of loading the following elements to local memory:- A (5,2) and B (1,2) in the first iteration and

- A (5.6) and B (5.2) in the second iteration.

The figure below shows the relationship between the local index of each tile element, and the local and global index of the thread it reads. The code on the host will remain the same as in the naive multiplication of matrices, we just need to load a kernel of matmul_tiled.

The code on the host will remain the same as in the naive multiplication of matrices, we just need to load a kernel of matmul_tiled.

The full code from this chapter can be found in the 08-matrix-mul folder here. -

Image processing

Edge enhancement is an image filter that increases the contrast of edges. The edge is defined as a significant local change in image intensity. At the ideal step, the intensity change occurs in a single pixel step. Ideal edges are very rare in images, especially if the images are smoothed beforehand to remove noise from them. Changes in intensity therefore occur at several consecutive pixels, such an edge is called a ramp. The edge highlight filter works by recognizing sharp edges in the image (for example, the edge between the subject and the background) and increasing the contrast of the image in the area directly around the edge. This makes the edge look more defined. The image below shows an example of highlighting edges:Input image Output image

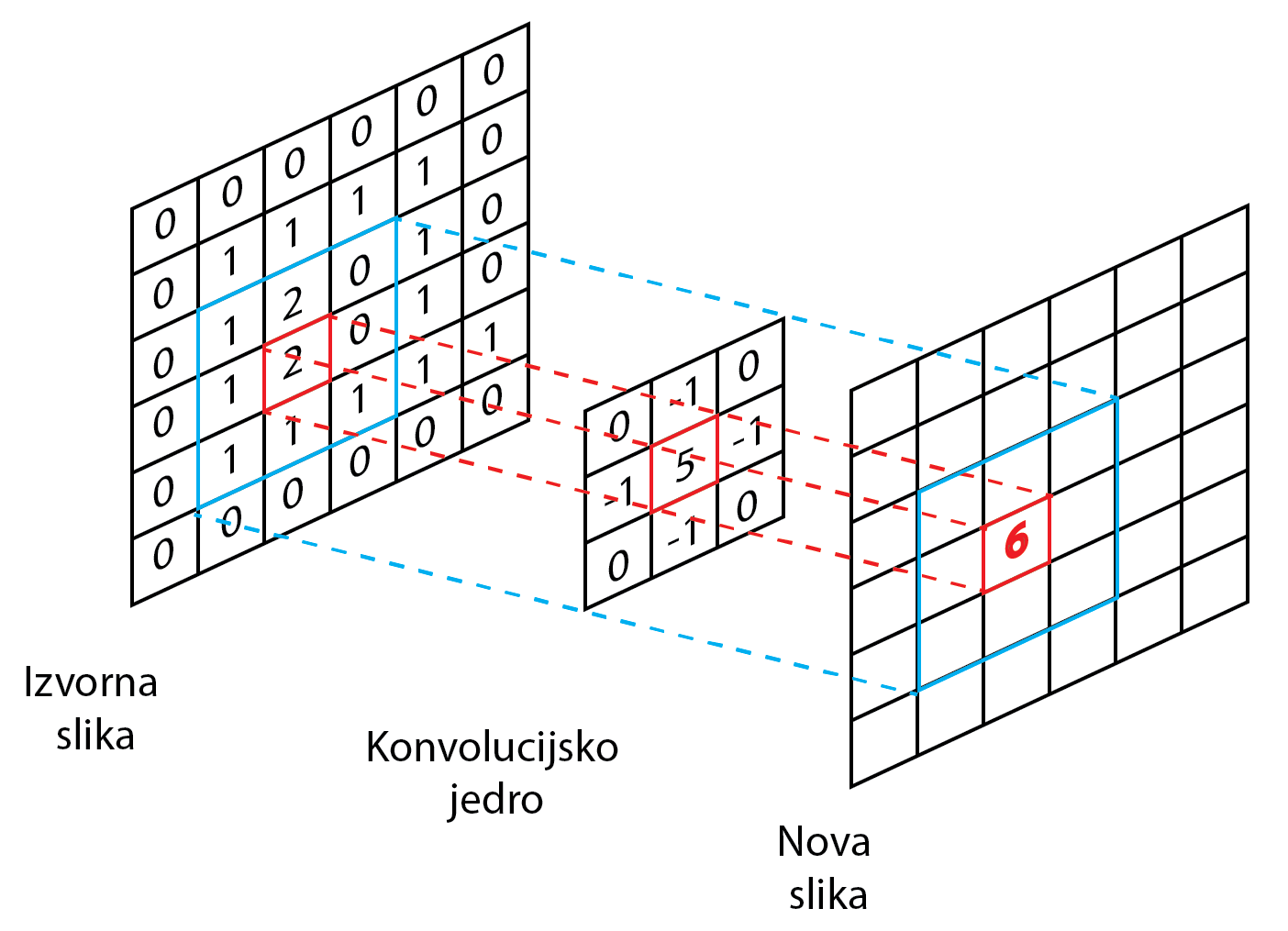

We use a 3x3 Laplace core for the edge highlighting process. We multiply each pixel and its surroundings in the original image by the Laplace core, add the products, and use the sum for the value of the pixel in the new image with the highlighted edges. We call this process convolution. The figure below shows one step of the convolution with the Laplace nucleus.

We use a 3x3 Laplace core for the edge highlighting process. We multiply each pixel and its surroundings in the original image by the Laplace core, add the products, and use the sum for the value of the pixel in the new image with the highlighted edges. We call this process convolution. The figure below shows one step of the convolution with the Laplace nucleus. The figure shows the Lapac core, which we will use to highlight the edges in the figure. We see how we emphasize the value of the pixel with a value of 2, which forms an edge with a pixel on its right (value 0). The new value, 6, is obtained by placing the core on the original image, multiplying the identical elements and adding the partial products.

The figure shows the Lapac core, which we will use to highlight the edges in the figure. We see how we emphasize the value of the pixel with a value of 2, which forms an edge with a pixel on its right (value 0). The new value, 6, is obtained by placing the core on the original image, multiplying the identical elements and adding the partial products.Working with pictures

We will use the publicly accessible STB library to read images from disk to memory and write back to disk. The library is quite extensive, but we will only use the stbi_load and stbi_write functions, which are defined in the stb_image.h and stb_image_write.h headers. Instructions for using the functions can be found here.Images in memory

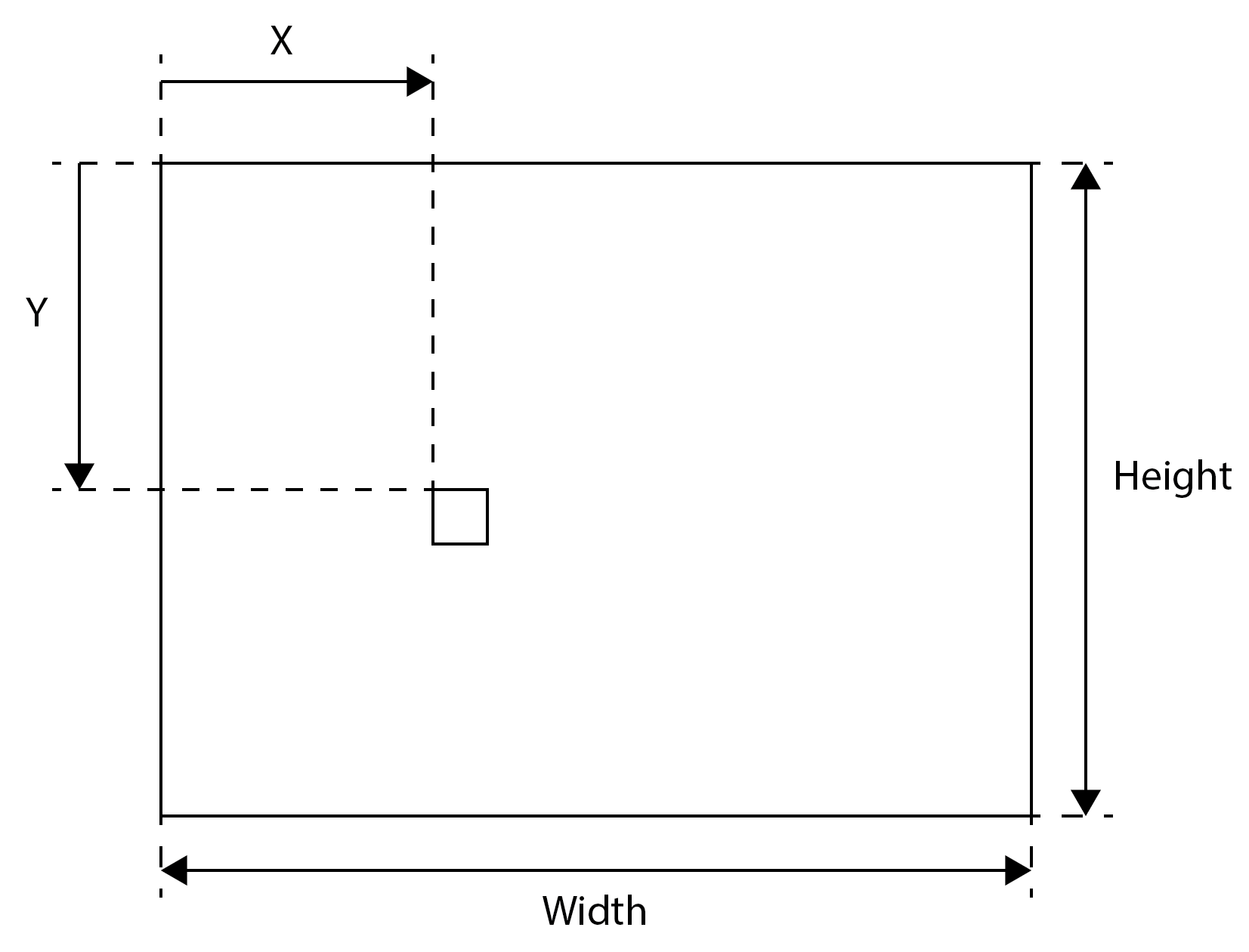

We will first read the images from the files on the disk into memory. Images are made up of a multitude of pixels. For grayscale images, one pixel is usually represented by eight bits that define the grayscale level (from black to white). The image plane of the Width x Height dimension is represented in the memory as a vector of image points, whereby an individual image point is accessed as follows: image [Y x Width + X]. Access to each image point is shown in the image below. The quantities X (column), Y (row), Width (width) and Height (height) are expressed in pixels.

The quantities X (column), Y (row), Width (width) and Height (height) are expressed in pixels.

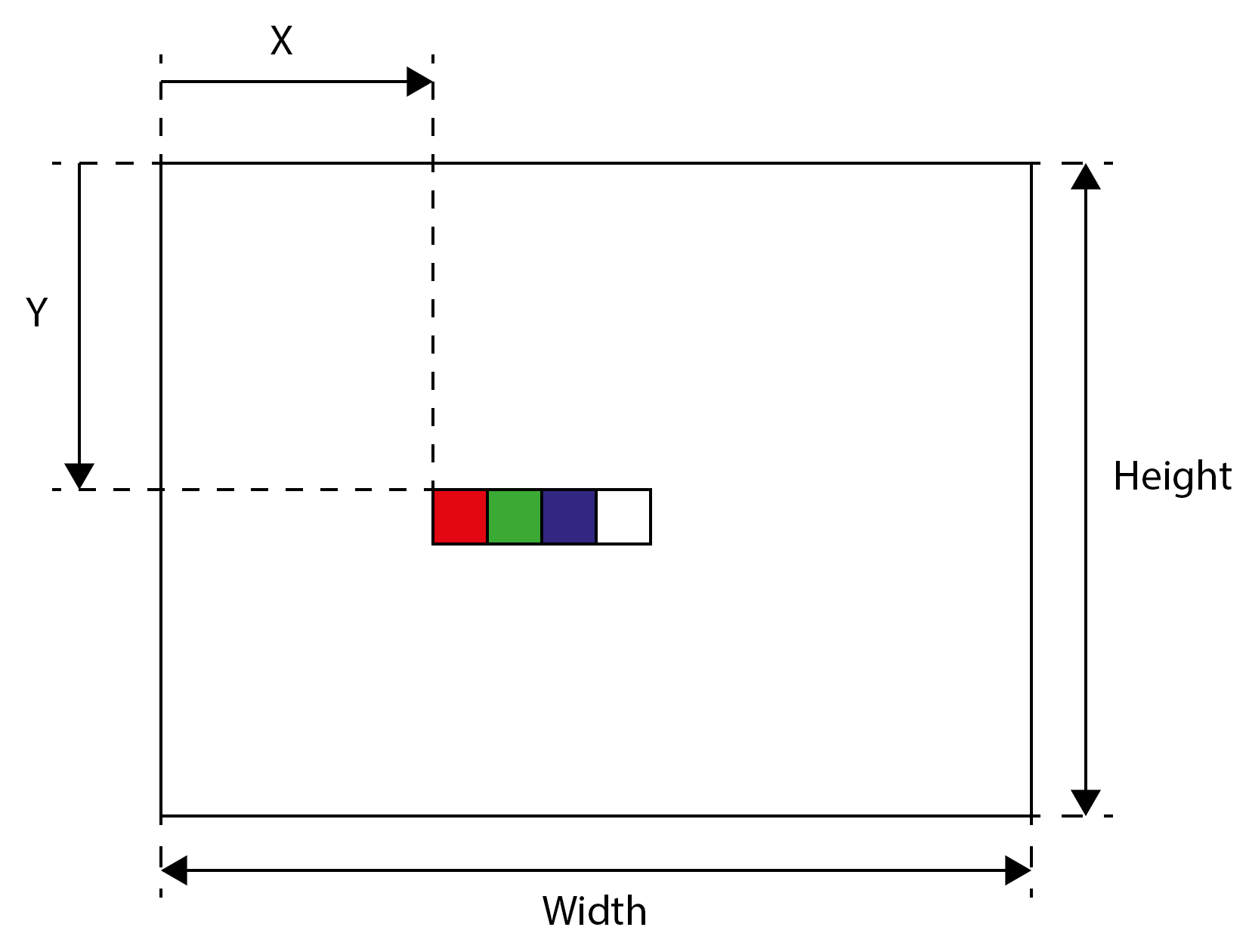

For color images, typically each pixel is represented by 4 x 8 bits or four bytes. The first three bytes specify the three color components (RGB, Red, Green, and Blue), while for certain records (such as png), the last byte specifies transparency. We also say that an image consists of four channels. Each pixel is therefore represented in memory by four values, each value being written by one byte. The four-channel image of the Width x Height dimensions in memory is shown in the image below. An individual pixel is now accessed as follows: image [(Y x Width + X) x CPP + channel], where CPP is the number of channels per pixel and can be between 1 and 4, channel however, the channel index is from 0 to 3.

An individual pixel is now accessed as follows: image [(Y x Width + X) x CPP + channel], where CPP is the number of channels per pixel and can be between 1 and 4, channel however, the channel index is from 0 to 3.

In the following, we will limit ourselves to four-channel images (even if they are gray) and we will write them to memory with the stbi_load function. In the case of grayscale images (Rn channel), the last three bytes of the pixel will be unused. At first glance, the solution is wasteful, as grayscale images take up four times as much memory space, but we will have the same code to process all types of images because of this.Kernels to emphasize the edges

Each thread will calculate a new value of one pixel in the image. For this purpose, with 3x3 filters, it will have to access eight more pixels in the immediate vicinity of the pixel for which it calculates the new value. With a selected Laplace kernel, it is sufficient to access only four adjacent pixels at which the kernel has nonzero values.

In the following, we present four kernels - from the most inefficient and at the same time the simplest to the most efficient and the most complex.Naive filter for powdering edges

The simplest implementation of a sharpenGPU kernel to use an edge highlight filter is shown in the code below.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31

//Simplest kernel (memory accesses not optimal) __kernel void sharpenGPU(__global unsigned char *imageIn, __global unsigned char *imageOut, int width, int height, int cpp) { int y = get_global_id(0); int x = get_global_id(1); if (x < width && y < height) { // for each color channel for (int c = 0; c < cpp; c++) { unsigned char px01 = getIntensity(imageIn, y - 1, x, c, height, width, cpp); unsigned char px10 = getIntensity(imageIn, y, x - 1, c, height, width, cpp); unsigned char px11 = getIntensity(imageIn, y, x, c, height, width, cpp); unsigned char px12 = getIntensity(imageIn, y, x + 1, c, height, width, cpp); unsigned char px21 = getIntensity(imageIn, y + 1, x, c, height, width, cpp); short pxOut = (5 * px11 - px01 - px10 - px12 - px21); if (pxOut > 255) pxOut = 255; if (pxOut < 0) pxOut = 0; imageOut[(y * width + x) * cpp + c] = (unsigned char)pxOut; } } }In the for loop, we walk across all the channels and for each channel separately, the thread reads the values of five pixels from the image: the pixel for which it calculates the new value, and its four neighbors (left, right, top and bottom), as required by the Laplace core . To access the values of individual pixels, prepare the getIntensity function:

1 2 3 4 5 6 7 8 9 10 11 12

//Helper function to extract intensity value for a pixel form image inline unsigned char getIntensity( __global unsigned char *image, int y, int x, int channel, int height, int width, int cpp) { if (x < 0 || x >= width) return 0; if (y < 0 || y >= height) return 0; return image[(y * width + x) * cpp + channel]; }Such a kernel is simple, but it has one serious drawback. As we have written many times, access to main memory is always done by all threads from the bundle at the same time and is always 128 bytes long - four bytes per thread. In our case, each thread needs only one of four bytes in one iteration, but reads all four when reading and discards unnecessary ones! Then in the next iteration it accesses the same segment in the global memory again, even though it accessed this segment in the previous iteration! But what if, in the previous iteration, she simply discarded the data she didn’t need at the time.The two-dimensional space of NDRange is set as follows:1 2 3 4 5

const size_t szLocalWorkSize[2] = {16, 16}; const size_t szGlobalWorkSize[2] = { ((width - 1) / szLocalWorkSize[1] + 1) * szLocalWorkSize[1], ((height - 1) / szLocalWorkSize[0] + 1) * szLocalWorkSize[0] };Threads will be implemented in 16 x 16 workgroups. The total number of threads (global size of the NDRange space) depends on the size of the input image and must be divisible by the size of the workgroup in both dimensions, in our case 16. Because the dimensions of the input images are not necessarily divisible by 16, when determining the number of threads, round the size of the image upwards so that it is divisible by 16.A kernel of sharpenGPU is performed in 1.39 milliseconds on an Nvidia Tesla K40 GPE when processing an image of 2580x1319 pixels:[patriciob@nsc-login 09-image-filter]$ srun prog sharpenGPU celada_in.png celada_out.png Loaded image celada_in.png of size 2580x1319. Program size = 5894 B Kernel Execution time is: 1.388 millisecondsEdge highlighting filter with regard to the organization of data in memory

A more efficient kernel is obtained by considering the mechanism of access to global memory (memory coalescing). The thread in the bundle always has access to at least four bytes, so it's a good idea to take advantage of this - read all four channels with one read! We use OpenCL vector data types for this purpose. Vector data types allow us to perform operations on short vectors of length 2, 4, 8 or 16 elements. The arithmetic operation is performed simultaneously over the parallel elements of the vectors. The code below shows a kernel of sharpenGPU_vector, which reads all four channels into a vector of length four at a time and performs the operation required by the Laplace kernel over all elements of the vector (all channels) at once.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19

__kernel void sharpenGPU_vector(__global uchar4 *imageIn, __global uchar4 *imageOut, int width, int height) { int y = get_global_id(1); int x = get_global_id(0); if (x < width && y < height) { short4 px01 = getIntensity4(imageIn, y - 1, x, height, width); short4 px10 = getIntensity4(imageIn, y, x - 1, height, width); short4 px11 = getIntensity4(imageIn, y, x, height, width); short4 px12 = getIntensity4(imageIn, y, x + 1, height, width); short4 px21 = getIntensity4(imageIn, y + 1, x, height, width); short4 pxOut = (short4)(5) * px11 - px01 - px10 - px12 - px21; imageOut[y * width + x] = convert_uchar4_sat(pxOut); } }The uchar4-type kernel arguments are vectors containing four 8-bit values (uchar type). Similarly, short4 is a vector of four 16-bit short data. The openCL function convert_uchar4_sat (line 17) explicitly converts the vector of 16-bit short4 elements into the vector of 8-bit uchar4 elements using saturation arithmetic. To vector read individual pixels from global memory, use the getIntensity4 function:1 2 3 4 5 6 7 8 9

inline short4 getIntensity4(__global uchar4 *image, int y, int x, int height, int width) { if (x < 0 || x >= width) return (short4)(0); if (y < 0 || y >= height) return (short4)(0); return convert_short4(image[y * width + x]); }The openCL function convert_short4 performs an explicit conversion from the uchar4 vector to the short4 vector. This time, a kernel of sharpenGPU_vecotor on the Nvidia Tesla K40 GPE is performed in 0.66 milliseconds when processing an image of 2580x1319 pixels:

[patriciob@nsc-login 09-image-filter]$ srun prog sharpenGPU_vector celada_in.png celada_out.png Loaded image celada_in.png of size 2580x1319. Program size = 5894 B Kernel Execution time is: 0.662 millisecondsWe see that the acceleration due to the consideration of global memory accesses is considerable and therefore we must always be careful how the threads access the data in the global memory.Edge highlighting filter using local memory

In the previous kernels, each thread reads five pixels. Since each pixel in the image is adjacent to four pixels (left, right, top, and bottom), we will read each pixel from main memory four times. 256 threads in a 16x16 workgroup practically share adjacent pixels. Therefore, it is better to read a sub-image of the appropriate size from the image in the global memory to the local memory. This way, the threads in the workgroup will have all the necessary pixels in the local memory. Access to pixel data will be several hundred times faster, and we will read each pixel only once from global memory. In fact, we need to read 18x18 pixels into the local memory, because even at the edges of the workgroup they need an extra edge of pixels.The sharpenGPU_local_vector pin to highlight edges using local memory is represented by the code below.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48

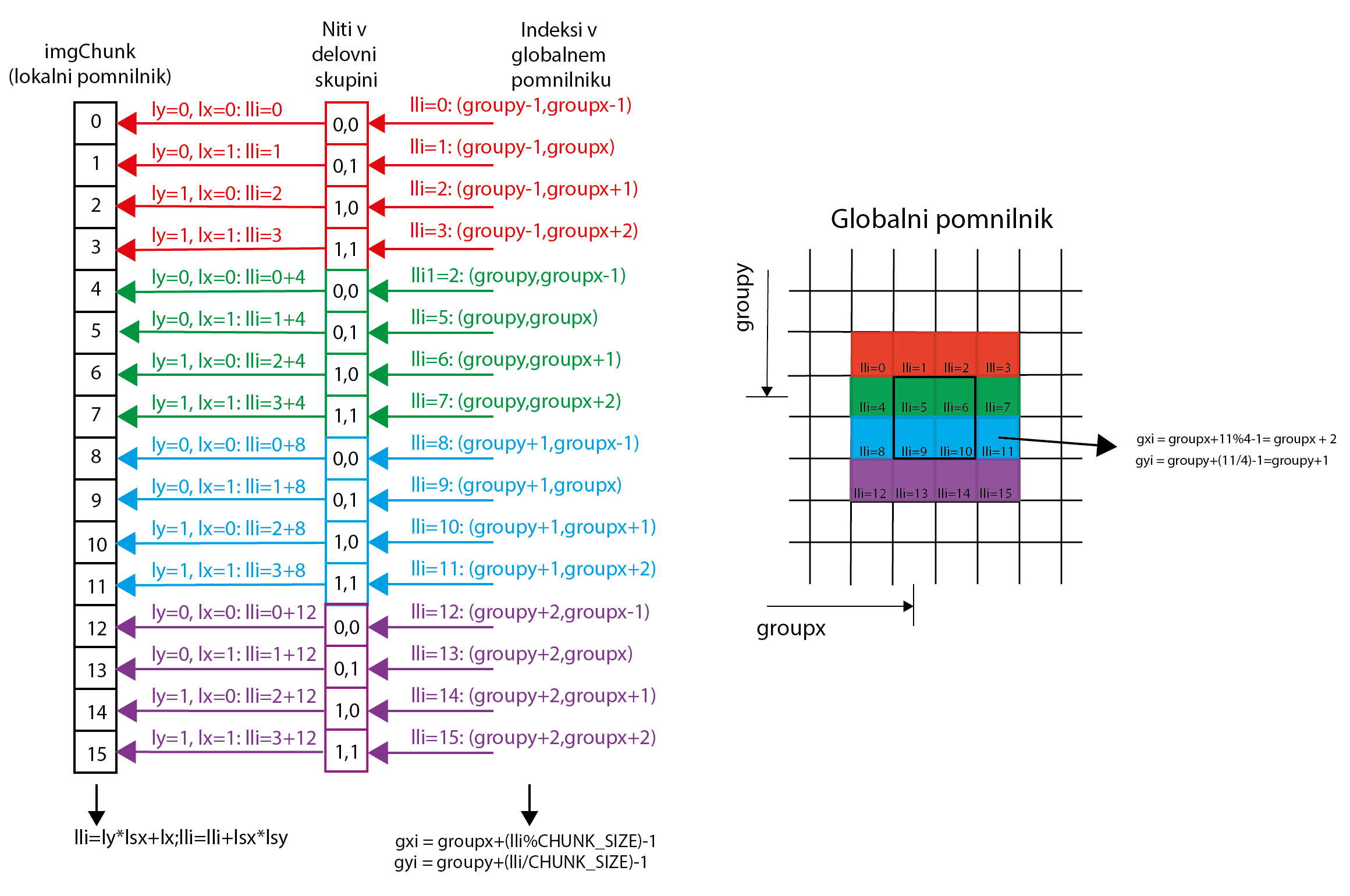

__kernel void sharpenGPU_local_vector_linear(__global uchar4 *imageIn, __global uchar4 *imageOut, int width, int height, int cpp) { int gy = get_global_id(1); int gx = get_global_id(0); int ly = get_local_id(1); int lx = get_local_id(0); int lsy=get_local_size(1); int lsx=get_local_size(0); // reserve local memory __local short4 imgChunk[CHUNK_SIZE * CHUNK_SIZE]; // load an image chunk to local memory // linearize local indices and move block through the image chunk for (int lli=ly*lsx+lx;lli<CHUNK_SIZE*CHUNK_SIZE;lli+=lsx*lsy){ int groupx=get_group_id(0)*lsx; // global X offset for the work-group int groupy=get_group_id(1)*lsy; // global Y offset for the work-group // Now calculate 2D global indices back from local linear ones: int gyi=groupy+(lli/CHUNK_SIZE)-1; // ROW: global Y offset + row in the workgroup int gxi=groupx+lli%CHUNK_SIZE-1; // COLUMN: global X offset + column in the workgroup // read pixel flom global to local memory imgChunk[lli]=getIntensity4(imageIn, gyi, gxi, height, width, cpp); } // wait for threads to finish barrier(CLK_LOCAL_MEM_FENCE); if (gx < width && gy < height) { int pxi = (ly + 1) * CHUNK_SIZE + (lx + 1); short4 px01 = imgChunk[pxi - CHUNK_SIZE]; short4 px10 = imgChunk[pxi - 1]; short4 px11 = imgChunk[pxi]; short4 px12 = imgChunk[pxi + 1]; short4 px21 = imgChunk[pxi + CHUNK_SIZE]; short4 pxOut = (short4)(5) * px11 - px01 - px10 - px12 - px21; imageOut[gy * width + gx] = convert_uchar4_sat(pxOut); } }First, the threads transfer a chunk of image from the global memory to the local memory (lines 19-32).Assume that CHUNK_SIZE = 4 and that the size of the workgroups is 2x2 (get_local_size() returns 2 in both dimensions). This means that 4 threads must transfer 16 elements from the global memory to imgChunk in the local memory. Note that imgChunk is declared as a 1D vector (line 12 in a kernel of sharpenGPU_local_vector) and not as a 2D box! Nor do they transfer data from global memory to local memory, as shown in the figure below:

To have access to consecutive words in global memory, for one block of thread we always calculate all the linearized indices (lli) of the elements in imgChunk that the individual threads in the block carry. These linearized indexes are also used to determine the global memory elements that each thread transmits (picture on the right) with the following code:

25 26 27

// Now calculate 2D global indices back from local linear ones: int gyi=groupy+(lli/CHUNK_SIZE)-1; // ROW: global Y offset + row in the workgroup int gxi=groupx+lli%CHUNK_SIZE-1; // COLUMN: global X offset + column in the workgroupwhere groupx and groupy determine the global offset of the workgroup in global memory.Before calculating the output image point, all threads wait at the obstacle. Each thread then calculates a new value for its pixel, reading the sub-image from local memory (imgChunk):34 35 36 37 38 39 40 41 42 43 44 45 46 47

// wait for threads to finish barrier(CLK_LOCAL_MEM_FENCE); if (gx < width && gy < height) { int pxi = (ly + 1) * CHUNK_SIZE + (lx + 1); short4 px01 = imgChunk[pxi - CHUNK_SIZE]; short4 px10 = imgChunk[pxi - 1]; short4 px11 = imgChunk[pxi]; short4 px12 = imgChunk[pxi + 1]; short4 px21 = imgChunk[pxi + CHUNK_SIZE]; short4 pxOut = (short4)(5) * px11 - px01 - px10 - px12 - px21; imageOut[gy * width + gx] = convert_uchar4_sat(pxOut); }This time kernel of sharpenGPU_local_vector on the Nvidia Tesla K40 GPE is performed in 0.56 milliseconds when processing a 2580x1319 pixel image:

[patriciob@nsc-login 09-image-filter]$ srun prog sharpenGPU_local_vector_linear celada_in.png celada_out.png Loaded image celada_in.png of size 2580x1319. Program size = 7000 B Kernel Execution time is: 0.557 millisecondsWe see that the use of local memory brings us additional performance speed, as each image element is read only once from the global memory (except for image elements at the edges, which are read twice). Compared to the naive version of the kernel, the acceleration is 2.5 times! The latter finding should not surprise us, as we know that the bottleneck in computers is actually DRAM memory, and by considering the organization of data in DRAM memory, how to access DRAM memory, and caching (since local memory is a software-controlled cache), we can significantly influence time implementation of the programs.

The full code from this chapter can be found in the 09-image-filter folder here.

-